Chapter 10 Partial and Individual Permutation Feature Importance

Author: Moritz Wagner

Supervisor: Giuseppe Casalicchio

The introductory chapter discussed the relevance of PFI and LOCO for the interpretability of machine learning models. It was argued that for interpretation purposes, the concept of Feature Importance is an indispensable complement to analyzing Feature Effects. The Feature Importance quantifies the contribution of a specific feature to the prediction power of a model. Yet, it was also remarked that an aggregated, global measure of Feature Importance might be insufficient. It might be the case that within a feature, some single values or subgroups are more important for predictions than others. This heterogeneity, however, could not be captured by a single metric. Besides, it is trivial to understand that with increasing heterogeneity, the global PFI becomes less revealing. By implication, a complementary method that captures such heterogeneity becomes more decisive.

To identify whether such heterogeneity exists, an algorithm is required that calculates the Feature Importance for each value of the respective feature. Briefly, a measure that indicates the contribution of an individual value to the global Feature Importance. However, as the range of values can be rather large, a tabular description seems to be cluttered. Therefore, a visualization tool that allows gaining meaningful and concise insights on how the Feature Importance varies, should be derived. One would then plot the respective values of the feature against its local Feature Importance measures. In the case of heterogeneity, the plotted curve should then deviate from a constant shape.

Following the objective of visualization, one can make use of the concepts of Partial Dependence and Individual Conditional Expectation, as these methods allow us to detect heterogeneity in the context of Feature Effects. Casalicchio, Molnar, and Bischl (2018) avail themselves from these concepts and transfer them to the concept of Feature Importance, by introducing the metrics Partial Importance (PI) and Individual Conditional Importance (ICI) (see Goldstein et al. (2013)). They show that these methods enable to detect subgroups with differing levels of Feature Importance and are, therefore when striving for a complete picture, non-negligible complements to the global PFI metric.

However, it might be of interest to not only reliably detect heterogeneity but to also better understand its drivers. In general, it can be distinguished between three different sources for heterogeneity. First, if the relationship between a feature and the response is non-linear. Secondly, if features are correlated and third if interaction effects between features are existent. In what follows, it will be focused on the latter as the first represents no actual concern and the second was already discussed in the previous chapter.

As will be seen, uncovering interaction effects is not as straightforward, as the structural relationship between covariates and the outcome variables is unknown. Hence, from first glance, it is unclear whether there is just a non-linear relationship or whether indeed interactions between covariates exist. But if the PI or ICI method does not enable us to distinguish between these sources, the applicant does not gain a better understanding. If heterogeneity is not understood, one can hardly interpret the results and if so, the methods failed to a certain extent.

Hence, the motivation is clear. If interaction effects between covariates are existent, it should be, for the sake of the interpretability of a machine learning model, of major interest to detect them. Detecting them is an indispensable objective to be enabled to then explain them. And only if they can be explained, the heterogeneity in Feature Importance can be understood and the results can be interpreted accordingly.

To answer the questions, formulated above, the remaining subchapters are structured as follows: In chapter 10.1, the concepts of Partial Importance (PI) and Individual Conditional Importance (ICI) are theoretically introduced. This shall provide the reader with an in-depth understanding of how the Feature Importance can be visualized, both on an aggregated, global and disentangled, local level. With these preliminaries, the reader is equipped with sufficient knowledge to understand the following simulations (see chapter 10.2) which are meant to cover two broader topics.

In the subchapter 10.2.1, it will be focussed on to what extent the PI and ICI plots can uncover interaction effects between features. To give this a new angle of perspective, a new method, called "derivative-ICI" (see chapter 10.2.1.2) will be introduced.

The subchapter 10.2.2 will then discuss the issue of actually explaining the detected interaction effects. Pursuing this objective, an additional method will be introduced which will predict the global Feature Importance of the feature of interest based on the remaining features in the model (see chapter 10.2.2.1). A significant relationship between the PFI and at least one feature would then not only confirm the conjecture of interaction effects but also explain between which features these interactions took place.

The simulation chapter will then be closed by bringing the results together. With that, one can then calculate the respective conditional Feature Importance (see chapter 10.2.2.2). Plotting this provides the user with an exhaustive understanding of why the local Feature Importance differs between subgroups. Further, it even allows quantifying the difference in Feature Importance. Yet, the focus here will lay on the visualization and not on the direct quantification. The latter was already discussed by Casalicchio, Molnar, and Bischl (2018).

The whole simulation chapter serves as a "cookbook" on how to still reach meaningful and interpretable results when heterogeneity is driven by unobserved interaction effects. After this is completed, the methods will be verified on real data (see chapter 10.3). Pursuing this, a brief analysis is conducted on the Boston Housing Data.

The chapter is then closed by a summary and discussion of the methods (see chapter 10.4). This will include a final evaluation of the PI and ICI plots and thereby answer the question of whether the methods are useful or not.

10.1 Preliminaries on Partial and Individual Conditional Importance

Once the concept of the global PFI is clear, it will be shown that deriving the Partial Importance as well as the Individual Conditional Importance is straightforward. To be able to comprehend that, one should briefly recall that the global PFI of a feature \(S\) is defined as

\[\begin{align} PFI_{S} = E(L(f(X_{S}, X_{C}), Y)) - E(L(f(X), Y)) \label{eq:eq1}\tag{1} \end{align}\]where the first term corresponds to the theoretical generalization error of the model, including the permuted feature \(x_{S}\) and the second term depicts the generalization error resulting from the original model. The difference then gives the global PFI of feature \(S\). However, in the application, the joint distribution of \(X\) and \(Y\) is unknown so that the generalization error needs to be approximated by the empirical error. The first term of equation (1) is derived by the formula

\[\begin{align} \widehat{GE_{C}}(\hat{f}, D) = \frac{1}{n} \sum_{i = 1}^{n}\frac{1}{n} \sum_{k = 1}^{n}L(\hat{f}(X_{S}^{(k)}, X_{C}^{(i)}), y^{(i)}) \label{eq:e2}\tag{2} \end{align}\]which states that the empirical losses for all observations \(i \in \{i, ..., n\}\) are calculated respectively for each permutation \(k \in \{i, ..., n\}\) of \(X_{S}\) and averaged over \(n\). Here, \(\widehat{GE_{C}}(\hat{f}, D)\) is subscripted with \(C\) as it shall indicate the generalization error when only predicting with the remaining feature subset \(X_{C}\). Equivalently, the second term of equation (1) can be approximated by the formula

\[\begin{align} \widehat{GE}(f, D) = \frac{1}{n}\sum_{i = 1}^{n}L(f(x^{(i)}, y^{(i)}) \label{eq:eq3}\tag{3} \end{align}\]In equations (2) and (3), \(\hat{f}\) corresponds respectively to the fitted supervised machine learning model and \(D\) is defined as the underlying test data, sampled from a \(i.i.d\) distribution \(P\). Taking the difference of both approximations from equations (2) and (3) yields the formula for the global \(PFI_{S}\) which is defined as

\[\begin{align} \widehat{PFI}_{S} = \frac{1}{n^{2}}\sum_{i = 1}^{n}\sum_{k = 1}^{n}(L(\hat{f}(X_{S}^{(k)}, X_{C}^{(i)}), y^{(i)}) - L(f(x^{(i)}, y^{(i)}) \label{eq:eq4}\tag{4} \end{align}\]whereby calculating the global PFI becomes computationally expensive when n is large as the iteration scales with \(O(n^{2})\). This issues becomes more apparent when considering the full set of possible permutations \((\tau_{1}, ..., \tau_{n!})\), resulting in an equation equivalent to formula (5) where the algorithm iterates over all \(n!\) permutations

\[\begin{align} \widehat{GE}_{C, perm}(\hat{f}, D) = \frac{1}{n}\sum_{i = 1}^{n} \frac{1}{n!}\sum_{k = 1}^{n!}L(f(x_{S}^{\tau_{k}^{(i)}}, x_{C}^{(i)}), y^{(i)}) \label{eq:eq5}\tag{5} \end{align}\]To circumvent the computational disadvantage, it is advisable to rather approximate \(GE_{C}(f,D)\) by \(GE_{C, approx}(f,D)\) which only entails a randomly selected set of m permutations, defined as

\[\begin{align} \widehat{GE}_{C, approx}(\hat{f}, D) = \frac{1}{n}\sum_{i = 1}^{n} \frac{1}{m}\sum_{k = 1}^{m}L(f(x_{S}^{\tau_{k}^{(i)}}, x_{C}^{(i)}), y^{(i)}) \label{eq:eq6}\tag{6} \end{align}\]This results in an approximated global PFI defined as

\[\begin{align} PFI_{S,approx} = \frac{1}{n \cdot m}\sum_{i = 1}^{n}\sum_{k = 1}^{m}(L(f(x_{S}^{\tau_{k}^{(i)}}, x_{C}^{(i)}), y^{(i)}) - L(f(x^{(i)}, y^{i})) \label{eq:eq7}\tag{7} \end{align}\]From there, Individual Conditional Importance can be computed. One can calculate the change in performance for each i-th observation by taking the summands from equation (7) which is defined as

\[\begin{align} \Delta L^{(i)}(x_{S}) = L(\hat{f}(x_{S}, x_{C}^{(i)}), y^{(i)}) - L(\hat{f}(x^{(i)}), y^{(i)}) \label{eq:eq8}\tag{8} \end{align}\]and repeat that for all permutations \(m\), resulting in \(m\) components \(\Delta L^{(i)}(x_{S}^{(k)})\) for each observation \(i\). Taking the average overall permutations yields the global PFI for observation \(i\) which can be interpreted as the individual contribution of the i-th observation to the global PFI metric.

In order to visualize the ICI, one can plot the pairs \(\Big\{(x_{S}^{(k)}, \Delta L^{i}(x_{S}^{(k)}))\Big\}_{k = 1}^{n}\). In the same manner, the partial importance (PI) which corresponds to the expected change in performance at a certain value of \(x_{S}\). The estimated PI can be derived by taking the pointwise average over all ICI curves at the respective fixed points of \(x_{S}\). This is equivalent to \(\widehat{PI}_{S}(x_{S}) = \frac{1}{n}\sum_{i = 1}^{n} \Delta L^{(i) (x_{S})}\). Equivalent to above, the PI curve can visualized by plotting the pairs \(\Big\{(x_{S}^{(k)}, \widehat{PI}_{S}(x_{S}^{(k)}))\Big\}_{k = 1}^{n}\).

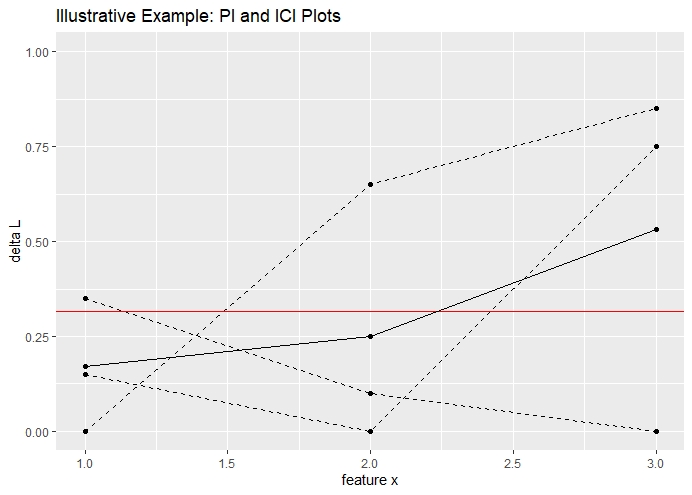

The visualization of both, the ICI curves and the PI curves is illustrated in figure 10.1. The illustration corresponds to an artificial dataset with only three observations. The dashed lines correspond to the respective ICI curves and the solid line illustrates the PI curve. The plot can be interpreted as follows. If the ICI curve takes the value 0, the original value of \(x_{S}\) was replaced by its original value and therefore, no change in performance occurred. Besides, it is also expected that if the distance between the original value and the replacing value increases, the difference in performance also increases. This is also confirmed by the shape of the ICI curves.

FIGURE 10.1: Visualization of PI and ICI plots based on an illustrative example. The visualization corresponds to three observations and a total of three permuted datasets. The dashed lines correspond to the ICI curves. The solid line corresponds to the PI curve.

As already theoretical described, averaging the ICI curves, yields the PI curve and taking the integral of the PI curve yields the global PFI of feature \(x_{S}\). In figure 10.1, the red line corresponds to the global PFI.

Note, that the exchangeability between PFI, PI and ICI depicts a convenient property for further analyses. The PI allows detecting regions with a higher or lower Feature Importance, whereby the ICI allows us to analyze individual observations and its contribution to the global PFI.

10.2 Simulations: A cookbook for using with PI and ICI

Simulations are a convenient choice to check statistical models for their correctness and validity. The following simulations are meant to guide the reader through a proposed step-by-step procedure which first, shall detect, then explain and lastly visualize interaction effects and their impact on a feature's heterogeneity in importance. Even though each step is motivated by a limitation from its preceding method, they should be considered as mutual complements that aim to derive a complete picture.

10.2.1 Detect Interactions

In general, two kinds of relationships between two covariates exist. First, the most common one, they are correlated. Second, the covariates do interact. Detecting correlation can be obtained by calculating the correlation matrix between the features. If two features are independent, the correlation is 0. The reverse, however, is not necessarily true as correlation measures only linear dependence. In such cases, a concept of information theory could be used. The metric "mutual information" describes the amount of information about one feature that is obtained when observing the other feature. Briefly, it quantifies the amount of shared information between features and therefore, measures implicitly the dependence between them. If the mutual information is 0, the features are indeed independent. This allows to even quantify interactions between features.

Hence, methods exist which can detect and even quantify the dependence between variables, apart from correlation. Now, it is to be clarified whether PI or ICI plots do have a similar power.

10.2.1.1 Partial Importance and Individual Conditional Importance plots

Again, the goal is to assess whether visualizations can detect interaction effects. To gain a first visual understanding of PI and ICI plots, consider the following data-generating model.

\[ y \, = \, 5x_{1} \, + \, 5x_{2} \, + x_{3} \, + \, \epsilon\] \[ x_{1} \, \overset{i.i.d}{\sim} \, \mathcal{N}(0,1), \, x_{2} \, \overset{i.i.d}{\sim} \mathcal{N}(0, 1) \, and \, x_{3} \overset{i.i.d}{\sim} B(1, 0.5),\, \epsilon \overset{i.i.d}{\sim} \mathcal{N}(0, 1)\]

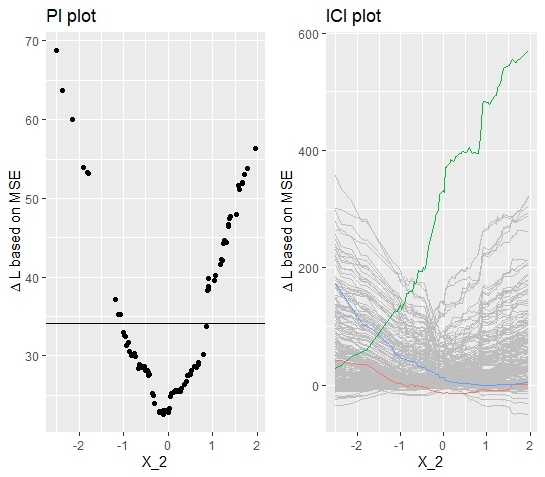

The model is simulated with 1000 observations which are split into 80% training and 20% test data. On the training data, a Random Forest model is fitted and based on the estimates, the Partial Importance and the Individual Conditional Importance are calculated on the test data. The latter is done as outlined in the preliminaries. In figure 10.2, the plots are visualized for feature \(w_{2}\).

FIGURE 10.2: Simulated data (simulation 1): PI Plot and ICI Plot for feature \(x_{2}\). Introductory example with no interaction effect. Still, heterogeneity is observed.

The PI plot indicates already a heterogeneous relationship, where the Partial Importance becomes large for large absolute values. The minimum is reached at around \(x_{2} = 0\). The respective ICI plot provides even more insights. It shows that some observations have a low local Feature Importance for large negative values and a large local Feature Importance for large positive values and vice-versa. As the shape of the curves are in both directions similar and the minimum is around \(x_{2} = 0\), it can be concluded that the feature \(x_{2}\) is equally distributed around its mean.

But how does this coincide with the fact that none of the above-discussed sources for heterogeneity are apparent in this simulation? As expected, no heterogeneity should be observed and yet the heterogeneity is considerable. The observed heterogeneity is because inherently extreme input values are considered on average as more important. Extreme values are replaced by values that are on average further away from the original input value which in turn results in higher loss differences. This problem is getting worse when choosing loss functions that penalize large errors more extreme. Briefly, the heterogeneity should be higher with a L2-loss compared to a more robust L1-loss.

Hence, the plots might be misleading as it should not be concluded that large positive or large negative values are more important. Therefore, if features are normally distributed, the shape of the curves should be considered as a baseline plot whereby only deviations from there can be considered as a "true", interpretable or meaningful heterogeneity. Yet, keeping that in mind, the PI and ICI plots do explain the heterogeneity to the full extent.

With these baseline insights, one can now evaluate to what extent PI and ICI plots can detect heterogeneity which evolved through interaction effects. Following this objective, the following data-generating model is considered:

\[ y \, = \, x_{1} \, + \, 5x_{2} \, + 5x_{2} 1_{x_2 > 2, x_3 = 0} \, + \, \epsilon\]

\[ x_{1} \, \overset{i.i.d}{\sim} \, \mathcal{N}(0,1), \, x_{2} \, \overset{i.i.d}{\sim} \mathcal{N}(0, 4) \quad \text{and} \quad x_{3} \overset{i.i.d}{\sim} B(1, 0.5),\, \epsilon \overset{i.i.d}{\sim} \mathcal{N}(0, 1)\]

Besides the comparable linear relationship between the covariates and the outcome variable, the model contains additionally an interaction effect between \(x_{2}\) and \(x_{3}\). The model suggest that the feature \(x_{2}\) should become more important for values \(x_{2} > 2\) and \(x_{3} = 0\). Hence, the plots should reveal large values in this area.

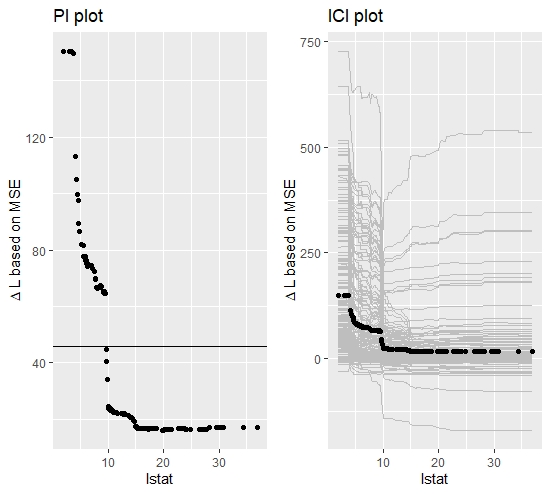

The respective PI and ICI plots (see figure 10.3) are at first glance quite similar to the plots, resulting from simulation 1. Yet, the above-mentioned differences can be observed. First, the PI plot reveals that the Feature Importance increases with a higher magnitude for large positive values, indicating that these observations are relatively more important. Looking at the ICI plot and highlighting the observations with the highest, the lowest and the median FI, yields some clearer insights. The blue curve corresponds to the observation with the largest contribution to the global PFI. Its initial value for \(x_{2}\) is beyond the threshold of 10 and the corresponding \(x_{3}\) takes the value 0. Hence, the interaction effect triggered and the feature became more important. The blue curve ascends decisively in the area around \(x_{2} = 2\). Once the threshold is reached, the fitted model does not trigger the interaction effect anymore and therefore, the predictions diverge increasingly. This observed property already indicates that an interaction effect is a major driver for heterogeneity.

Yet, the quite similar plots from simulation 1 and simulation 2 might cause problems to identify this interaction at first glance. Therefore, in what follows, an additional method will be introduced which aims for less ambiguous results. Besides, it is important to note that these plots still do not explain which features drive the heterogeneity in Feature Importance.

FIGURE 10.3: Simulated data (simulation 2): PI Plot and ICI Plot for feature \(x_{2}\). Visualizations correspond to data-generative model with interaction effect between \(x_{2}\) and \(x_{3}\).

10.2.1.2 d-ICI (derivative Individual Conditional Importance)

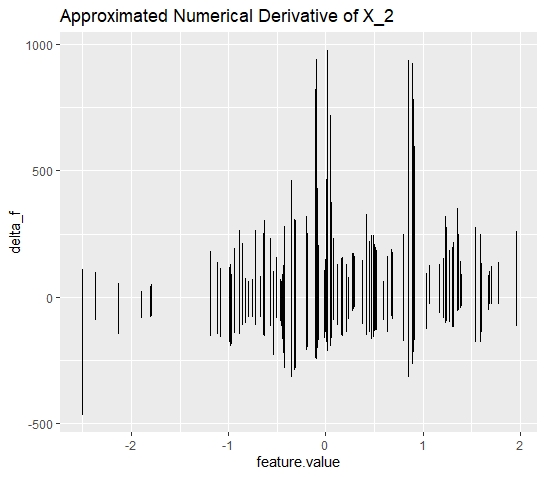

To supplement the results, yielded from the PI and ICI plots, a method will be proposed that was introduced by Goldstein et al. (2013) with the purpose to detect interaction effects in the context of analyzing Feature Effects.

By calculating the numerical derivative for each ICE curve, they show that the respective derivative plots enable to detect interaction effects. They argue that the derivatives should be constant over the range of values if no interaction effects exist. In the case of interaction effects, the derivatives should show a larger positive or negative magnitude at the point where the interaction effect takes place.

Taking this, we can transfer the theoretical concept from Feature Effects to Feature Importance by calculating the derivatives of each ICI curve respectively. It was already observed that an interaction triggers an initially higher level of Feature Importance which was represented in a sudden increase or decrease of the ICI curves that were affected by the interaction. This should be perfectly captured by the derivatives.

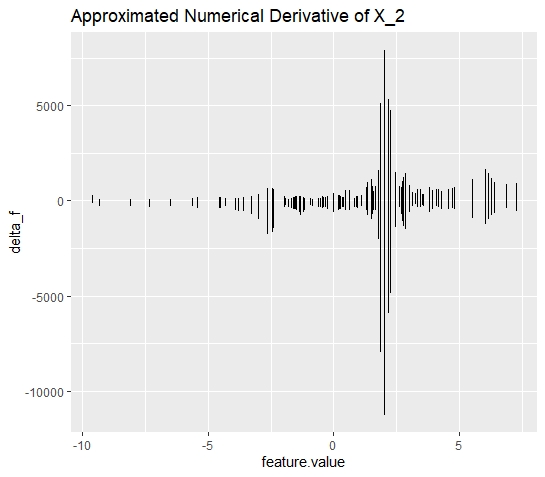

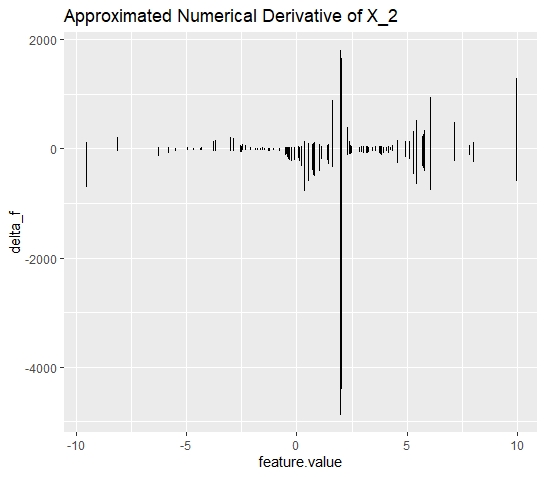

Even though the conceptual transfer seems straightforward, the interpretation of the derivative plots should be adjusted slightly. First, one should not expect that the plots are constant in the case when no interaction is existent. Figure 10.4 shows for the model with no interaction effect that, there is also some altitude in the derivatives. However, this is approximately equally distributed over the feature's range of values and therefore, it is reasonable to assume that there is no interaction effect.

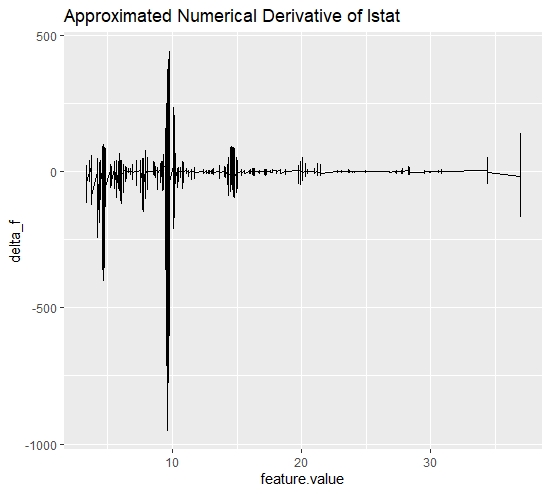

Secondly, by contrast to the derivative of the ICE curves, it is to be expected that the derivatives of the ICI curves are both, negative (descending curves) and positive (ascending curves). The respective d-ICI plot (see figure 10.5) for simulation 2 depicts a distinguished picture. Over the whole range of values, the derivatives are comparably low in magnitude, except for the derivatives at \(x_{2} = 2\).

Without going into further detail at this point, one can conclude that d-ICI plot seems to be a valid method to obtain a more robust indicator for interaction effects. Besides, the plots directly reveal where the interaction effect triggers which constitutes an important property when aiming for better interpretability.

FIGURE 10.4: Simulated data (simulation 1): d-ICI plot for feature \(x_{2}\). The d-ICI plot shows a less clear structure of the derivatives. This corresponds to a the case where the respective feature does not interact

FIGURE 10.5: Simulated data (simulation 2): d-ICI plot for feature \(x_{2}\). The d-ICI plots shows a distinguished amplitude at \(x_{2} = 2\). This finding is in line with the interaction effect between \(x_{2}\) and \(x_{3}\).

However, even if the d-ICI plots are sometimes convenient choices to detect interaction effects, they are still only applicable if some properties hold. First, and that is the most crucial one, the interaction effects shall not be existent over the entire range of values of the considered feature. If so, one cannot identify a single spot within the range where the feature becomes initially more important. Hence, the d-ICI plot is expected to take a similar shape as if no interaction was existent.

Secondly, the d-ICI plot is only assumed to yield unambiguous results, if the interaction effect is strong enough. However, this must not necessarily be interpreted as a limitation. It merely shows that the second-order effect (interactions between two features) should not be decisively smaller than the first-order effect (main effect of the feature). If the second-order effect is too small, then it is open for discussion whether detecting this interaction effect is even decisive for interpreting the machine learning model.

10.2.2 Explain Interactions

So far it is understood that PI and ICI plots do probably not provide the clearest insights on whether interaction effects exist or not. Yet, calculating the Individual Conditional Importance allows implementing d-ICI plots which provide better insights.

Still, it is not clarified between which features the interaction takes place. Enabling this would have a major impact on the interpretability of machine learning models. The following will introduce a reliable method that resolves the issue of a lack of explanatory power for interaction effects. These results will then be complemented by the insights from the previous simulations to obtain a full picture of the heterogeneity in Feature Importance.

10.2.2.1 Drivers for Heterogeneity in Feature Importance

Chapter 10.1 highlighted the fact the taking the integral of the Individual Conditional Importance yields the aggregated Feature Importance for each observation. This property can be used to predict the global Feature Importance for each observation concerning the remaining covariates. If interaction exists, for instance a d-ICI plot suggests, then a significant relationship with at least one other feature should be yielded.

Here, it is still not clarified which learner is the most suited. Ideally, one would like to yield sparse results where noise is not fitted. This would prevent that other independent features are included in the model. This property would hold for any regularized regression model. Yet, it would be additionally convenient if the learner would additionally output the threshold were the interaction takes place.

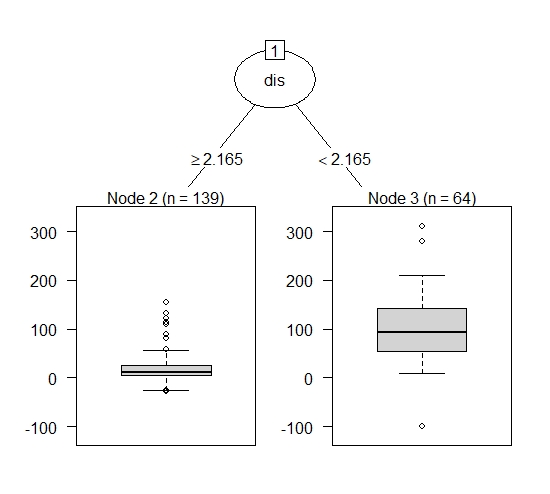

Both desired properties hold best when inducing a decision-tree with a tree-depth of 1. This configuration is quite robust against noise and the returned split point indicates the threshold for which the conditional Feature Importance should be calculated.

In conjunction with the results from the d-ICI plots, one obtains a complete understanding of the nature of the interaction effect. These insights can then be used to later calculate and visualize the conditional Feature Importance.

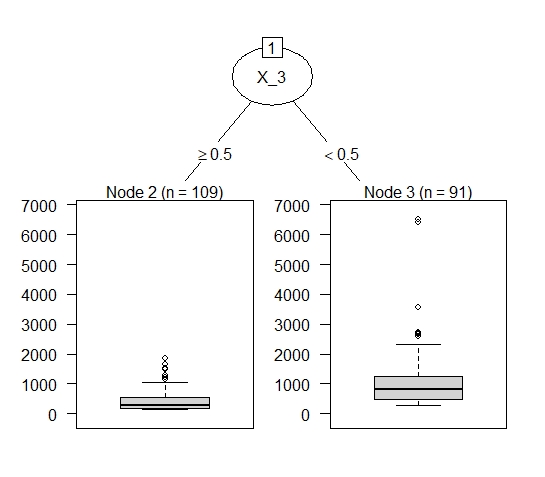

FIGURE 10.6: Simulated data (simulation 2): The decision stump reveals the interaction between \(x_{2}\) and \(x_{3}\). The split node gives information about where the interaction takes place.

Figure 10.6 visualizes the fitted decision tree. The results show that the Feature Importance of \(x_2\) is distinctly larger for values \(x_3 < 0.5\). As \(x_3\) is a binary variable, either taking the value \(0\) or \(1\), the results show that if and only if \(x_3 = 0\), interaction takes place. In the case of only one interaction effect between two covariates, the decision tree should yield stable results.

However, there might be several interaction effects taking place so that a decision-tree with tree-depth = 1 is insufficient. Still, this poses no actual problem as this method is in general not restricted to a specific learner. However, it would be still advantageous to preserve the Importance dimension. Hence, fitting a random forest model would be a suitable method. As the main idea should be clear, it will be refrained from going more into detail at this point.

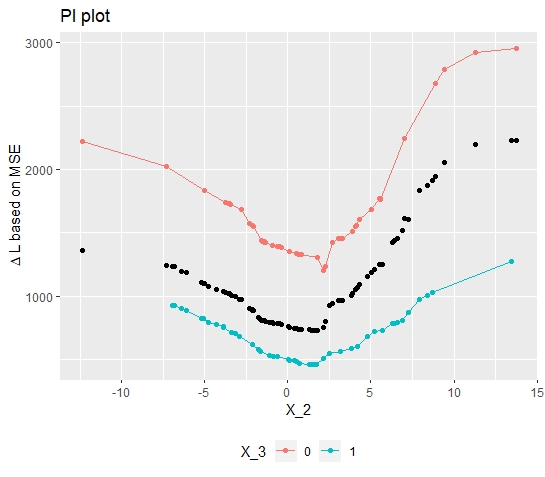

10.2.2.2 Conditional Importance plots

With results from above, one can now calculate the Conditional Individual Importance of the feature \(x_{2}\). Therefore, one just simply subdivides the Individual Conditional Importance into the respective groups. Meaning, calculate the PI for \(x_2\) for all observations where \(x_3 = 0\) and for all observations where \(x_3 = 1\). As the plot below shows, the PI for the observations where the interaction triggered is above average and hence, the observations with \(x_3 = 1\) are below average over the entire range.

FIGURE 10.7: Simulated data (simulation 2): The conditional Feature Importance plot visualizes the impact of the interaction effect on the heterogeneity in Feature Importance.

Finally plotting the conditional Feature Importance (see figure 10.7) indeed confirms the interaction effect and its impact on the Feature Importance. Besides, to get there, some additional interesting insights were obtained. Now, it is known between which features and where in the feature's range of values the interaction effect takes place. With the conditional Feature Importance, one can even quantify the difference and therefore measure the impact of the interaction effect. Concluding, that the initially observed heterogeneity is understood to its full extent.

Applying this on a real data application would then allow a more meaningful, contextual interpretation.

10.2.3 Stress Methods in a Non-Linear Relationship Setting

When trying to detect interaction effects, it was already seen that the inherent heterogeneity diffuses a clear and unambiguous pícture. However, with some background knowledge and the d-ICI plot, it was still possible to reliably detect the interaction effect.

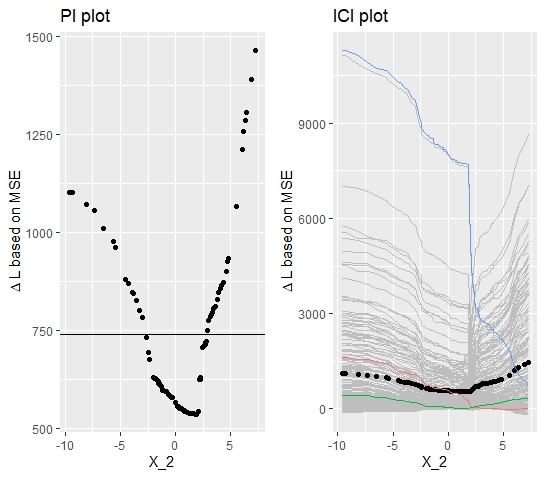

Still, it seems reasonable to further validate the robustness of these methods within a data-generative model with a non-linear relationship. By doing so, it can be assessed whether the methods still detect interaction effects even though additional inherent heterogeneity is introduced. For this purpose, consider the following data-generative model:

\[y \, = \, x_{1} \, - \, 5*sin(x_{2}) \, + x_{3} + 5x_{2} 1_{x_2 > 2, x_3 = 0} \, + \, \epsilon\] \[ x_{1} \, \overset{i.i.d}{\sim} \, \mathcal{N}(0,1), \, x_{2} \, \overset{i.i.d}{\sim} \mathcal{N}(1, 4) \quad \text{and} \quad x_{3} \overset{i.i.d}{\sim} B(1, 0.5),\, \epsilon \overset{i.i.d}{\sim} \mathcal{N}(0, 1)\] Inducing further heterogeneity by including the sinus function seems appropriate as heterogeneity is "uniformly" distributed over the feature's range of values. The function values are bounded by -1 and 1, so that the heterogeneity is controlled and does not exceed extreme values.

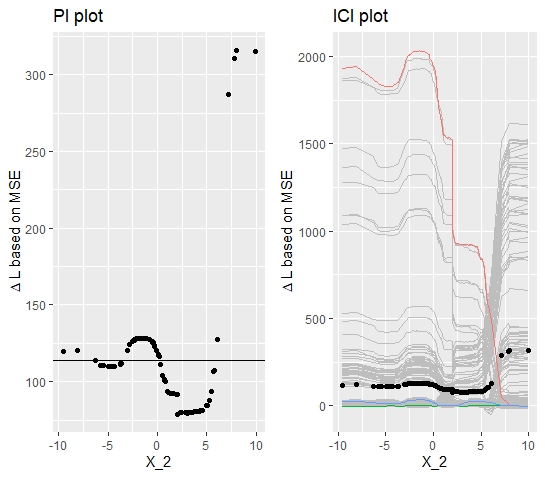

The plotted PI curve (see figure 10.8) indicates that the Feature Importance for \(x_2 > 5\) is above the average. Therefore, one might conclude that interaction which makes the feature more important takes place in this area. Disentangling the PI curve into its components yields a slightly different picture. It shows a steep descent of some curves at \(x_2 = 2\) and descending but also ascending curves at \(x_2 = 5\). Compared to the PI curves, the ICI curves allow a more detailed analysis of the heterogeneity. But, still it is not clarified whether interaction takes place at \(x_2 = 2\) or \(x_2 = 5\).

FIGURE 10.8: Simualated data (simulation 3): PI Plot and ICI Plot corresponding to the variable \(x_{2}\).

Hence, again the derivatives can be calculated and plotted as shown below (see figure 10.9).

FIGURE 10.9: Simulated data (simulation 3): d-ICI plot for variable \(x_{2}\). Calculating and plotting the derivatives of the ICI curves, reveals the spot where interaction takes place.

Despite the additional heterogeneity, the d-ICI plots still disentangles the diffused picture and uniquely identifies the interaction effects. It can be seen that the largest descent of the ICI curves takes place at \(x_2 = 2\). It further can be excluded that an interaction effect takes place at \(x_2 = 5\).

Of course, as it is known that the feature is sinusoidally distributed, the steep ascent of the ICI curves at \(x_2 = 5\) could have been explained. Having a closer look at the ICI plot it also reveals that the ascent is gradually increasing which does not hold for the ascent at \(x_2 = 2\). But still, it can be concluded that within this setting, the PI and ICI plots are not very telling.

10.3 Real Data Application: Boston Housing

Before this chapter will be closed by a brief discussion and an outlook for further research, the introduced methods will be now applied to real data. Doing so is important for final validation. Only if it can be shown that these methods apply to real data, they can be assessed as useful.

Pursuing this objective, we will calculate the PFI, PI, and ICI for the predictors of the Boston Housing dataset. In this setting, it is of interest to predict and explain the "median value of owner-occupied homes in USD1,000$. In a pre-analysis, the predictor variable lstat was chosen to conduct further analyses. Briefly, lstat measures the percentage share of lower status people in the population and from an economic perspective, it is assumed that there is a significant relationship between the predictor and the outcome variable.

As in the simulation analysis, the model is fitted equivalently by a random forest model and the calculation of the Importance metrics follows the same principle. The PI and ICI plots below, allow a first interpretation of the Feature Importance.

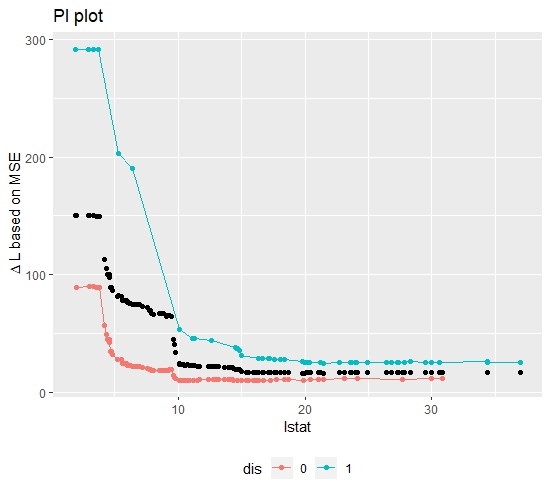

FIGURE 10.10: Boston Housing Data: PI and ICI plot for feature lstat. A clear and not uniformly distributed heterogeneity can be observed.

The PI plot reveals that on average the explanatory power of lstat becomes decisively larger for values below 10. Briefly, if the percentage of people with lower status is below 10%, the variable becomes a more important constitute of the predictive model. The ICI plot confirms this result and shows some additional heterogeneity in this area which, however, is hard to interpret within this visualization setting. Therefore, again, the derivatives of the ICI curves can be calculated to detect the heterogeneity more comprehensively.

FIGURE 10.11: Boston Housing Data: d-ICI plot for feature lstat. The plot provides further insights on the initially observed heterogeneity.

The d-ICI plot for the feature lstat additionally confirms the heterogeneity, visible in the ICI plot. Especially at \(lstat = 10\) a significant amplitude can be identified. This indicates that an interaction between lstat and another covariate takes place in the area around \(lstat <= 10\). Given this insight, it is now to be determined with which covariate the feature "lstat" interacts. Therefore, we again predict the integral of each observation's Feature Importance concerning the remaining covariates.

FIGURE 10.12: Boston Housing Data: Explain Interaction Effects for feature lstat. The decision stump identifies the interaction between feature lstat and feature dis. Again the split point indicates where the interaction effect takes place.

At this point, one has gained a rather complete picture of why there is heterogeneity in the Feature Importance of the feature "lstat". Therefore, one can now calculate the Conditional Feature Importance of lstat on the variable dis. Plotting the conditional curves (see figure 10.13), confirms the analysis of the previous results. Even though the results are not as distinguished as in the simulation settings, the interaction taking place is still clearly visible.

FIGURE 10.13: Boston Housing Data: Conditional Importance Plot. Importance of lstat conditional on values of dist

With the insights from the Real Data Application, one can conclude that the presented methods also work beyond the simulation setting and is, therefore, applicable for explaining heterogeneity in Feature Importance within a machine learning model. Yet, the heterogeneity problem was merely discussed in the context of interaction effects. It could be further discussed whether it might be even possible to identify the structural relationship between a feature and the response. Besides, it would be also interesting to investigate the PI and ICI plots in the context of correlated features.

10.4 Discussion

So far, it was stressed to what extent the PI and ICI plots are suitable tools to obtain a better interpretation of Feature Importance. To give this a final evaluation, the critical assessment is subdivided into two parts. The first part will summarize the capabilities of the visualization tools. The second part will explore the possibilities that have arisen through the data obtained.

The simulation chapter revealed that the ICI and PI plots are indeed able to visualize heterogeneity but both had its limitations when trying to detect interaction effects. First, even though no heterogeneity was expected, the PI and ICI plot still visualized heterogeneity. Even though it can be explained by the distributional properties of the feature, it can lead to confusion. One could circumvent the problem by weighting the local Feature Importance of each observation with its respective probability mass. This would "squash" the curves to a linear shape and merely only deviations from that could be interpreted as a proper heterogeneity. Second, when a non-linear relationship between response and the feature was induced, both methods did not yield robust and reliable results.

Further, it was argued that explaining interactions is as important as detecting interaction effects. Even if the ICI plots were able to detect interaction effects, it was not possible to explain them. Briefly, between which features and where did the interaction take place.

Concluding, the visualization of PI and ICI does indeed disentangle the global PFI metric but has its non-negligible limitations when interpreting the results properly.

Even though the PI and ICI plots themselves are very limited in its explanatory power, it was still possible with the underlying data to create a cookbook that enabled a full picture. The d-ICI plots represent a robust method for detecting interactions, even in a "messy" non-linear relationship. It turned out that calculating the approximated Feature Importance was easy to implement and therefore posed no major challenge. Further, as the Partial Importance was already calculated it was not difficult to implement a method which explains between which features interaction takes place. Solving that, one can calculate the conditional Feature Importance and therefore, finally visualize the actual effect of the interaction on Feature Importance.

Concluding, even though the PI and ICI plots have its limitations, the underlying data represents an exhaustive foundation for yielding a complete picture of the heterogeneity. Therefore, it can be stated that disentangling the global PFI into its components is a valid and insightful approach to better understand what exactly drives the predictions of a machine learning model.

References

Casalicchio, Giuseppe, Christoph Molnar, and Bernd Bischl. 2018. “Visualizing the Feature Importance for Black Box Models.” In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, 655–70. Springer.

Goldstein, Alex, Adam Kapelner, Justin Bleich, and Emil Pitkin. 2013. “Peeking Inside the Black Box: Visualizing Statistical Learning with Plots of Individual Conditional Expectation.” Journal of Computational and Graphical Statistics 24 (September). doi:10.1080/10618600.2014.907095.