Chapter 3 Multimodal architectures

Authors: Luyang Chu, Karol Urbanczyk, Giacomo Loss, Max Schneider, Steffen Jauch-Walser

Supervisor: Christian Heumann

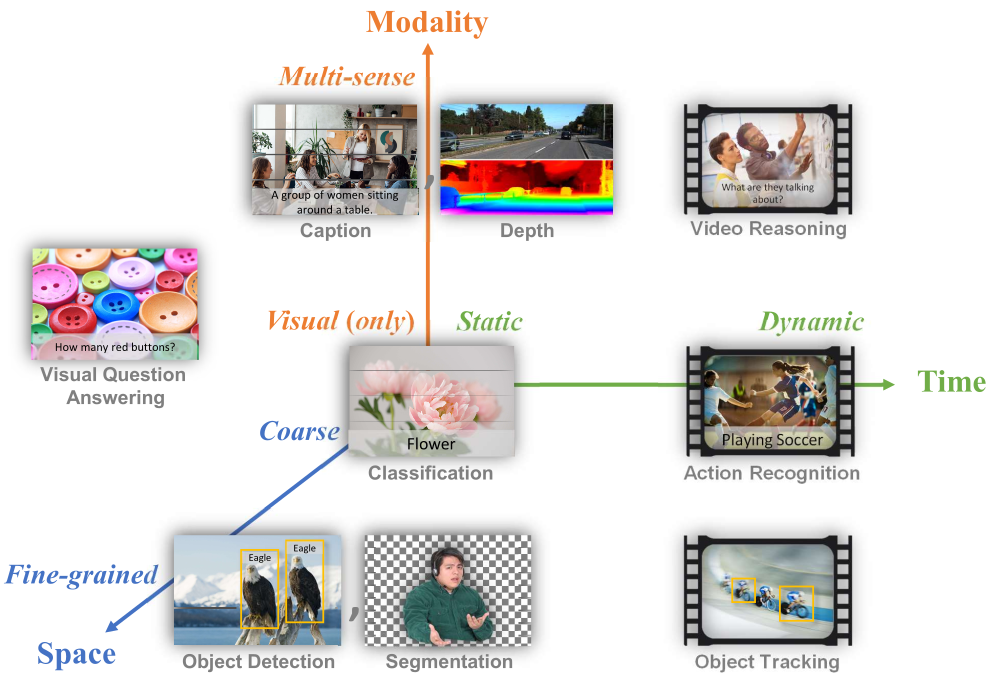

Multimodal learning refers to the process of learning representations from different types of input modalities, such as image data, text or speech. Due to methodological breakthroughs in the fields of Natural Language Processing (NLP) as well as Computer Vision (CV) in recent years, multimodal models have gained increasing attention as they are able to strengthen predictions and better emulate the way humans learn. This chapter focuses on discussing images and text as input data. The remainder of the chapter is structured as follows:

The first part, “Image2Text”, discusses how transformer-based architectures improve meaningful captioning for complex images using the large-scale, richly annotated dataset COCO (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b; Cornia et al. 2020). While looking at a photograph and describing it, or parsing a complex scene and describing its context, is not a difficult task for humans, it is much more challenging for computers. We start by focusing on images as input modalities. In 2014, Microsoft COCO was developed with the primary goal of advancing the state of the art (SOTA) in object recognition by addressing the broader question of scene understanding (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b). “COCO” is the acronym for Common Objects in Context. It addresses three core problems in scene understanding: object detection (non-iconic views), segmentation, and captioning. While transformer-based architectures are already widely used for NLP tasks such as machine translation and language understanding, their potential for applications in the multimodal context has not been fully explored yet. With the help of the MS COCO dataset, the transformer-based architecture “Meshed-Memory Transformer for Image Captioning” (\(M^2\)) will be introduced, which improved both the image encoding and the language generation steps (Cornia et al. 2020). The performance of \(M^2\) and other fully-attentive models will be compared on the MS COCO dataset.

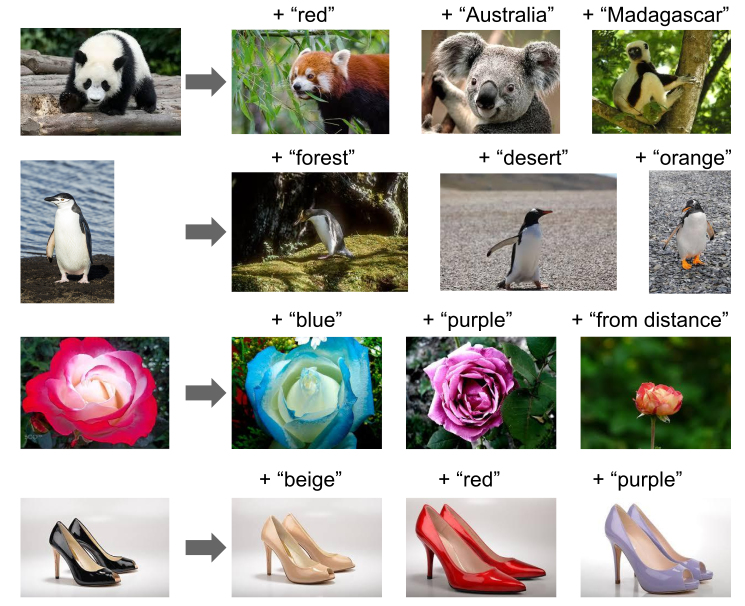

Next, in Text2Image, the idea of incorporating textual input in order to generate visual representations is described. Current advancements in this field have been made possible largely due to recent breakthroughs in NLP, which first allowed for learning contextual representations of text. Transformer-like architectures are being used to encode the input into embedding vectors, which are later helpful in guiding the process of image generation. The chapter discusses the development of the field in chronological order, looking into details of the most recent milestones. Concepts such as generative adversarial networks (GAN), variational auto-encoders (VAE), VAE with vector quantization (VQ-VAE), diffusion, and autoregressive models are covered to provide the reader with a better understanding of the roots of the current research and where it might be heading. Some of the most outstanding outputs generated by state-of-the-art works are also presented in the chapter.

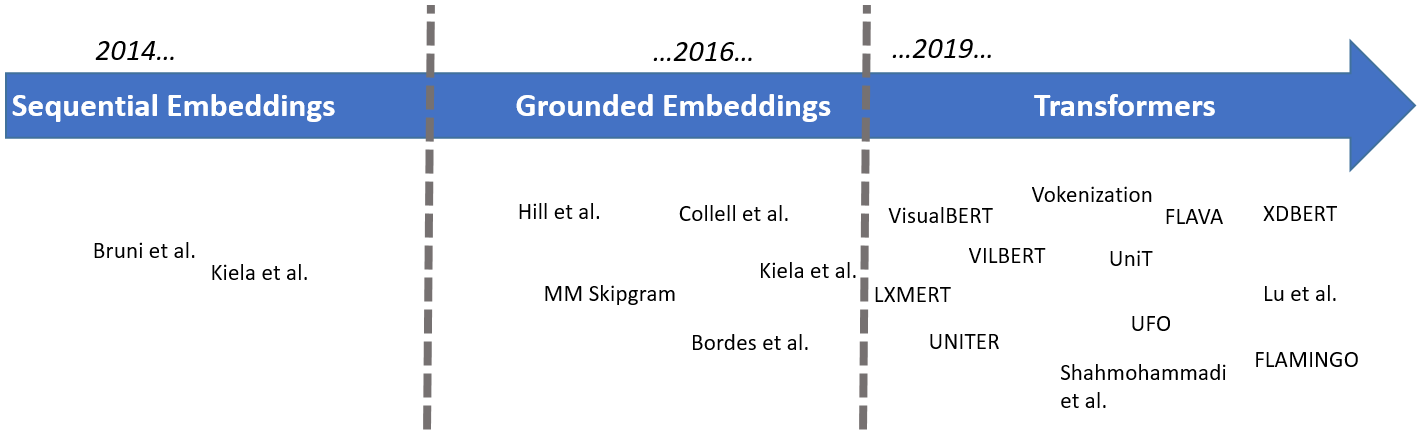

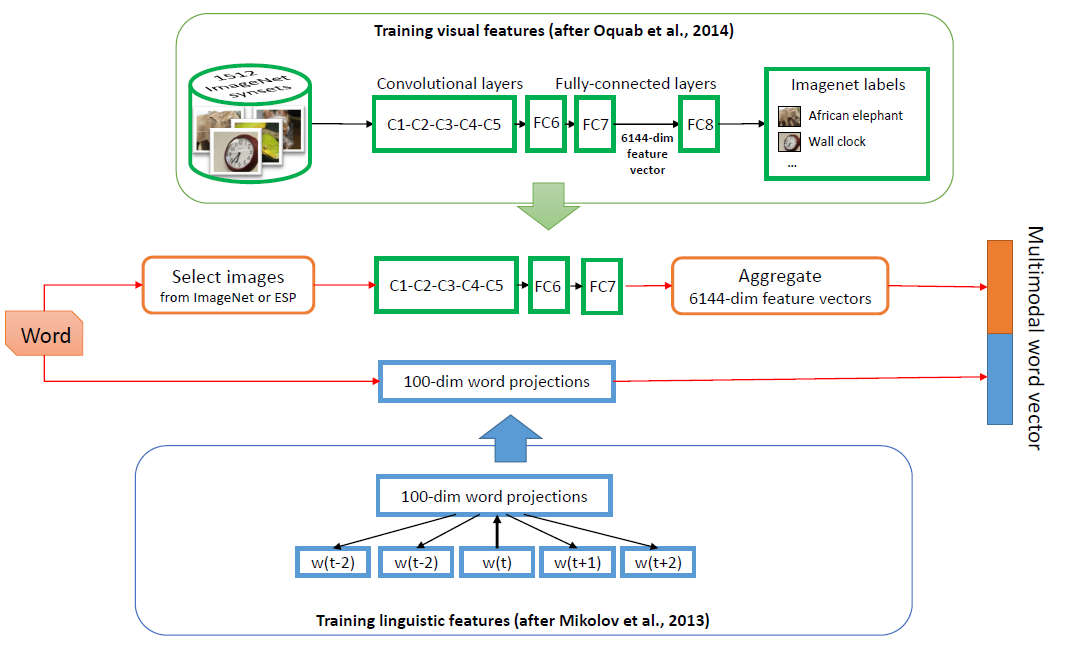

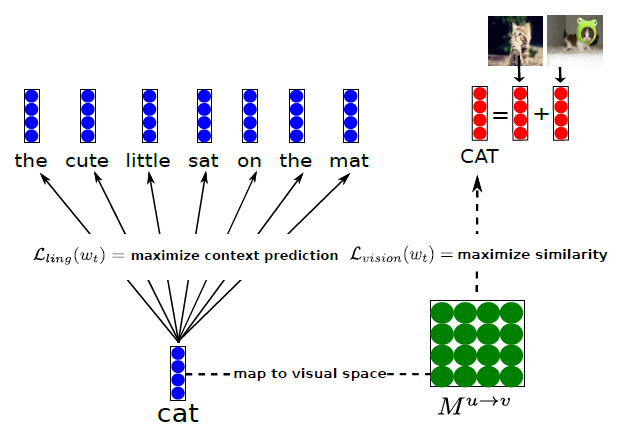

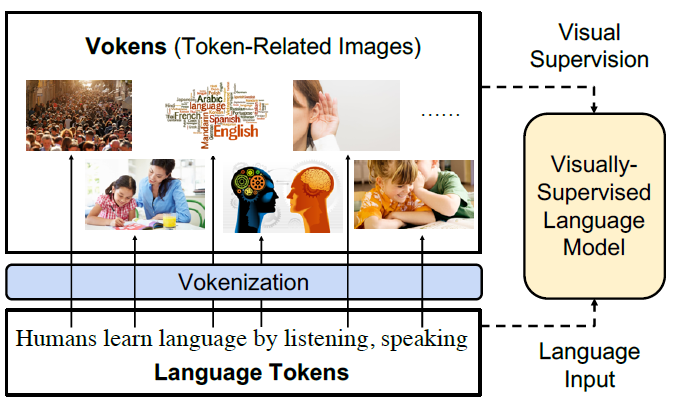

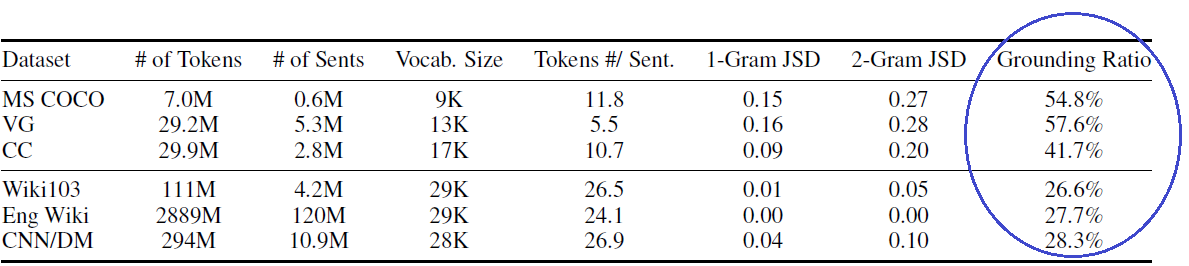

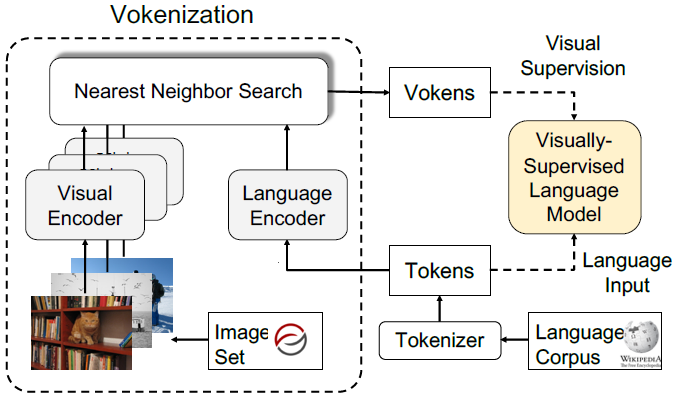

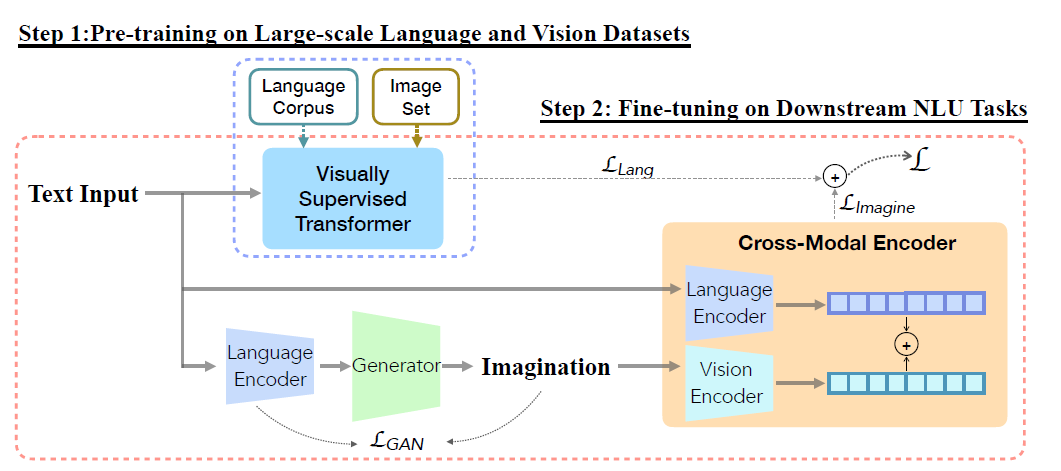

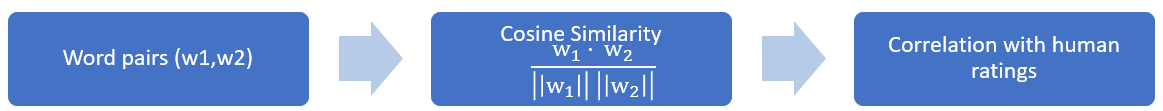

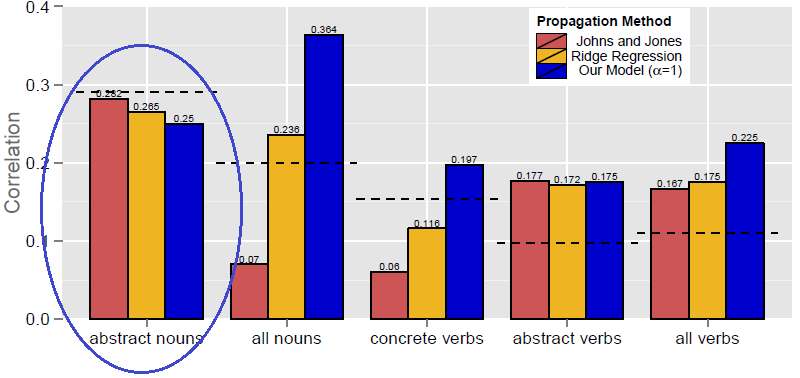

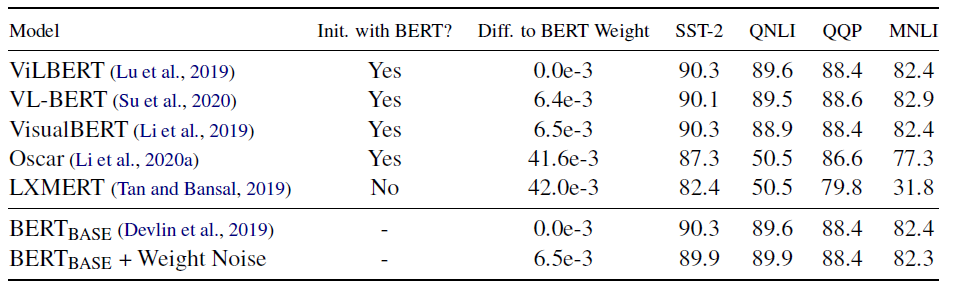

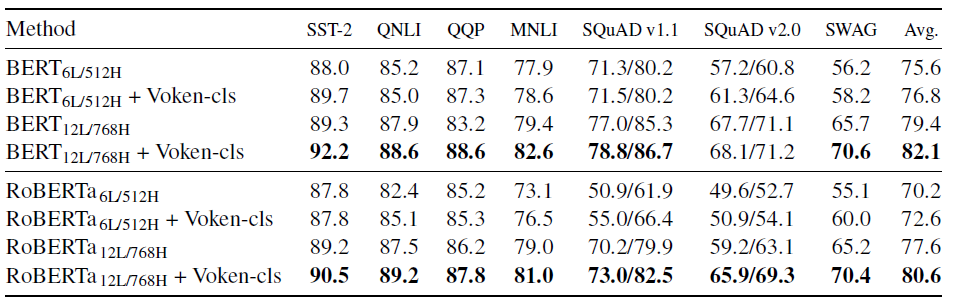

The third part, “Images supporting Language Models”, deals with the integration of visual elements into purely textual language models. Distributional semantic models such as Word2Vec and BERT assume that the meaning of a given word or sentence can be understood by looking at how (in which context) and when the word or the sentence appears in the text corpus, namely from its “distribution” within the text. This assumption has historically been questioned, because words and sentences must be grounded in other perceptual dimensions in order for their meaning to be understood (see for example the “symbol grounding problem”; Harnad 1990). For these reasons, a broad range of models has been developed with the aim of improving pure language models by adding other perceptual dimensions, such as the visual one. This subchapter focuses in particular on the integration of visual elements (here: images) to support pure language models for various tasks at the word/token level as well as at the sentence level. The starting point is always a language model, into which visual representations (often extracted with the help of large pools of images from data sets like MS COCO, see chapter “Img2Text” for further references) are to be “integrated”. But how? A wide range of solutions has been proposed: on one side of the spectrum, textual and visual elements are learned separately and then “combined” afterwards, whereas on the other side, the learning of textual and visual features takes place jointly.

FIGURE 3.1: Left: Silberer and Lapata (2014) stack autoencoders to learn higher-level embeddings from textual and visual modalities, encoded as vectors of attributes. Right: Bordes et al. (2020) fuse textual and visual information in an intermediate space denoted as “grounded space”; the “grounding objective function” is not applied directly on sentence embeddings but trained on this intermediate space, on which sentence embeddings are projected.

For example, Silberer and Lapata (2014) implement a model where a one-to-one correspondence between textual and visual space is assumed. Textual and visual representations are passed to two separate unimodal encoders, and both outputs are then fed to a bimodal autoencoder. On the other side, Bordes et al. (2020) propose a “text objective function” whose parameters are shared with an additional “grounded objective function”. The training of the latter takes place in what the authors call a “grounded space”, which makes it possible to avoid the one-to-one correspondence between textual and visual space. These are just introductory examples, and between these two approaches there are many shades of gray (probably even more than fifty). In many instances these models perform better than pure language models, but they still struggle in some respects, for example when dealing with abstract words and sentences.

Afterwards, in the subchapter on “Text supporting Image Models”, approaches where natural language is used as additional supervision for CV models are described. Intuitively these models should be more powerful compared to models supervised solely by manually labeled data, simply because there is much more signal available in the training data.

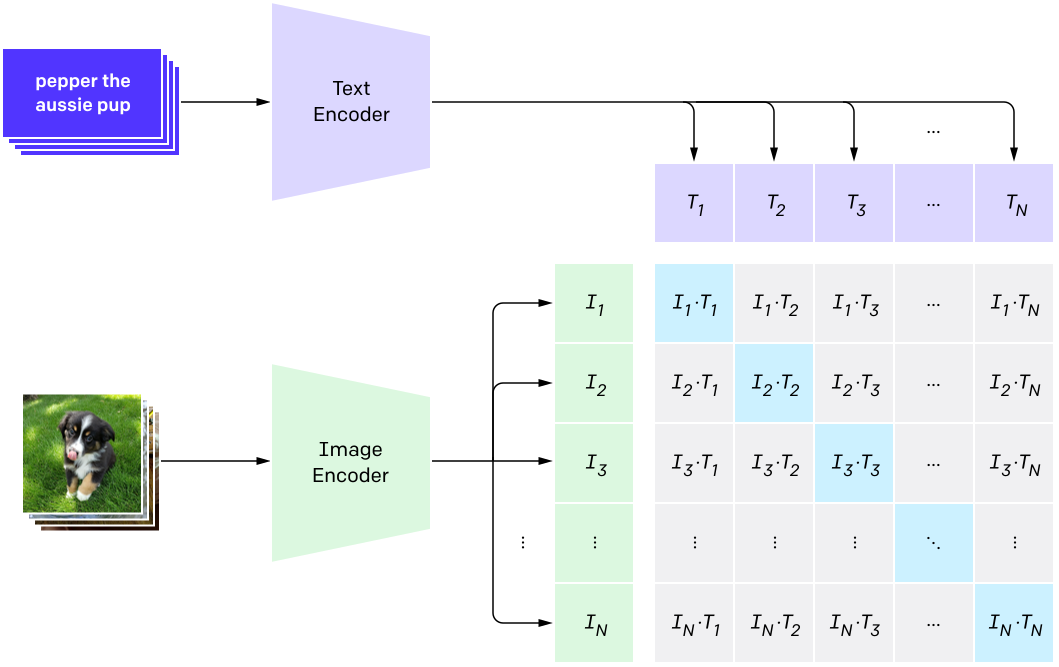

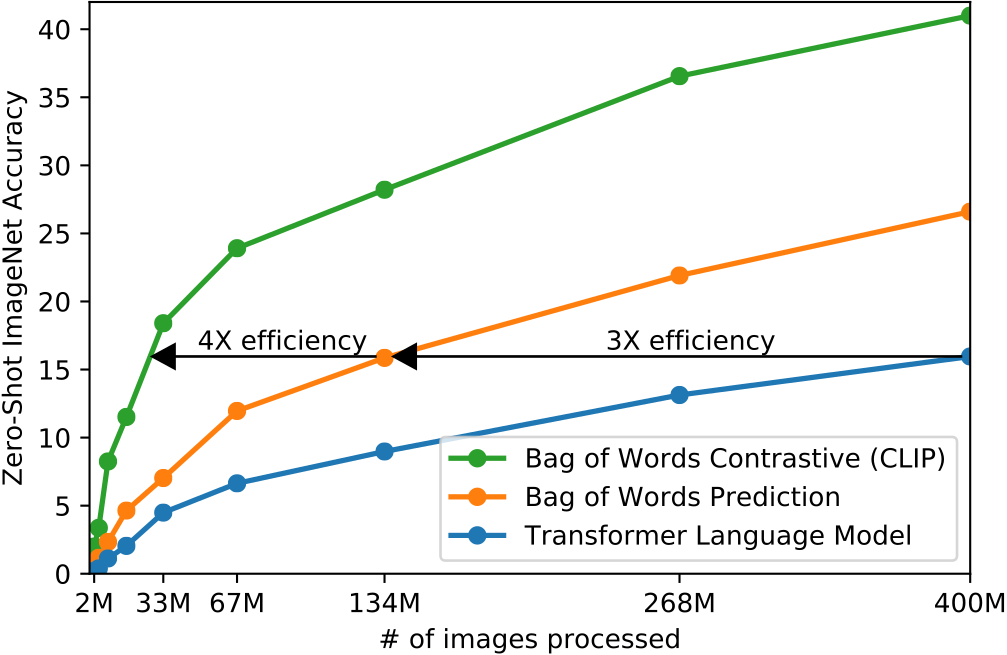

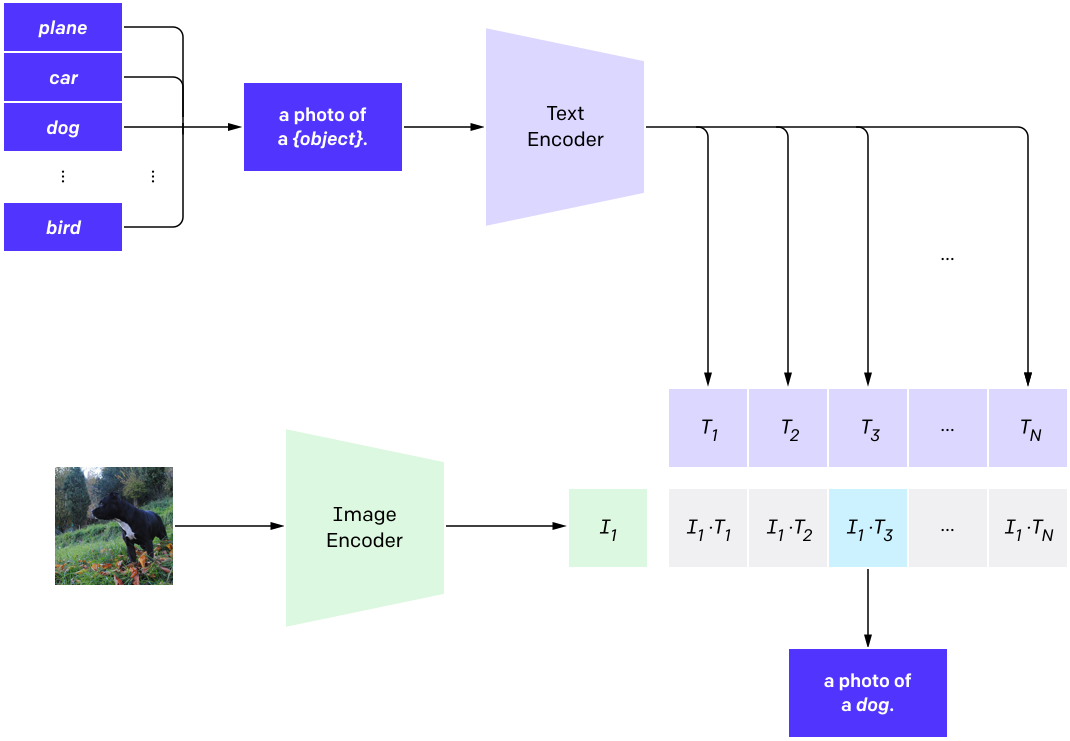

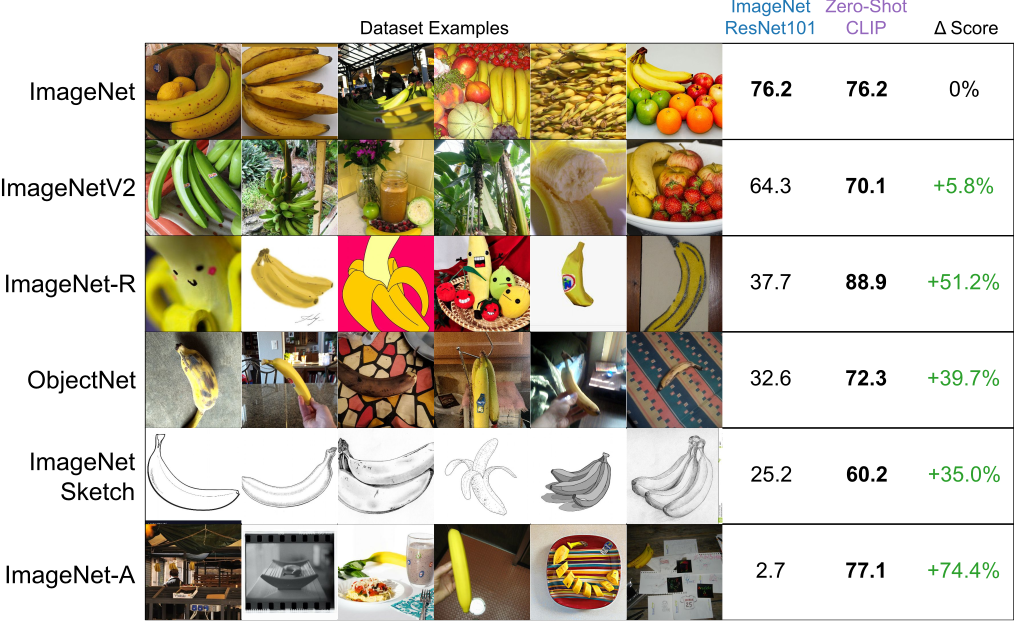

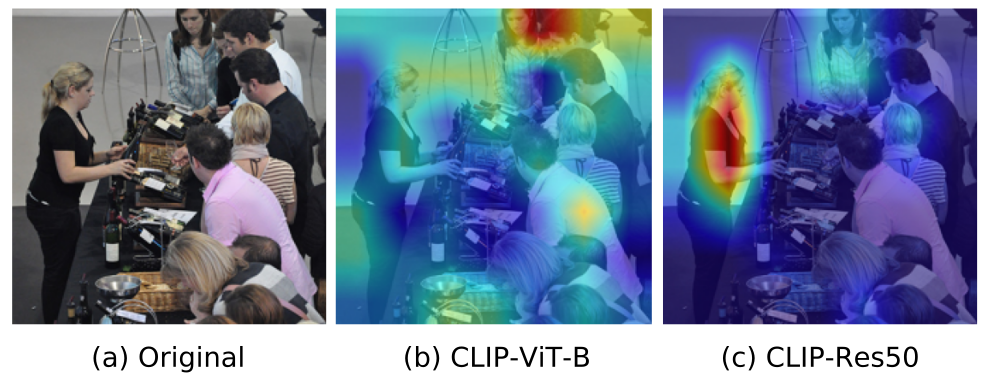

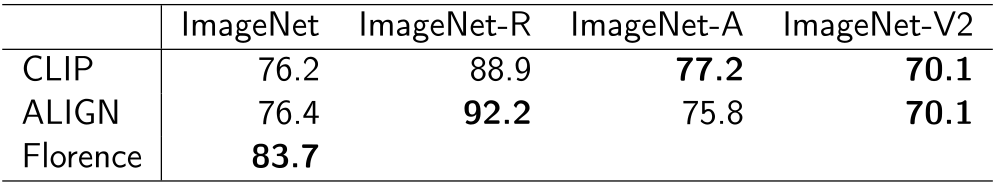

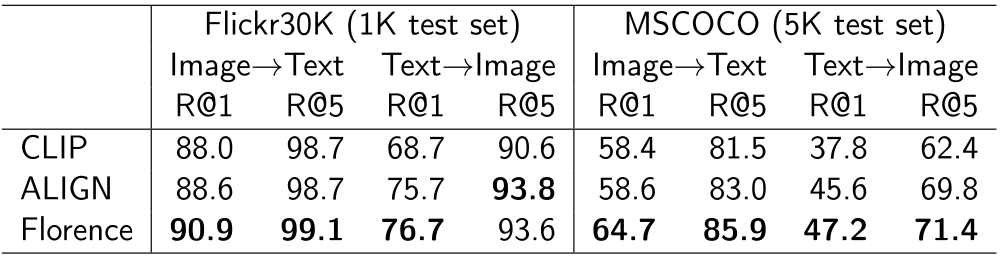

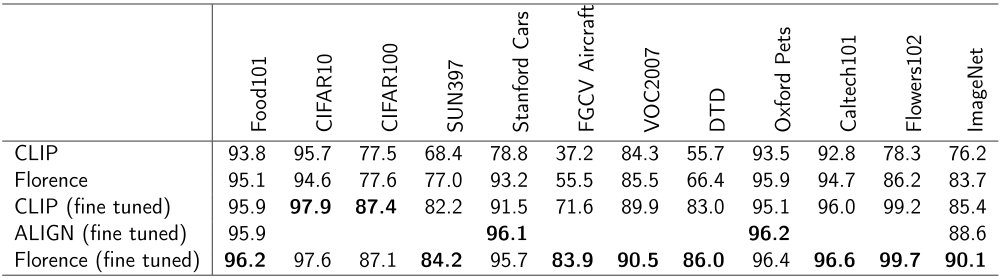

One prominent example is the CLIP model (Radford, Kim, Hallacy, Ramesh, Goh, Agarwal, Sastry, Askell, Mishkin, Clark, and others 2021) with its new dataset WIT (WebImageText), comprising 400 million text-image pairs scraped from the internet. As in “Text2Image”, recent success stories in NLP have inspired most of the new approaches in this field, most importantly pre-training methods that learn directly from raw text (e.g. GPT-n, Generative Pre-trained Transformer; Brown et al. 2020). The acronym CLIP stands for _C_ontrastive _L_anguage-_I_mage _P_re-training. A transformer-like architecture is used for jointly pre-training a text encoder and an image encoder. For this, a contrastive objective is employed: correctly predicting which natural language text pertains to which image within a batch. Training this way turned out to be more efficient than generating captions for images. This leads to a flexible model, which at test time uses the learned text encoder as a “zero-shot” classifier on embeddings of the target dataset’s classes. The model can, for example, perform optical character recognition, geo-location detection and action recognition. Performance-wise, CLIP can be competitive with task-specific supervised models without ever having seen an instance of the specific dataset. This suggests an important step towards closing the “robustness gap”, where machine learning models fail to meet, on new datasets, the expectations set by their previous performance, especially on ImageNet test sets.
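The contrastive objective and the zero-shot use of the text encoder described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than CLIP's actual implementation: the embeddings are random stand-ins for encoder outputs, the function names are hypothetical, and the temperature value of 0.07 is an assumption made for the example.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    # Scale every row to unit length so dot products become cosine similarities.
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)

def log_softmax(logits, axis):
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric cross-entropy over the batch similarity matrix.

    Row i of image_emb and row i of text_emb are assumed to form a matching pair.
    """
    logits = l2_normalize(image_emb) @ l2_normalize(text_emb).T / temperature
    idx = np.arange(logits.shape[0])  # matching pairs sit on the diagonal
    loss_image_to_text = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_text_to_image = -log_softmax(logits, axis=0)[idx, idx].mean()
    return 0.5 * (loss_image_to_text + loss_text_to_image)

def zero_shot_classify(image_emb, class_text_emb):
    # Pick, for every image, the class whose text embedding is most similar.
    sims = l2_normalize(image_emb) @ l2_normalize(class_text_emb).T
    return sims.argmax(axis=1)

rng = np.random.default_rng(0)
text_emb = rng.normal(size=(4, 8))   # stand-in for text encoder outputs
image_emb = text_emb.copy()          # perfectly aligned toy "image" embeddings

aligned_loss = contrastive_loss(image_emb, text_emb)
shuffled_loss = contrastive_loss(image_emb[::-1], text_emb)  # wrong pairing
predictions = zero_shot_classify(image_emb, text_emb)
```

In this toy setup the loss is lower for the correctly paired batch than for the shuffled one, which is exactly the signal the contrastive objective uses to train both encoders jointly.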

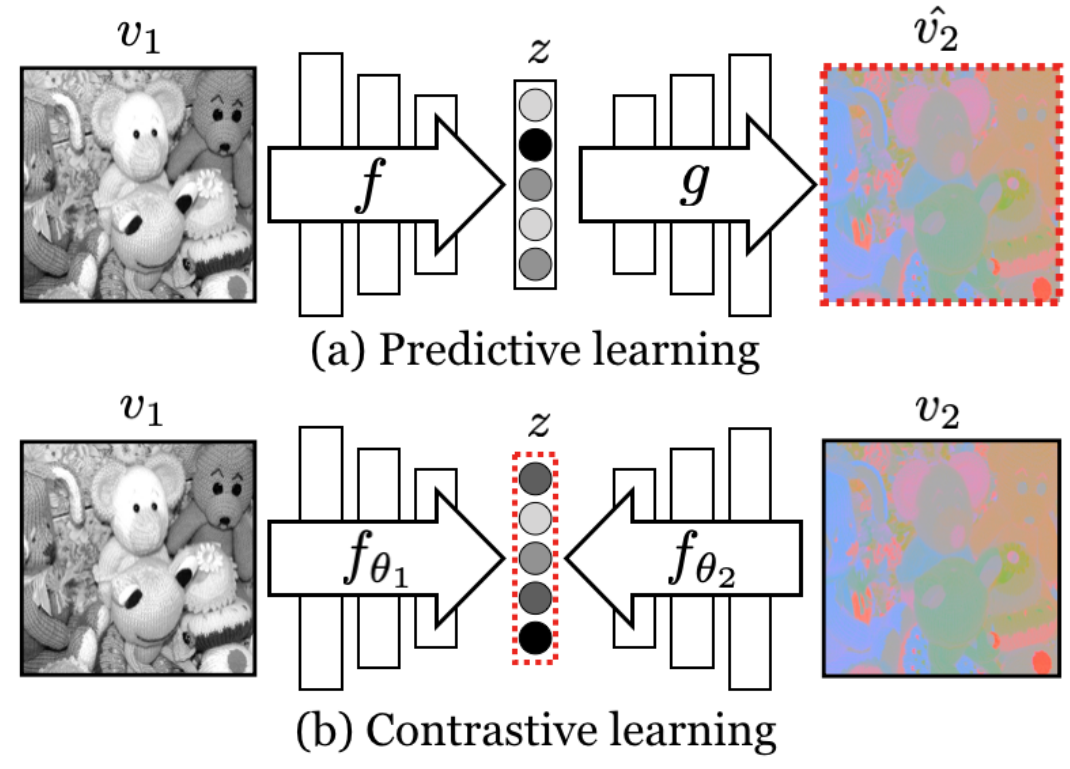

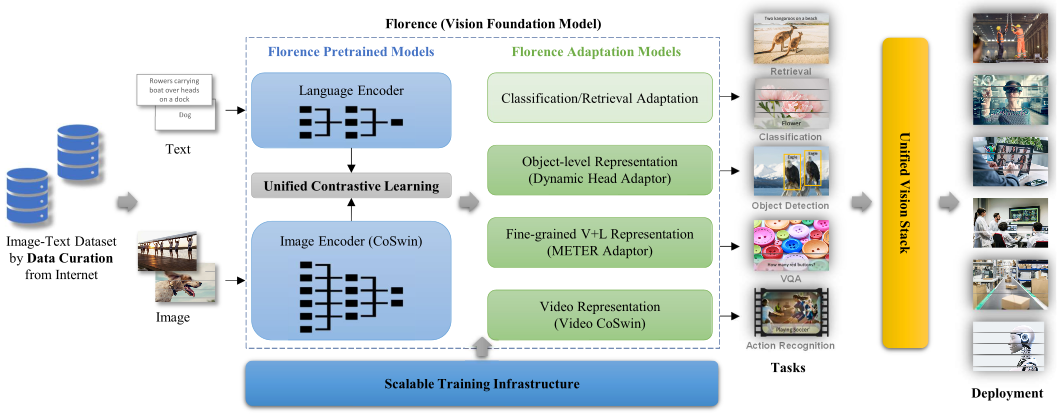

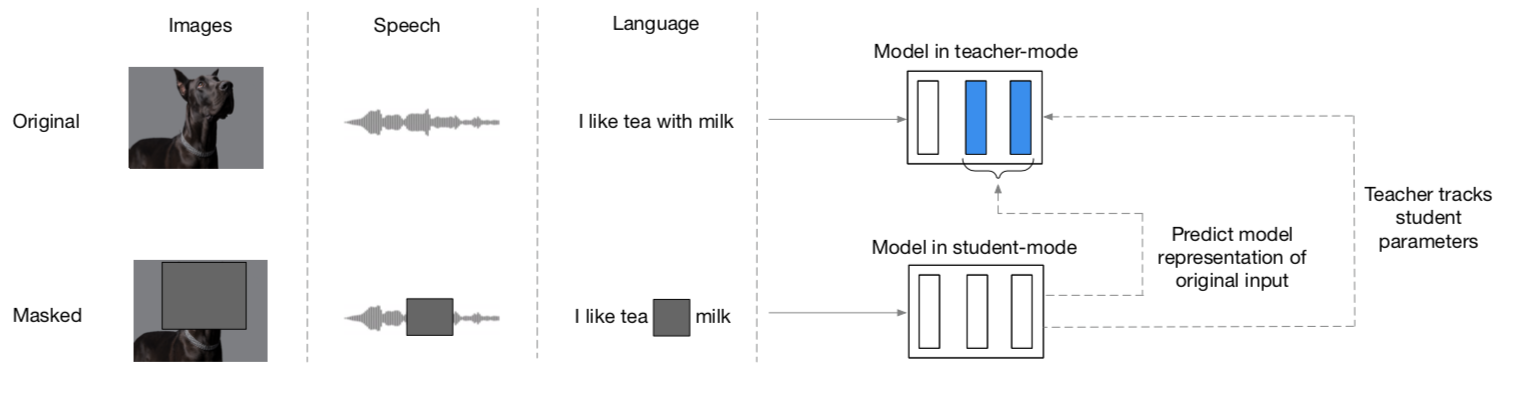

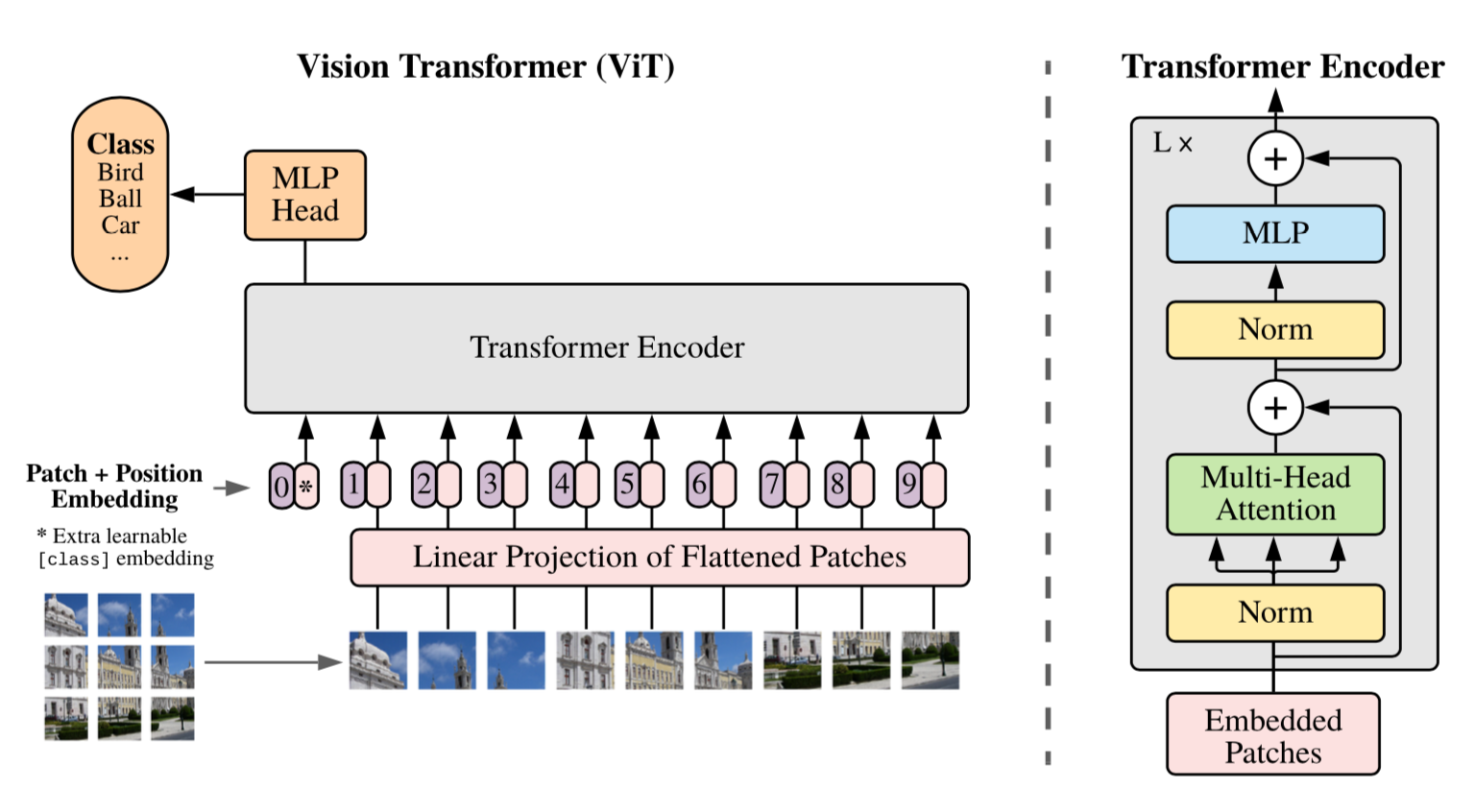

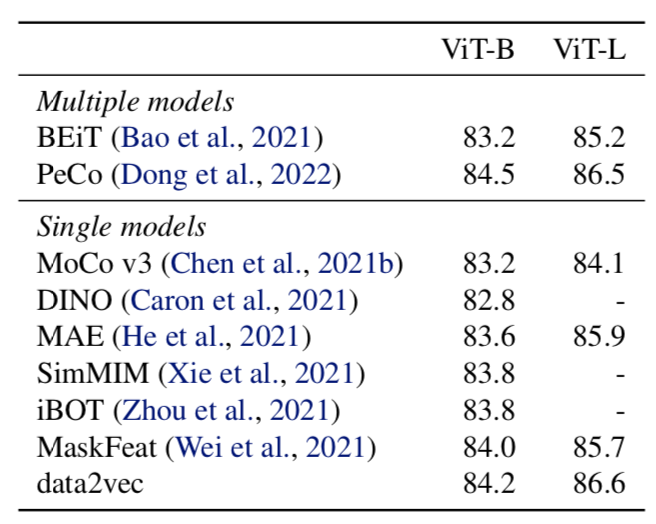

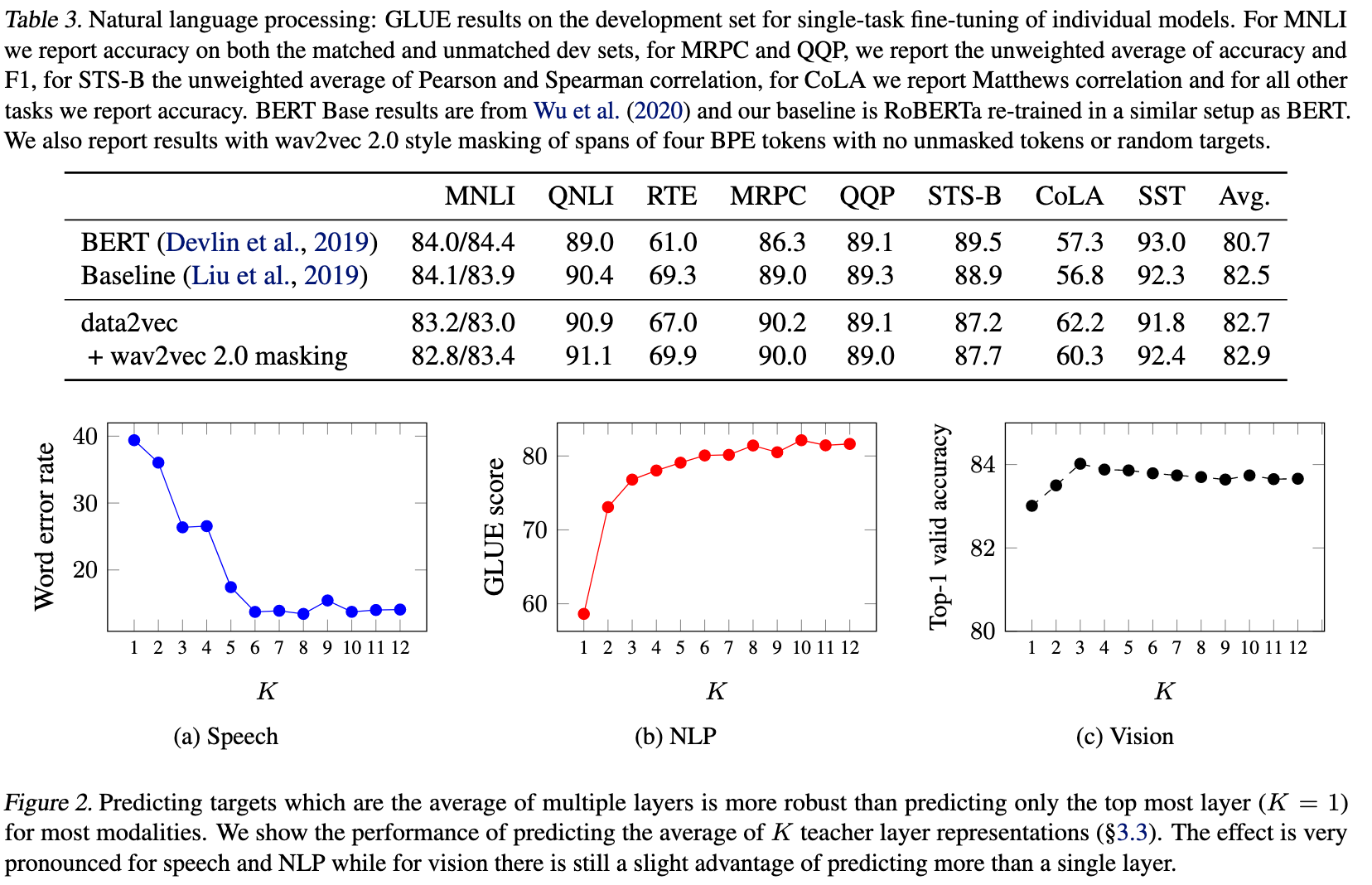

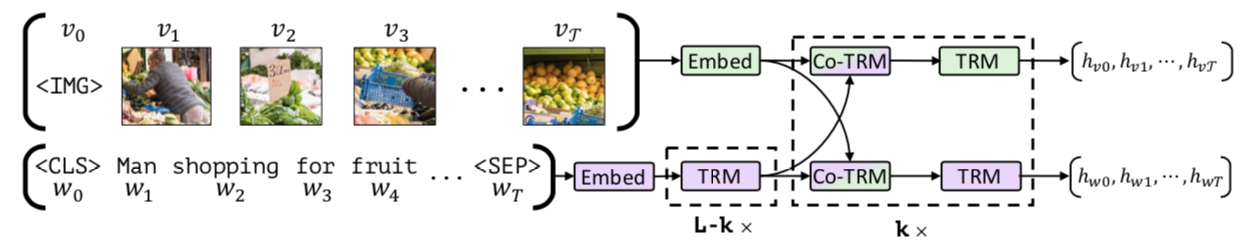

Finally, the subchapter “Models for both modalities” discusses how text and image inputs can be incorporated into a single unifying framework in order to get closer to a general self-supervised learning framework. Two key advantages make such an architecture particularly interesting. First, similar to the models mentioned in previous parts, self-supervised models do not rely on human labelling and therefore do not suffer from the same capacity constraints as regular supervised learning models. Second, while there have been notable advances in dealing with different modalities using single-modality models, it is often unclear to what extent a model structure generalizes across modalities. Rather than potentially learning modality-specific biases, a general multipurpose framework can help increase robustness while also simplifying the learner portfolio. In order to investigate different challenges and trends in vision-and-language modelling, this section takes a closer look at three models, namely data2vec (Baevski et al. 2022), ViLBERT (J. Lu et al. 2019b) and Flamingo (Alayrac et al. 2022).

Data2vec is a new multimodal self-supervised learning model which uses a single framework to process either speech, natural language or visual information. This is in contrast to earlier models, which used different algorithms for different modalities. The core idea of data2vec, developed by Meta AI, is to predict latent representations of the full input data based on a masked view of the input in a self-distillation setup using a standard transformer architecture (Baevski et al. 2022). As a result, the main improvement lies in the framework itself, not in the underlying architectures. For example, the transformer architecture being used follows Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser, et al. (2017b). Through their parallelizability, transformers have several advantages over RNNs/CNNs, particularly when large amounts of data are being used, making them the de facto standard approach in vision-language modelling (Dosovitskiy, Beyer, Kolesnikov, Weissenborn, Zhai, Unterthiner, Dehghani, Minderer, Heigold, Gelly, and others 2020).

ViLBERT is an earlier model that, in contrast to data2vec, can handle cross-modality tasks. Finally, Flamingo is a modern few-shot learning model which features 80B parameters, significantly more than the other two models. Through a large language model incorporated in its architecture, it has strong text generation capabilities for tackling open-ended tasks. It also raises the question of how to efficiently train increasingly large models, and it shows the effectiveness of using perceiver architectures (Jaegle, Gimeno, Brock, Vinyals, Zisserman, and Carreira 2021a) to encode inputs from different modalities as well as how to leverage communication between pretrained and frozen models.

3.1 Image2Text

Author: Luyang Chu

Supervisor: Christian Heumann

Image captioning refers to the task of producing descriptive text for given images. It has stimulated interest in both natural language processing and computer vision research in recent years. Image captioning is a key task that requires a semantic comprehension of images as well as the capacity to generate accurate and precise description sentences.

3.1.1 Microsoft COCO: Common Objects in Context

The understanding of visual scenes plays an important role in computer vision (CV) research. It includes many tasks, such as image classification, object detection, object localization and semantic scene labeling. Throughout the history of CV research, high-quality image data sets have played a critical role. They are not only essential for training and evaluating new algorithms, but also lead research in new and challenging directions (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b). In the early years, researchers developed data sets (Deng et al. 2009; Xiao et al. 2010; Everingham et al. 2010) which enabled the direct comparison of hundreds of image recognition algorithms and led to early progress in object recognition. In the more recent past, ImageNet (Deng et al. 2009), which contains millions of images, has enabled breakthroughs in both object classification and detection research using new deep learning algorithms.

With the goal of advancing the state of the art in object recognition, especially scene understanding, a new large-scale data set called “Microsoft Common Objects in Context” (MS COCO) was published in 2014. MS COCO focuses on three core problems in scene understanding: detecting non-iconic views, detecting the semantic relationships between objects and determining the precise localization of objects (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b).

The MS COCO data set contains 91 common object categories with a total of 328,000 images and 2,500,000 instance labels. The authors state that all of these object categories could be recognized by a 4-year-old child. 82 of the categories include more than 5,000 labeled instances. These labeled instances may support the detection of relationships between objects in MS COCO. In order to provide precise localization of object instances, only “thing” categories such as car, table, or dog were included. Objects which do not have clear boundaries, such as sky, sea, or grass, were not included. In current object recognition research, algorithms perform well on images with iconic views, i.e. images containing a single object category of interest in the center of the image. To accomplish the goal of detecting the contextual relationships between objects, more complex images with multiple objects as well as natural images from daily life were also gathered for the data set.

MS COCO is not the only large data set: in recent years, many large databases such as ImageNet, PASCAL VOC (Everingham et al. 2010) and SUN (Xiao et al. 2010) have been developed in the field of computer vision. Each of these data sets has its own specific focus.

Datasets for object recognition can be roughly split into three groups: object classification, object detection and semantic scene labeling.

Object classification requires binary labels indicating whether objects are present in an image. ImageNet (Deng et al. 2009) clearly stands out from other data sets in terms of size: it contains 22k categories with 500-1000 images each. In total, ImageNet thus comprises over 14 million labeled images with both entry-level and fine-grained categories, organized using the WordNet hierarchy, and it has enabled significant advances in image classification.

Detecting an object involves two steps: first, establishing that an object from a specified class is present, and second, localizing the object in the image with a bounding box. This can be used to solve tasks like face detection or pedestrian detection. The PASCAL VOC (Everingham et al. 2010) data set can be used to help with the detection of basic object categories. With 20 object categories and over 11,000 images, PASCAL VOC contains over 27,000 object instances labeled with bounding boxes, of which almost 7,000 come with detailed segmentations (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b).

Labeling semantic objects in a scene requires that each pixel of an image is labeled as belonging to a category, such as sky, chair, etc., but individual instances of objects do not need to be segmented (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b). Objects like sky, grass or street can therefore also be defined and labeled in this way. The SUN data set (Xiao et al. 2010) combines many of the properties of both object detection and semantic scene labeling data sets for the task of scene understanding. It contains 908 scene categories from the WordNet dictionary (Fellbaum 2000) with segmented objects. Its 3,819 object categories span those common to object detection data sets (person, chair) and to semantic scene labeling (wall, sky, floor) (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b).

3.1.1.1 Image Collection and Annotation for MS COCO

MS COCO is a large-scale, richly annotated data set. The process of building it consisted of two phases: data collection and image annotation.

In order to select representative object categories for images in MS COCO, researchers collected several categories from different existing data sets like PASCAL VOC (Everingham et al. 2010) and other sources. All these object categories could, according to the authors, be recognized by children between 4 and 8 years of age. The quality of the object categories was ensured by the co-authors, who rated the categories on a scale from 1 to 5 depending on their common occurrence, practical applicability and diversity from other categories (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b). The final number of categories on their list was 91. All the categories from PASCAL VOC are included in MS COCO.

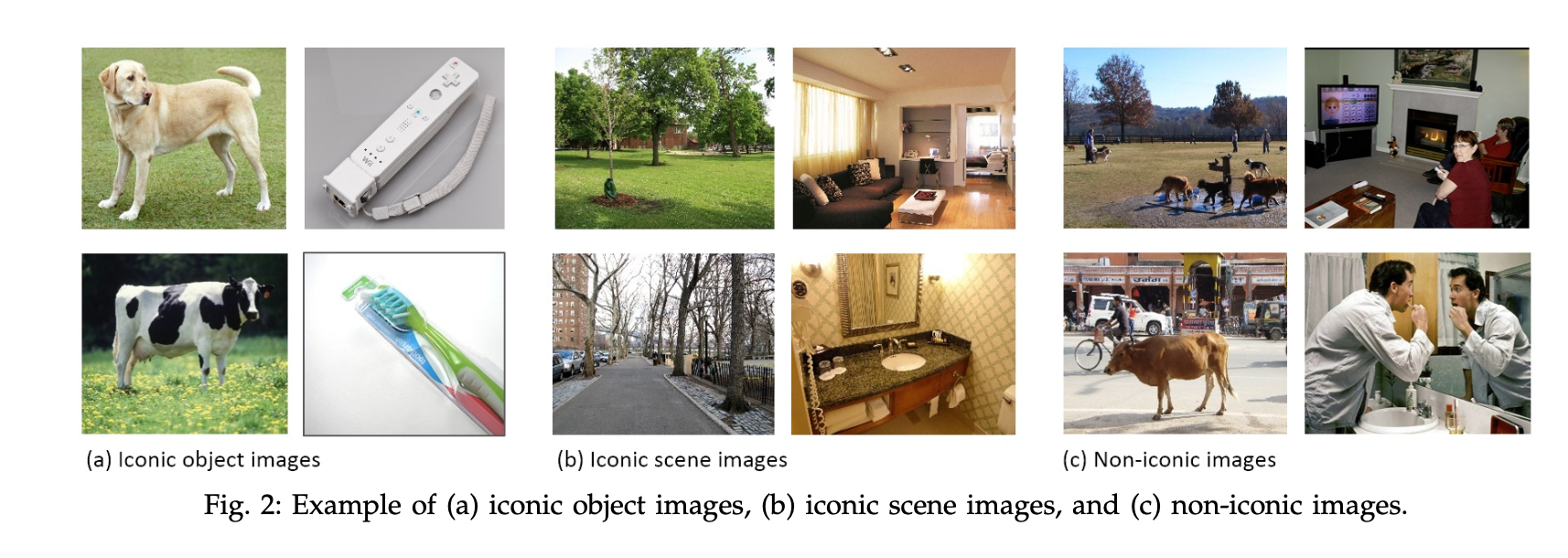

With the help of representative object categories, the authors of MS COCO wanted to collect a data set in which a majority of the included images are non-iconic. All included images can be roughly divided into three types according to Fig. 3.2: iconic-object images, iconic-scene images and non-iconic images (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b).

FIGURE 3.2: Type of images in the data set (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b).

Images were collected using two strategies. First, images were collected from Flickr, a platform for photos uploaded by amateur photographers, together with their keywords. Second, researchers searched for pairwise combinations of object categories like “dog + car” to gather more non-iconic images and images with rich contextual relationships (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b).
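The second strategy, searching for pairwise combinations of categories, is easy to picture with a small sketch. The category subset and the query format below are illustrative assumptions, not the authors' actual search tooling.

```python
from itertools import combinations

# Illustrative subset of object categories (the full list has 91 entries).
categories = ["dog", "car", "bicycle", "person"]

def pairwise_queries(names):
    # Every unordered pair of distinct categories becomes one search query.
    return [f"{a} + {b}" for a, b in combinations(names, 2)]

queries = pairwise_queries(categories)
for query in queries:
    print(query)
```

With the full list of 91 categories, this scheme yields 91 · 90 / 2 = 4,095 category pairs.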

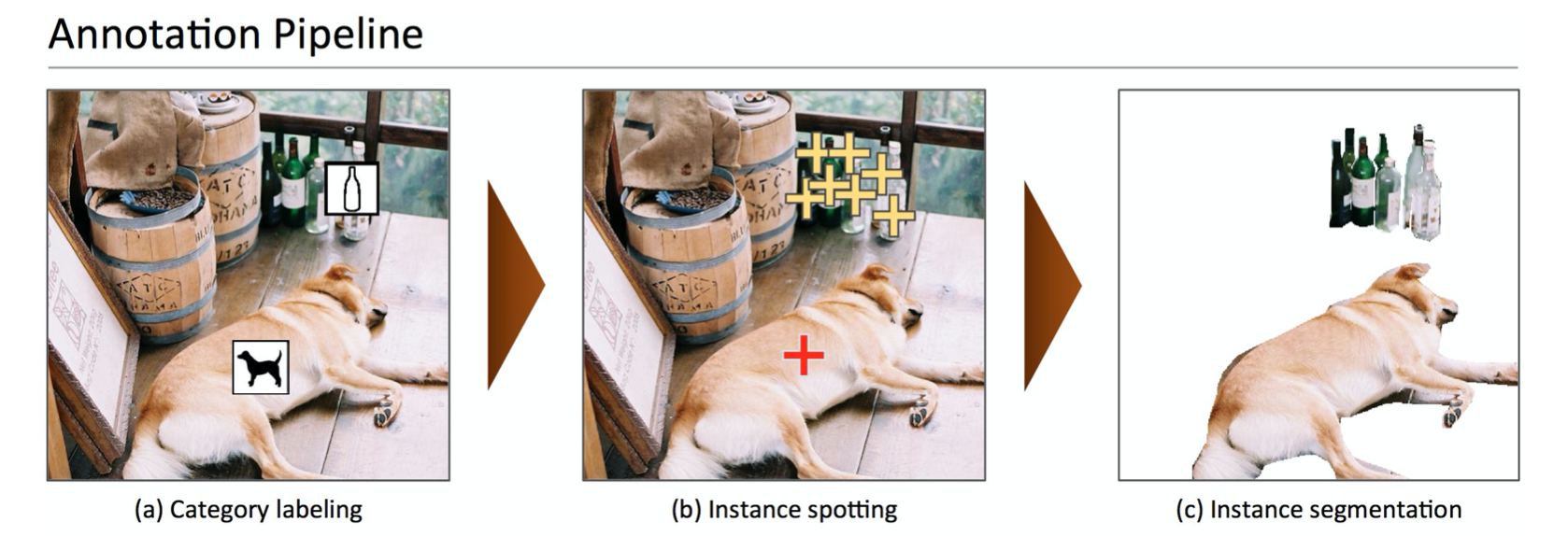

Due to the scale of the data set and the high cost of the annotation process, designing a high-quality yet cost-efficient annotation pipeline was a difficult task. The annotation pipeline for MS COCO (Fig. 3.3) was split into three primary tasks: (1) category labeling, (2) instance spotting, and (3) instance segmentation (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b).

FIGURE 3.3: Annotation pipeline for MS COCO (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b).

As Fig. 3.3 shows, the object categories present in each image were determined in the first step. Due to the large number of images and categories, the authors used a hierarchical approach instead of a separate binary classification for each category. All 91 categories were grouped into 11 super-categories. For each image, annotators then checked whether any instance belonging to one of the given super-categories was present. Workers only had to mark one instance per category found, using the category’s icon (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b). Each image was labeled by eight workers. This hierarchical approach helped to reduce the time needed for labeling. However, the first phase still took ∼20k worker hours to complete.

In the next step, all instances of the object categories in an image were labeled, with each worker labeling at most 10 instances of a given category per image. In both the instance spotting and the instance segmentation steps, the location of the instance found by a worker in the previous stage was shown to the current worker. Each image was again labeled by eight workers, summing up to a total of ∼10k worker hours.

In the final segmentation stage, each object instance was segmented. The segmentations of other instances and the specification of the object instance by a worker in the previous stage were again shown to the worker. Segmenting 2.5 million object instances was an extremely time-consuming task which required over 22 worker hours per 1,000 segmentations. To minimize cost and improve the quality of segmentation, all workers were required to complete a training task for each object category. To further ensure quality, an explicit verification step was performed on each segmented instance as well.
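To get a feeling for the scale of the annotation effort, the figures quoted for the three stages can be combined in a back-of-the-envelope estimate. The inputs below are the rounded values from the text (“∼20k”, “∼10k”, “over 22 hours per 1,000 segmentations”), so the result is only a ballpark figure and not an official accounting by the authors.

```python
# Rounded figures quoted in the text for the three annotation stages.
category_labeling_hours = 20_000        # stage 1, approximate
instance_spotting_hours = 10_000        # stage 2, approximate
hours_per_1000_segmentations = 22       # stage 3, quoted as "over 22"
num_instances = 2_500_000

segmentation_hours = hours_per_1000_segmentations * num_instances / 1000
total_hours = category_labeling_hours + instance_spotting_hours + segmentation_hours

print(f"segmentation: {segmentation_hours:,.0f} worker hours")
print(f"all stages:   {total_hours:,.0f} worker hours")
```

Under these assumptions, segmentation alone accounts for roughly two thirds of the total effort, which is why the training task and verification step for segmentation mattered so much for cost.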

3.1.1.2 Comparison with other data sets

In recent years, researchers have developed several pre-training data sets and benchmarks which helped the development of algorithms for CV. These data sets vary significantly in size, number of categories and types of images. In the previous part, we also introduced the different research focus of some data sets, e.g. ImageNet (Deng et al. 2009), PASCAL VOC (Everingham et al. 2010) and SUN (Xiao et al. 2010). ImageNet, containing millions of images, has enabled major breakthroughs in both object classification and detection research using a new class of deep learning algorithms. It was created with the intention of capturing a large number of object categories, many of which are fine-grained. SUN focuses on labeling scene types and the objects that commonly occur in them. Finally, PASCAL VOC’s primary application is object detection in natural images. MS COCO is designed for the detection and segmentation of objects occurring in their natural context (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b).

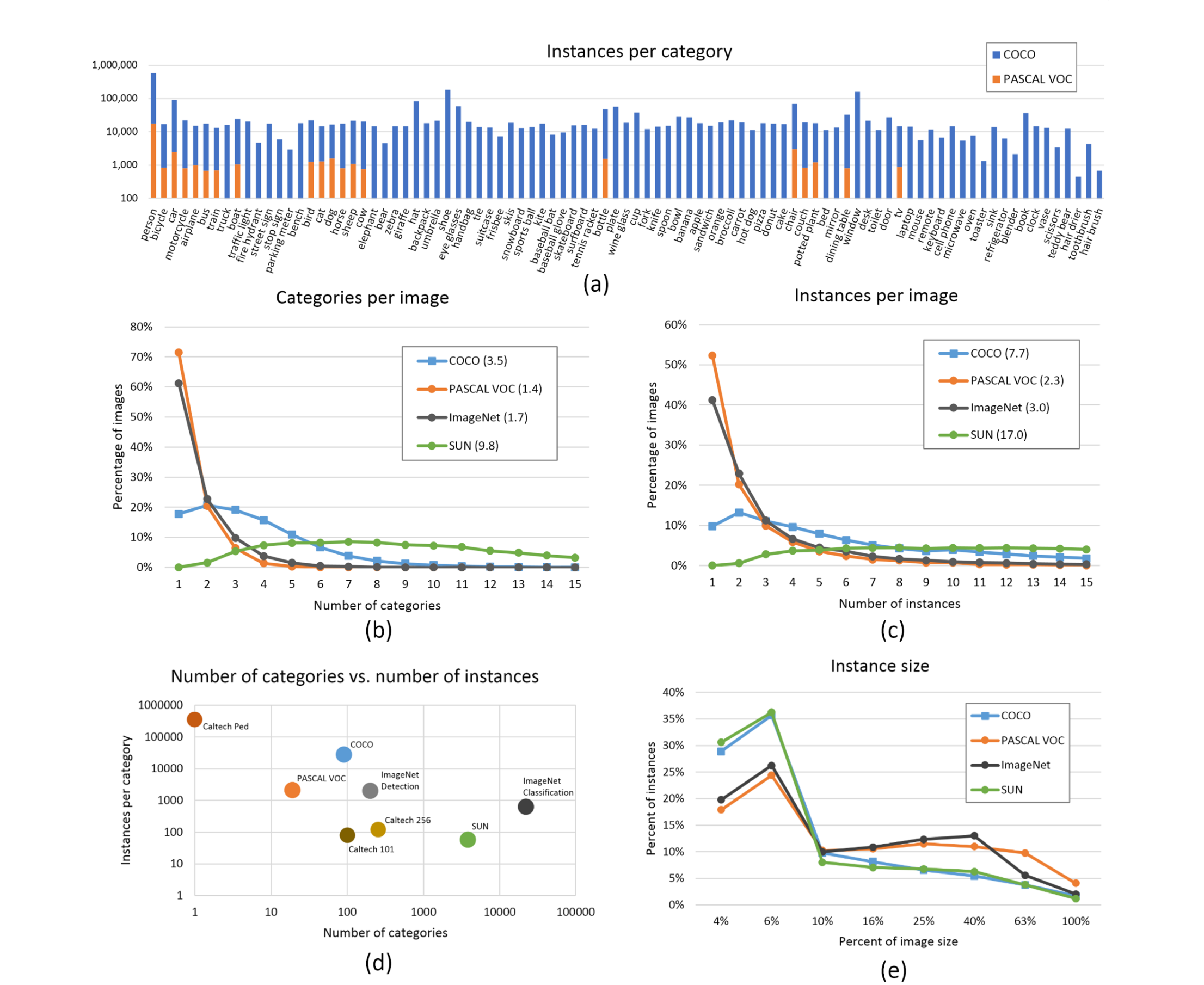

With the help of Fig. 3.4, one can compare MS COCO to ImageNet, PASCAL VOC and SUN with respect to different aspects (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b).

FIGURE 3.4: Comparison MS COCO with PASCAL VOC, SUN and ImageNet (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b).

The number of instances per category for all 91 categories in MS COCO and PASCAL VOC is shown in subfigure 3.4 (a). Compared to PASCAL VOC, MS COCO has both more categories and (on average) more instances per category. The number of object categories and the number of instances per category for all the data sets is shown in subfigure 3.4 (d). MS COCO has fewer categories than ImageNet and SUN, but it has the highest average number of instances per category among all the data sets, which according to the authors might be useful for learning complex models capable of precise localization (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b). Subfigures 3.4 (b) and (c) show the number of annotated categories and annotated instances per image for MS COCO, ImageNet, PASCAL VOC and SUN (the average numbers of categories and instances are shown in parentheses). On average, MS COCO contains 3.5 categories and 7.7 instances per image. ImageNet and PASCAL VOC both have on average fewer than 2 categories and 3 instances per image. The SUN data set has the most contextual information, with on average 9.8 categories and 17 instances per image. Subfigure 3.4 (e) depicts the distribution of instance sizes for the MS COCO, ImageNet Detection, PASCAL VOC and SUN data sets.
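Per-image statistics such as “categories per image” and “instances per image” can be computed directly from a flat list of instance annotations. The following sketch uses a made-up toy annotation list in a simplified layout (one dictionary per instance, with hypothetical `image_id` and `category` keys); it does not reproduce the numbers reported for the real data sets.

```python
from collections import defaultdict

# Toy annotations, one entry per labeled instance (values are made up).
annotations = [
    {"image_id": 1, "category": "dog"},
    {"image_id": 1, "category": "dog"},
    {"image_id": 1, "category": "car"},
    {"image_id": 2, "category": "person"},
]

def per_image_stats(annotations):
    """Return (average categories per image, average instances per image)."""
    instances = defaultdict(int)
    categories = defaultdict(set)
    for ann in annotations:
        instances[ann["image_id"]] += 1
        categories[ann["image_id"]].add(ann["category"])
    n_images = len(instances)  # images without any annotation are not counted
    avg_instances = sum(instances.values()) / n_images
    avg_categories = sum(len(c) for c in categories.values()) / n_images
    return avg_categories, avg_instances

avg_categories, avg_instances = per_image_stats(annotations)
print(avg_categories, avg_instances)
```

Note that this sketch averages only over images that have at least one annotation; whether empty images are included changes the averages and is a design choice to make explicit when comparing data sets.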

3.1.1.3 Discussion

MS COCO is a large-scale data set for detecting and segmenting objects found in everyday life, with the aim of improving the state of the art in object recognition and scene understanding. It focuses on non-iconic images of objects in natural environments and contains rich contextual information with many objects present per image. Like other widely used vision data sets, MS COCO was labor-intensive and costly to create: at great cost and with over 70,000 worker hours, 2.5 million instances were annotated to drive the advancement of object detection and segmentation algorithms. MS COCO is still a good benchmark for the field of CV (T.-Y. Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick 2014b). The MS COCO team also points out directions for future work. For example, “stuff” labels such as “sky”, “grass” and “street” could also be included in the data set, since “stuff” categories provide significant contextual information for object detection.

3.1.2 Models for Image captioning

The image captioning task is generally to describe the visual content of an image in natural language, so it requires an algorithm to understand and model the relationships between visual and textual elements, and to generate a sequence of output words (Cornia et al. 2020). In the last few years, a variety of methods have been proposed for image captioning. Early approaches were based on generating simple templates, filled with the output of an object detector or attribute predictor (Socher and Fei-fei 2010), (Yao et al. 2010). Given the sequential nature of language, most research on image captioning has focused on deep learning techniques, especially Recurrent Neural Network models (RNNs) (Vinyals et al. 2015), (Karpathy and Fei-Fei 2014) or one of their special variants (e.g. LSTMs). Mostly, RNNs are used as language models for sequence generation, while visual information is encoded in the output of a CNN. With the aim of modelling the relationships between image regions and words, graph convolutional neural networks (T. Yao et al. 2018a) or single-layer attention mechanisms (Xu et al. 2015) have been proposed for the image encoding phase to incorporate more semantic and spatial relationships between objects. RNN-based models are widely adopted; however, they are limited in their representation power and by their sequential nature (Cornia et al. 2020).

Recently, new fully-attentive models, in which self-attention replaces recurrence, have been proposed. These approaches apply the Transformer architecture (Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser, et al. 2017c) and BERT (Devlin et al. 2019) models to image captioning tasks. The transformer consists of an encoder with a stack of self-attention and feed-forward layers, and a decoder which uses (masked) self-attention on words and cross-attention over the output of the last encoder layer (Cornia et al. 2020). While the aforementioned approaches exploit the original transformer architecture, some other transformer-based approaches pair a transformer-like encoder with an LSTM decoder. Herdade et al. (2019) proposed a transformer architecture for image captioning that focuses on geometric relations between input objects. Specifically, additional geometric weights between object pairs are computed and used to scale the attention weights. Around the same time, an extension of the attention operator was introduced in which the final attended information is weighted by a gate guided by the context (Huang et al. 2019).

3.1.3 Meshed-Memory Transformer for Image Captioning (\(M^2\))

Transformer-based architectures have been widely implemented in sequence modeling tasks like machine translation and language understanding. However, their applicability for multi-modal tasks like image captioning has still been largely under-explored (Cornia et al. 2020).

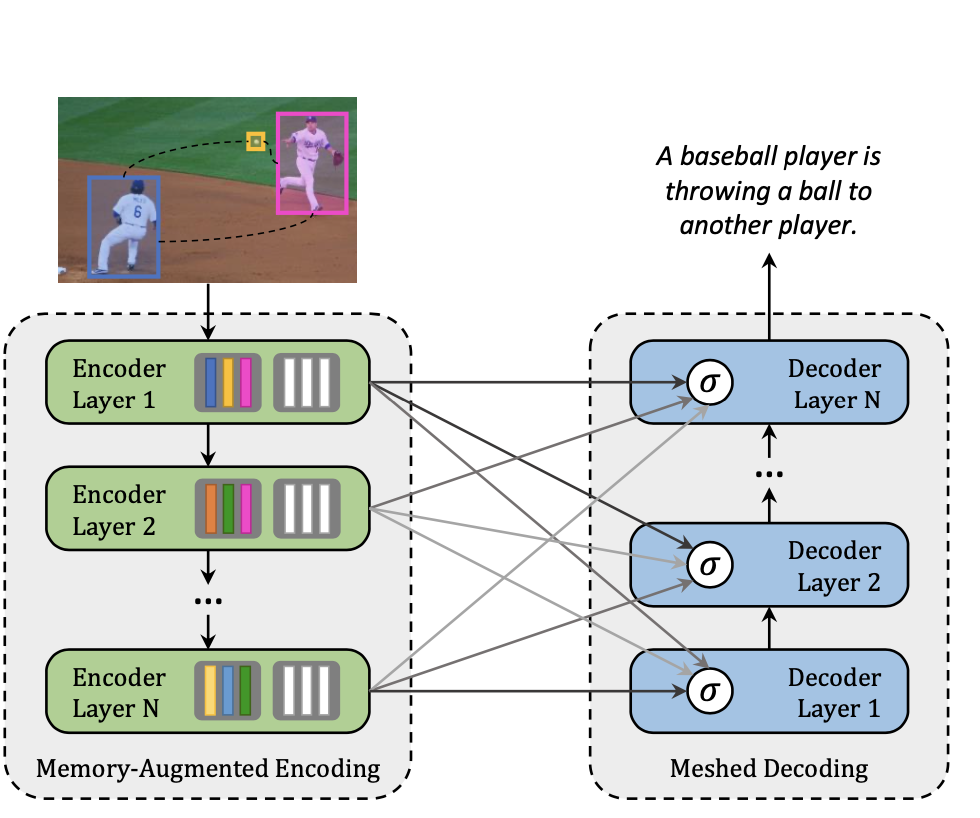

FIGURE 3.5: \(M^2\) Transformer (Cornia et al. 2020).

A novel fully-attentive approach called Meshed-Memory Transformer for Image Captioning (\(M^2\)) was proposed in 2020 (Cornia et al. 2020) with the aim of improving the design of both the image encoder and the language decoder. Compared to previous image captioning models, \(M^2\) (see Fig. 3.5) has two novelties. First, the encoder encodes a multi-level representation of the relationships between image regions, covering both low-level and high-level relations, and a priori knowledge can be learned and modeled by using persistent memory vectors. Second, the multi-layer architecture exploits both low- and high-level visual relationships through a learned gating mechanism, which computes the weight at each level. This creates a mesh-like connectivity between encoder and decoder layers for the sentence generation process (Cornia et al. 2020).

3.1.3.1 \(M^2\) Transformer Architecture

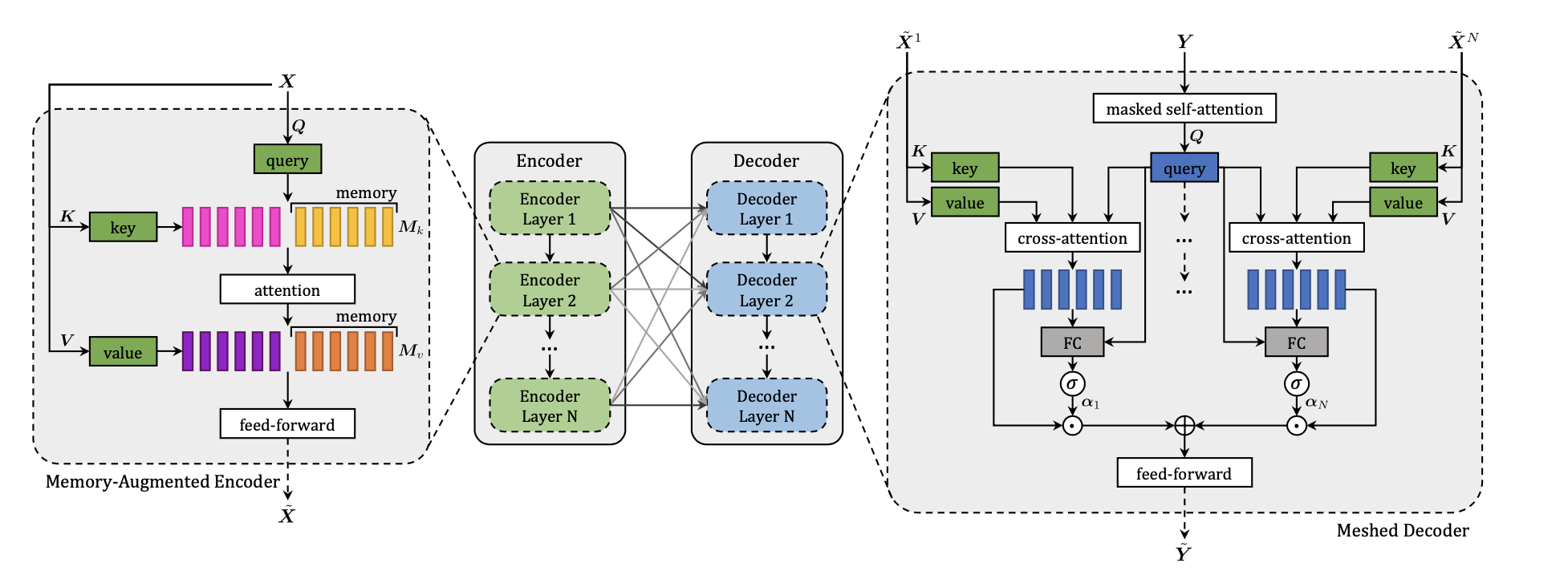

FIGURE 3.6: \(M^2\) Transformer Architecture (Cornia et al. 2020).

Fig. 3.6 shows the detailed architecture of the \(M^2\) Transformer. It can be divided into an encoder module (left) and a decoder module (right), each with multiple layers. Given the input image regions \(X\), the input is passed through the attention and feed-forward layers. Each encoding layer encodes the relationships between image regions together with a priori knowledge, and the output of each encoding layer is read by the decoding layers to generate the caption for the image word by word (Cornia et al. 2020).

All interactions between word-level and image-level features of the input image \(X\) are modeled using scaled dot-product attention. Attention operates on vectors of queries \(q\), keys \(k\) and values \(v\), and takes a weighted sum of the value vectors according to a similarity distribution between query and key vectors. Attention can be defined as follows (Cornia et al. 2020):

\[\begin{equation} Attention(Q, K, V) = softmax(\frac{QK^T}{\sqrt{d}}) V \tag{3.1} \end{equation}\]

where \(Q\) is a matrix of \(n_q\) query vectors, \(K\) and \(V\) both contain \(n_k\) keys and values, all vectors have the same dimensionality, and \(d\) is a scaling factor.
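Equation (3.1) can be illustrated with a minimal NumPy sketch. It assumes that queries, keys and values are stored as rows of their matrices and that the scaling factor \(d\) equals the shared vector dimensionality; the random inputs are placeholders.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(Q, K, V):
    """Scaled dot-product attention with row-wise queries, keys and values."""
    d = Q.shape[-1]                                    # assumed scaling factor
    weights = softmax(Q @ K.T / np.sqrt(d), axis=-1)   # shape (n_q, n_k)
    return weights @ V, weights

rng = np.random.default_rng(0)
n_q, n_k, dim = 3, 5, 4
Q = rng.normal(size=(n_q, dim))
K = rng.normal(size=(n_k, dim))
V = rng.normal(size=(n_k, dim))

out, weights = attention(Q, K, V)
print(out.shape)             # one output vector per query
print(weights.sum(axis=1))   # each row is a distribution over the keys
```

Each output row is a weighted average of the value vectors, where the weights for a query form a probability distribution over all keys.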

3.1.3.1.1 Memory-Augmented Encoder

For the given set of image regions \(X\), attention can be used to obtain a permutation-invariant encoding of \(X\) through self-attention operations. The operator from the Transformer can be defined as follows (Cornia et al. 2020):

\[\begin{equation} S(X) = Attention(W_q X, W_k X, W_vX) \end{equation}\]

In this case, queries, keys, and values are linear projections of the input features, and \(W_q\), \(W_k\), \(W_v\) are the corresponding learnable weight matrices. The output depends solely on the pairwise similarities between linear projections of the input set \(X\), so the self-attention operator encodes the pairwise relationships inside the input. But self-attention also has a limitation: a priori knowledge on relationships between image regions cannot be modeled. To overcome this limitation, the authors introduce a Memory-Augmented Attention operator by extending the keys and values with additional prior information, which does not depend on the image regions \(X\). The additional keys and values are initialized as plain learnable vectors which can be directly updated via SGD. The operator can be defined as follows (Cornia et al. 2020):

\[\begin{align} M_{mem}(X) &= Attention(W_qX, K, V ) \notag \\ K &= [W_kX, M_k]\notag \\ V &= [W_vX, M_v] \end{align}\]

\(M_k\) and \(M_v\) are learnable matrices, with \(n_m\) rows, [·,·] indicates concatenation. The additional keys and value could help to retrieve a priori knowledge from input while keeping the quries unchanged (Cornia et al. 2020).
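The memory-augmented operator can be sketched in NumPy as follows. The sketch uses a row-vector convention (each row of `X` is one image region, so projections are written `X @ W` instead of \(WX\)), and all weights and memory slots are random placeholders rather than trained parameters.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(Q, K, V):
    return softmax(Q @ K.T / np.sqrt(Q.shape[-1])) @ V

def memory_augmented_attention(X, W_q, W_k, W_v, M_k, M_v):
    # Keys and values are extended with memory slots that do not depend on X;
    # the queries are left unchanged.
    K = np.concatenate([X @ W_k, M_k], axis=0)   # shape (n + n_m, d)
    V = np.concatenate([X @ W_v, M_v], axis=0)   # shape (n + n_m, d)
    return attention(X @ W_q, K, V)

rng = np.random.default_rng(0)
n, d, n_m = 6, 8, 3                               # regions, feature size, memory slots
X = rng.normal(size=(n, d))
W_q, W_k, W_v = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3))
M_k = rng.normal(size=(n_m, d))                   # would be learned via SGD
M_v = rng.normal(size=(n_m, d))

out = memory_augmented_attention(X, W_q, W_k, W_v, M_k, M_v)

# With zero memory slots the operator reduces to plain self-attention S(X).
empty = np.zeros((0, d))
plain = memory_augmented_attention(X, W_q, W_k, W_v, empty, empty)
```

The output keeps one row per image region, because only the set of keys and values grows; with an empty memory the result coincides with ordinary self-attention.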

For the encoding layer, the memory-augmented operator is embedded into a Transformer-like layer, and its output is fed into a position-wise feed-forward layer (Cornia et al. 2020):

\[\begin{equation} F(X)_i= U\sigma(V X_i + b) + c; \end{equation}\]

\(X_i\) indicates the \(i\)-th vector of the input set, and \(F(X)_i\) the \(i\)-th vector of the output. Also, \(\sigma(·)\) is the ReLU activation function, \(V\) and \(U\) are learnable weight matrices, \(b\) and \(c\) are bias terms (Cornia et al. 2020).

Each component will be complemented by a residual connection and the layer norm operation. The complete definition of an encoding layer can be finally written as (Cornia et al. 2020):

\[\begin{align} Z &= AddNorm(M_{mem}(X))\notag \\ \tilde{X}&=AddNorm(F(Z)) \end{align}\]
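A rough NumPy sketch of such an encoding layer is given below. It assumes that AddNorm adds the sublayer input as a residual before a layer normalization without learnable gain and bias, and it uses a trivial placeholder (`attention_op`) in place of \(M_{mem}\) to stay short. All names and sizes are illustrative.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def add_norm(x, sublayer_out):
    # Residual connection followed by layer normalization.
    return layer_norm(x + sublayer_out)

def feed_forward(X, V, b, U, c):
    # F(X)_i = U relu(V X_i + b) + c, applied to every row X_i independently.
    return np.maximum(X @ V.T + b, 0.0) @ U.T + c

def encoding_layer(X, attention_op, V, b, U, c):
    Z = add_norm(X, attention_op(X))
    return add_norm(Z, feed_forward(Z, V, b, U, c))

rng = np.random.default_rng(0)
n, d, d_ff = 4, 8, 16
X = rng.normal(size=(n, d))
V, b = rng.normal(size=(d_ff, d)), np.zeros(d_ff)
U, c = rng.normal(size=(d, d_ff)), np.zeros(d)

# Placeholder for M_mem: every region simply receives the mean of all regions.
attention_op = lambda X: np.broadcast_to(X.mean(axis=0), X.shape)

# Stack two layers (sharing weights only to keep the sketch short) and
# keep the output of every layer, as the meshed decoder needs all of them.
outputs, H = [], X
for _ in range(2):
    H = encoding_layer(H, attention_op, V, b, U, c)
    outputs.append(H)
X_tilde = outputs[-1]
print(len(outputs), X_tilde.shape)
```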

Finally, the full encoder stacks multiple encoding layers sequentially, so that the \(i\)-th layer uses the output set computed by layer \(i − 1\). Higher encoding layers can thus exploit and refine relationships identified by previous layers. A stack of \(n\) encoding layers produces the output \(\tilde{X} = (\tilde{X}^1 \dots \tilde{X}^n)\) (Cornia et al. 2020).

3.1.3.1.2 Meshed Decoder

The decoder depends on both previously generated words and image region encodings. Meshed Cross-Attention can take advantage of all the encoder layers to generate captions for the image. The structure of the meshed decoder is shown on the right side of Fig. 3.6. The input sequence vector \(Y\) and the outputs from all encoder layers \(\tilde{X}\) are connected by the meshed attention operator gated through cross-attention. The meshed attention operator is formally defined as (Cornia et al. 2020):

\[\begin{equation} M_{mesh}(\tilde{X}, Y) =\sum_{i = 1}^{N}\alpha_i C(\tilde{X^i}, Y) \end{equation}\]

\(C(·,·)\) stands for the encoder-decoder cross-attention. It is computed with queries from the decoder, while the keys and values come from the encoder (Cornia et al. 2020).

\[\begin{equation} C(\tilde{X^i}, Y) = Attention(W_q Y, W_k \tilde{X^i}, W_v \tilde{X^i}) \end{equation}\]

\(\alpha_i\) is a matrix of weights of the same size as the cross-attention results. It models both the individual contribution of each encoder layer and the relative importance of the different layers (Cornia et al. 2020).

\[\begin{equation} \alpha_i = \sigma(W_i [Y,C(\tilde{X^i}, Y)]+ b_i) \end{equation}\]

The [·,·] indicates concatenation and \(\sigma(·)\) is the sigmoid activation function here, \(W_i\) is a weight matrix, and \(b_i\) is a learnable bias vector (Cornia et al. 2020).
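The gated sum over encoder layers can be sketched as follows. The sketch assumes row-wise features, element-wise multiplication of \(\alpha_i\) with the cross-attention result, and (for brevity only) projection matrices \(W_q\), \(W_k\), \(W_v\) shared across encoder layers. These are simplifications for illustration and not a statement about the reference implementation.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def attention(Q, K, V):
    return softmax(Q @ K.T / np.sqrt(Q.shape[-1])) @ V

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def meshed_attention(X_layers, Y, W_q, W_k, W_v, W_gates, b_gates):
    """Gated sum of cross-attentions over the outputs of all encoder layers."""
    out = np.zeros_like(Y)
    for X_i, W_i, b_i in zip(X_layers, W_gates, b_gates):
        C_i = attention(Y @ W_q, X_i @ W_k, X_i @ W_v)                    # (t, d)
        alpha_i = sigmoid(np.concatenate([Y, C_i], axis=-1) @ W_i + b_i)  # (t, d)
        out += alpha_i * C_i                                              # element-wise gate
    return out

rng = np.random.default_rng(0)
N, n, t, d = 3, 5, 4, 8  # encoder layers, regions, words so far, feature size
X_layers = [rng.normal(size=(n, d)) for _ in range(N)]
Y = rng.normal(size=(t, d))
W_q, W_k, W_v = (rng.normal(size=(d, d)) for _ in range(3))
W_gates = [rng.normal(size=(2 * d, d)) for _ in range(N)]
b_gates = [np.zeros(d) for _ in range(N)]
out = meshed_attention(X_layers, Y, W_q, W_k, W_v, W_gates, b_gates)
print(out.shape)
```

Because each gate lies between 0 and 1 and is computed separately per layer, the decoder can weight low-level and high-level encodings differently for every word and feature dimension.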

In decoder layers, the prediction of a word should depend only on the previously generated words, so the decoder layer comprises a masked self-attention operation: the operator can only connect queries derived from the \(t\)-th element of its input sequence \(Y\) with keys and values from the left sub-sequence, i.e. \(Y_{≤t}\).
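The masking can be illustrated with a short NumPy sketch. It assumes row-wise word vectors and random (untrained) projection matrices, and the function name is hypothetical. Scores for positions to the right of the current word are set to \(-\infty\) before the softmax, so their attention weights become exactly zero.

```python
import numpy as np

def masked_self_attention(Y, W_q, W_k, W_v):
    t = Y.shape[0]
    Q, K, V = Y @ W_q, Y @ W_k, Y @ W_v
    scores = Q @ K.T / np.sqrt(Q.shape[-1])
    # Position i may only attend to positions <= i: mask the strict upper triangle.
    mask = np.triu(np.ones((t, t), dtype=bool), k=1)
    scores = np.where(mask, -np.inf, scores)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ V, weights

rng = np.random.default_rng(0)
t, d = 5, 8
Y = rng.normal(size=(t, d))
W_q, W_k, W_v = (rng.normal(size=(d, d)) for _ in range(3))
out, weights = masked_self_attention(Y, W_q, W_k, W_v)

# Changing the last word must not affect the outputs of earlier positions.
Y_changed = Y.copy()
Y_changed[-1] += 1.0
out_changed, _ = masked_self_attention(Y_changed, W_q, W_k, W_v)
print(np.allclose(out[:-1], out_changed[:-1]))
```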

Similar to the encoder layers, the decoder layers also contain a position-wise feed-forward layer, so the decoder layer can be finally defined as (Cornia et al. 2020):

\[\begin{align} Z &= AddNorm(M_{mesh}(\tilde{X},AddNorm(S_{mask}(Y )))) \notag \\ \tilde{Y} &= AddNorm(F(Z)), \end{align}\]

where \(S_{mask}\) indicates a masked self-attention over time (Cornia et al. 2020). The full decoder with multiple decoder layers takes the input word vectors as well as the \(t\)-th element (and all elements prior to it) of its output sequence to make the prediction for the word at \(t + 1\), conditioned on \(Y_{≤t}\). Finally, the decoder applies a linear projection and a softmax operation, whose output can be seen as a probability distribution over all words in the vocabulary (Cornia et al. 2020).

3.1.3.1.3 Comparison with other models on the MS COCO data sets

The \(M^2\) Transformer was evaluated on MS COCO, which is still one of the most commonly used test data sets for image captioning. Instead of using the original MS COCO data set, Cornia et al. (2020) follow the split of MS COCO provided by Karpathy and Fei-Fei (2014). The Karpathy split uses 5000 images for validation, 5000 images for testing and the rest for training.

For model evaluation and comparison, standard metrics for evaluating generated sequences are used: BLEU (Papineni et al. 2002), METEOR (Banerjee and Lavie 2005), ROUGE (Lin 2004), CIDEr (Vedantam, Zitnick, and Parikh 2015), and SPICE (Anderson et al. 2016), all of which have been introduced in the second chapter.

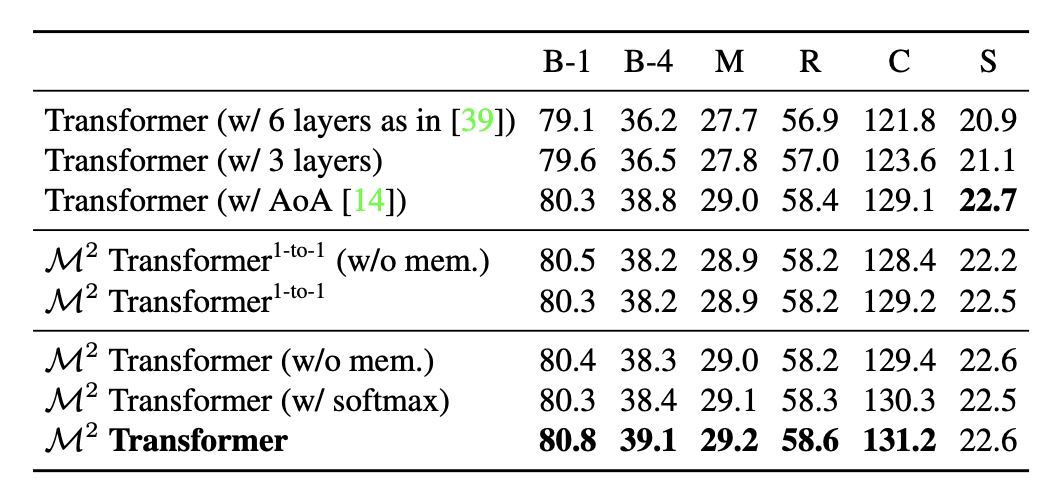

FIGURE 3.7: Comparison of \(M^2\) with Transformer-based alternatives (Cornia et al. 2020)

The Transformer architecture in its original configuration with six layers had already been applied to captioning, but researchers speculated that captioning might require specific architectures, so variations of the original Transformer are compared with the \(M^2\) Transformer. These variations are a Transformer with three layers and the “Attention on Attention” (AoA) approach (Huang et al. 2019) applied to the attentive layers, both in the encoder and in the decoder (Cornia et al. 2020). The second part of the comparison evaluates the importance of the meshed connections between encoder and decoder layers. \(M^2\) Transformer (1 to 1) is a reduced version of the original \(M^2\) Transformer, in which each encoder layer is connected only to the corresponding decoder layer instead of to all decoder layers. As Fig. 3.7 shows, the original Transformer reaches 121.8 CIDEr, which is lower than the reduced version of the \(M^2\) Transformer at 129.2 CIDEr. Meshed connectivity, which exploits relationships encoded at all layers and weights them with a sigmoid gating, brings a further improvement in CIDEr from 129.2 to 131.2. The table also covers the role of the memory vectors and a softmax gating scheme for the \(M^2\) Transformer. Eliminating the memory vectors reduces performance by nearly 1 CIDEr point in both the reduced and the original \(M^2\) Transformer (Cornia et al. 2020).

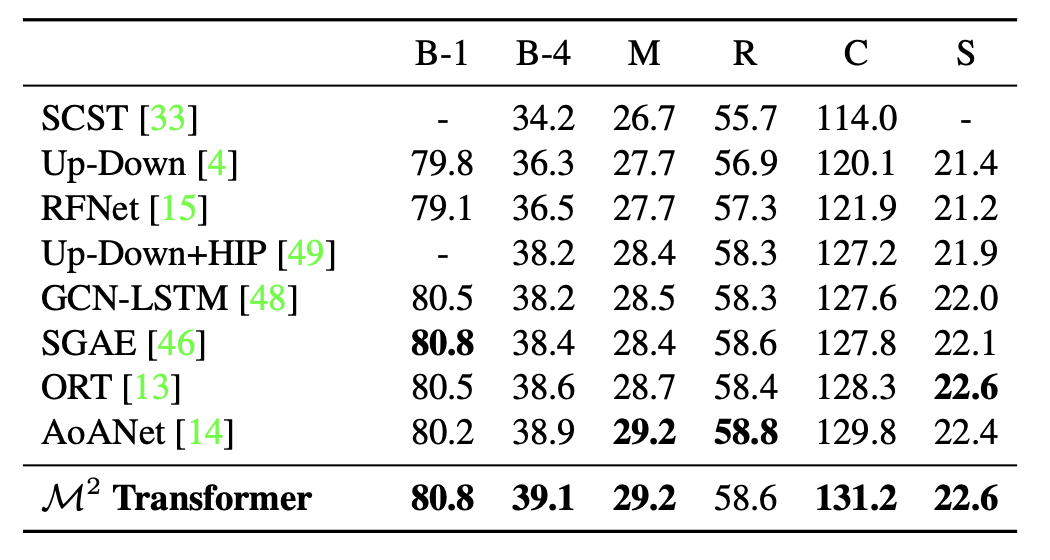

FIGURE 3.8: Comparison with the state-of-the-art on the “Karpathy” test split, in single-model setting (Cornia et al. 2020).

Fig. 3.8 compares the performance of the \(M^2\) Transformer with several recently proposed models for image captioning. SCST (Rennie et al. 2017) and Up-Down (Anderson et al. 2018) use attention over the grid of features and attention over regions, respectively. RFNet (???) uses a recurrent fusion network to merge different CNN features; GCN-LSTM (T. Yao et al. 2018b) uses a Graph CNN to exploit pairwise relationships between image regions; SGAE (Yang et al. 2019) instead uses auto-encoding scene graphs. The original AoA-Net (Huang et al. 2019) approach uses attention on attention for encoding image regions and an LSTM language model. Finally, ORT (Herdade et al. 2019) uses a plain Transformer and weights attention scores in the region encoder with pairwise distances between detections (Cornia et al. 2020).

In Fig. 3.8, the \(M^2\) Transformer exceeds all other models on BLEU-4, METEOR, and CIDEr. The performance of the \(M^2\) Transformer was very close and competitive with SGAE on BLEU-1 and with ORT with respect to SPICE.

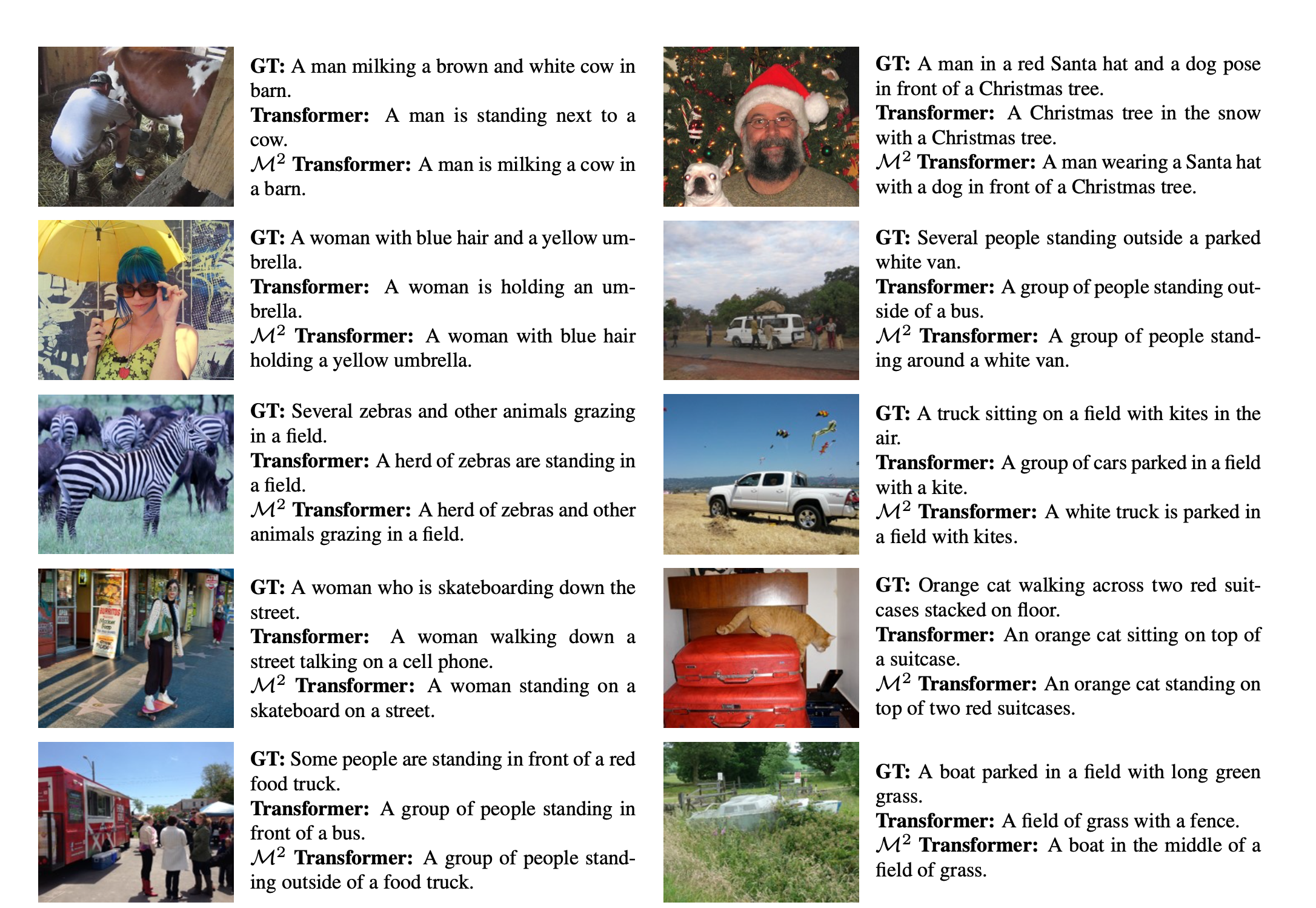

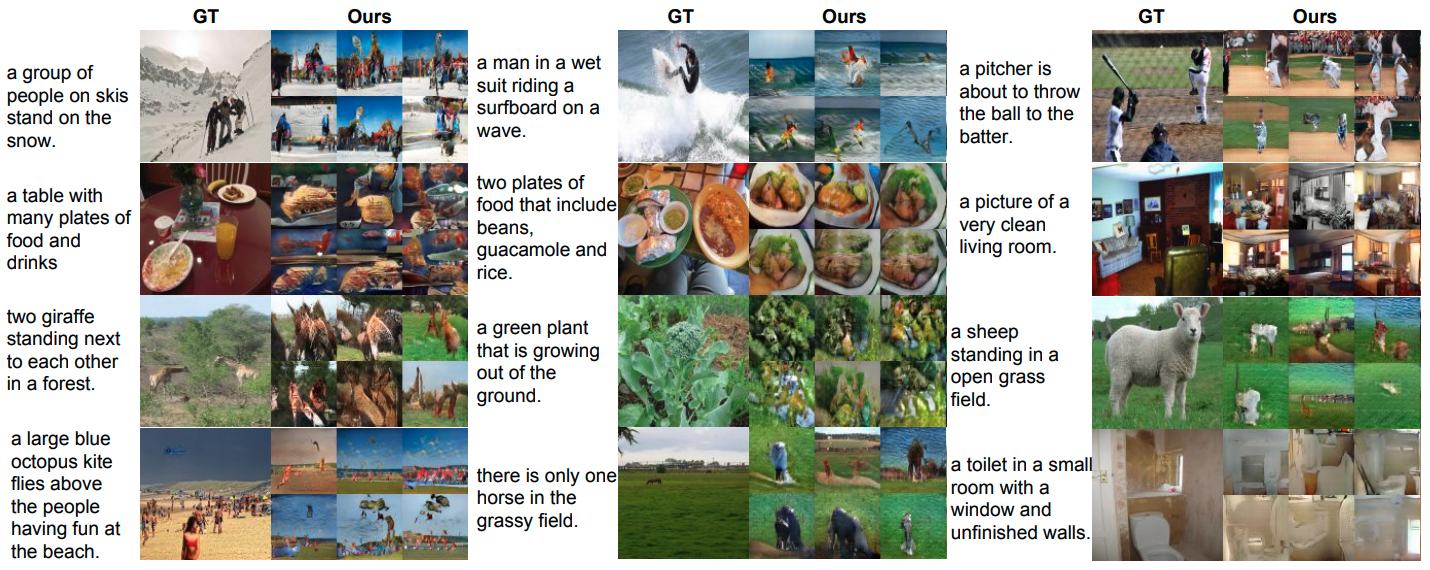

FIGURE 3.9: Examples of captions generated by \(M^2\) Transformer and the original Transformer model, as well as the corresponding ground-truths (Cornia et al. 2020).

Fig. 3.9 shows some examples of captions generated by \(M^2\) Transformer and the original transformer model, as well as the corresponding ground-truths. According to the selected examples of captions, \(M^2\) Transformer shows the ability to generate more accurate descriptions of the images, and the approach could detect the more detailed relationships between image regions (Cornia et al. 2020).

The \(M^2\) Transformer is a new Transformer-based architecture for image captioning. It improves the image encoding by learning a multi-level representation of the relationships between image regions while exploiting a priori knowledge from each encoder layer, and uses a mesh-like connectivity at the decoding stage to exploit low- and high-level features in the language generation steps. The results of the model evaluation on MS COCO show that the performance of the \(M^2\) Transformer surpasses most of the recent approaches and achieves a new state of the art on MS COCO (Cornia et al. 2020).

3.2 Text2Image

Author: Karol Urbańczyk

Supervisor: Jann Goschenhofer

Have you ever wondered what a painting artist could paint for you if you ordered a high-quality oil painting of a psychedelic hamster dragon? Probably not. Nevertheless, one of the answers could be:

FIGURE 3.10: Hamster dragon

The catch is that there is no human artist. The above picture comes from a 3.5-billion parameter model called GLIDE by OpenAI (Nichol et al. 2021b). Every single value of every pixel was generated from a distribution that the model had to learn in the first place. Before generating the image, GLIDE abstracted the concepts of ‘hamster’ and ‘dragon’ from looking at millions of training images. Only then, it was able to create and combine them successfully into a meaningful visual representation. Welcome to the world of current text-to-image modelling!

The cross-modal field of text-to-image models has developed significantly over recent years. What was considered unimaginable only a few years ago, today constitutes a new benchmark for researchers. New breakthroughs are being published every couple of months. Following these, possible business use cases are emerging, which attracts investment from the greatest players in AI research. However, the trend towards closed-source models is continuing, and the text-to-image field is probably one of the most obvious ones where it can be noticed. We might need to get used to the fact that the greatest capabilities will soon be monopolized by a few companies.

At the same time, the general public is becoming aware of the field itself and the disruption potential it brings. Crucial questions are already emerging. What constitutes art? What does the concept of being an author mean? The result of a generative model is in a sense a combination, or variation, of the abstracts it has seen in the past. But the same stands for a human author. Therefore, is a discussion about the prejudices and biases needed? Answers to all of these will require refinement through an extensive discussion. The last section of this chapter will try to highlight the most important factors that will need to be considered.

However, the primary intention of this chapter is to present the reader with a perspective on how the field was developing chronologically. Starting with the introduction of GANs, through the first cross-domain models, and ending with state-of-the-art achievements (as of September 2022), it will also try to grasp the most important concepts without being afraid of making technical deep dives.

The author is aware that the rapid pace of development makes it nearly impossible for this section to stay up-to-date, so it might very soon no longer fully cover the field. However, it must be stressed that the cutting-edge capabilities of the recent models tend to come from scale and software engineering tricks. Therefore, focusing on the core concepts should hopefully give this chapter a universal character, at least for some time. This design choice also explains why many important works did not make it to this publication. Just to name a few of them as honorable mentions: GAWWN (S. E. Reed, Akata, Mohan, et al. 2016), MirrorGAN (Qiao et al. 2019), or most recent ones: LAFITE (Zhou et al. 2021), Make-a-Scene (Gafni et al. 2022) or CogView (Ding et al. 2021). In one way or another, all of them pushed the research frontier one step further. Therefore, it needs to be clearly stated that the final selection of this chapter’s content is a purely subjective decision of the author.

3.2.1 Seeking objectivity

Before diving into particular models, we introduce objective evaluation procedures that help assess the performance of consecutive works in comparison to their predecessors. Unfortunately, objectivity in comparing generative models is very hard to capture since there is no straightforward way to draw deterministic conclusions about the model’s performance (Theis, Oord, and Bethge 2015). Multiple quantitative and qualitative techniques have been developed to make up for it, but there is no general consensus as to which measures should be used. An extensive comparison has been performed by Borji (2018). A few of the most widely used ones in current research are presented below.

Inception Score (IS)

Introduced by Salimans et al. (2016), Inception Score (IS) uses the Inception Net (Szegedy et al. 2015) trained on ImageNet data to classify the fake images generated by the assessed model. Then, it measures the average KL divergence between the marginal label distribution \(p(y)\) and the label distribution conditioned on the generated samples \(p(y|x)\).

\[exp(\mathop{{}\mathbb{E}}_{x}[KL(p(y|x) || p(y))])\]

\(p(y)\) is desired to have high diversity (entropy), in other words: images from the generative model should represent a wide variety of classes. On the other hand, \(p(y|x)\) is desired to have low diversity, meaning that images should represent meaningful concepts. If a range of cat images is being generated, they all should be confidently classified by Inception Net as cats. The intention behind IS is that a generative model with a higher distance (KL divergence in this case) between these distributions should have a better score. IS is considered a metric that correlates well with human judgment, hence its popularity.
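The formula can be made concrete with a small NumPy sketch. It assumes that the class probabilities \(p(y|x)\) have already been obtained from a classifier and are passed in as a matrix with one row per generated image. The function name and the two toy inputs are illustrative.

```python
import numpy as np

def inception_score(p_yx, eps=1e-12):
    """p_yx: (n_images, n_classes) array, each row a distribution p(y|x)."""
    p_y = p_yx.mean(axis=0, keepdims=True)  # marginal label distribution p(y)
    kl = (p_yx * (np.log(p_yx + eps) - np.log(p_y + eps))).sum(axis=1)
    return float(np.exp(kl.mean()))

# Confident and diverse: each image is clearly assigned to a different class.
sharp_diverse = np.eye(4)
# Uninformative: every image could belong to any class.
uniform = np.full((4, 4), 0.25)

print(inception_score(sharp_diverse))  # approximately 4, the number of classes
print(inception_score(uniform))        # approximately 1, the lowest possible value
```

The score ranges from 1 to the number of classes, and it only reaches the upper end when predictions are both confident per image and spread evenly across classes.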

Fréchet Inception Distance (FID)

A metric that is generally considered to improve upon Inception Score is the Fréchet Inception Distance (FID). Heusel et al. (2017) argue that the main drawback of IS is that it is not considering the real data at all. Therefore, FID again uses Inception Net, however this time it embeds the images (both fake and real samples) into feature space, stopping at a specific layer. In other words, some of the ultimate layers of the network are being discarded. Feature vectors are then assumed to follow a Gaussian distribution and the Fréchet distance is calculated between real and generated data distributions:

\[d^2((m, C), (m_{w}, C_{w})) = ||m-m_{w}||_{2}^2 + Tr(C+C_{w}-2(CC_{w})^{1/2})\]

where \((m, C)\) and \((m_{w}, C_{w})\) represent mean and covariance of generated and real data Gaussians respectively. Obviously, low FID levels are desired.
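A minimal NumPy sketch of the distance is shown below. It assumes that feature vectors have already been extracted (random toy features stand in for Inception activations here) and computes \(Tr((CC_w)^{1/2})\) as the sum of the square roots of the eigenvalues of \(CC_w\), which avoids an explicit matrix square root. The function names are illustrative.

```python
import numpy as np

def frechet_distance(m, C, m_w, C_w):
    diff = m - m_w
    # For covariance matrices, the eigenvalues of C @ C_w are real and
    # non-negative up to round-off, so clip tiny negative values.
    eigvals = np.linalg.eigvals(C @ C_w)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    return float(diff @ diff + np.trace(C) + np.trace(C_w) - 2.0 * tr_sqrt)

def fid_from_features(feat_gen, feat_real):
    m, C = feat_gen.mean(axis=0), np.cov(feat_gen, rowvar=False)
    m_w, C_w = feat_real.mean(axis=0), np.cov(feat_real, rowvar=False)
    return frechet_distance(m, C, m_w, C_w)

rng = np.random.default_rng(0)
feat_real = rng.normal(size=(500, 5))  # toy stand-in for real-image features
feat_shifted = feat_real + 3.0         # same spread, mean shifted by 3 per dimension

fid_same = fid_from_features(feat_real, feat_real)
fid_shift = fid_from_features(feat_shifted, feat_real)
print(fid_same, fid_shift)
```

With identical feature sets the distance is zero up to round-off. With the shifted set only the mean term contributes, giving \(5 \cdot 3^2 = 45\).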

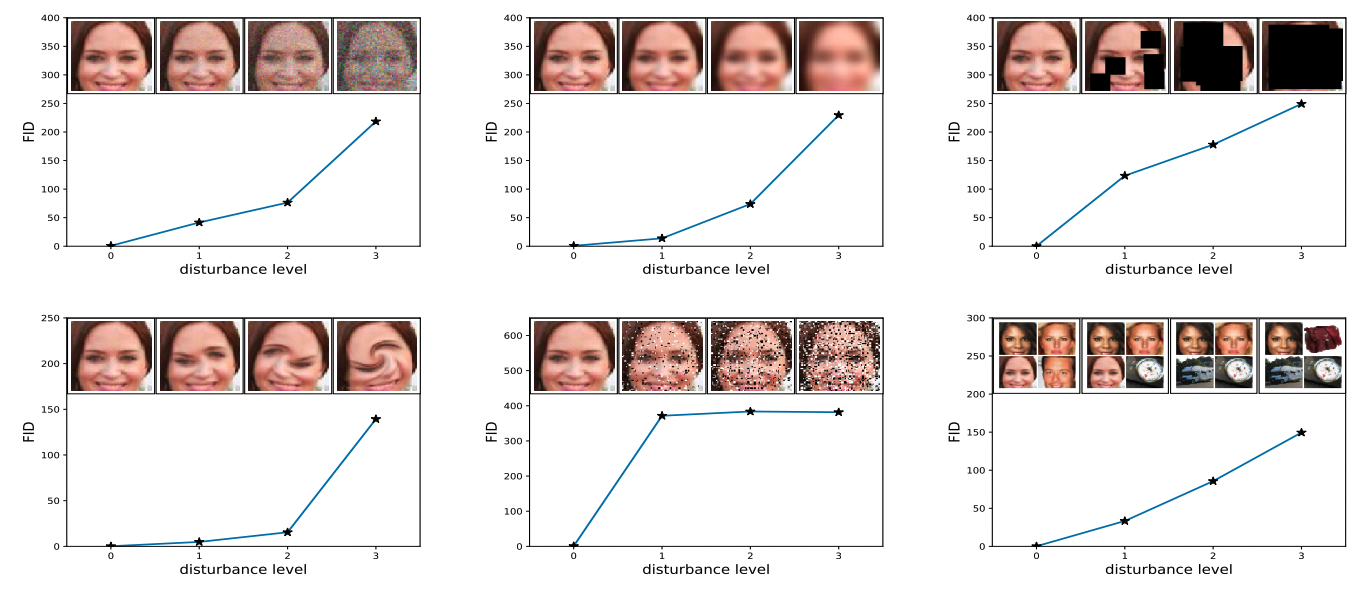

FID is considered to be consistent with human judgement and sensitive to image distortions, which are both desired properties. Figure 3.11 shows how FID increases (worsens) for different types of noise being added to images.

FIGURE 3.11: FID is evaluated for different noise types. From upper left to lower right: Gaussian noise, Gaussian blur, implanted black rectangles, swirled images, salt and pepper, CelebA dataset contaminated by ImageNet images. Figure from Heusel et al. (2017).

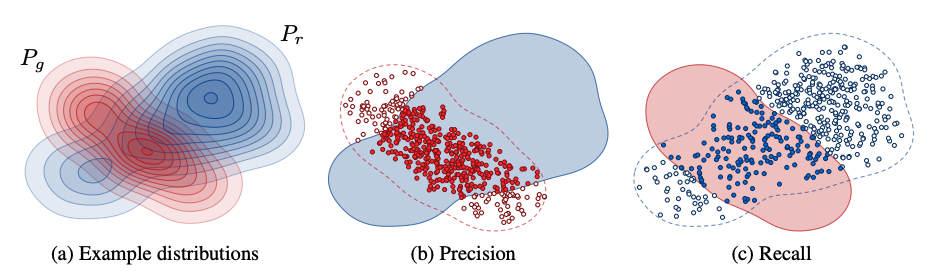

Precision / Recall

Precision and recall are among the most widely used metrics in many Machine Learning problem formulations. However, their classic definition cannot be applied to generative models due to the lack of objective labels. Sajjadi et al. (2018) came up with a novel definition of these metrics calculated directly from distributions, which was further improved by Kynkäänniemi et al. (2019). The argument behind the need for such an approach is that metrics such as IS or FID provide only a one-dimensional view of the model’s performance, ignoring the trade-off between precision and recall. A decent FID result might very well mean high recall (large variation, i.e. wide range of data represented by the model), high precision (realistic images), or anything in between.

Let \(P_{r}\) denote the probability distribution of the real data, and \(P_{g}\) be the distribution of the generated data. In short, recall measures to which extent \(P_{r}\) can be generated from \(P_{g}\), while precision is trying to grasp how many generated images fall within \(P_{r}\).

FIGURE 3.12: Definition of precision and recall for distributions. Figure from Kynkäänniemi et al. (2019).

See Kynkäänniemi et al. (2019) for a more thorough explanation.
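To give a rough intuition, the sketch below follows the k-nearest-neighbour idea of Kynkäänniemi et al. (2019) in a simplified form: the support of a distribution is approximated by balls around its samples, each with a radius equal to the distance to the sample's \(k\)-th nearest neighbour. The 2-D toy points (instead of deep image features), the choice \(k = 3\) and the function names are assumptions made for illustration.

```python
import numpy as np

def pairwise_dist(A, B):
    return np.linalg.norm(A[:, None, :] - B[None, :, :], axis=-1)

def knn_radii(X, k):
    d = pairwise_dist(X, X)
    # After sorting, column 0 is each point's zero distance to itself,
    # so column k is the distance to its k-th nearest neighbour.
    return np.sort(d, axis=1)[:, k]

def coverage(samples, reference, k=3):
    """Fraction of `samples` inside at least one k-NN ball around `reference`."""
    radii = knn_radii(reference, k)
    d = pairwise_dist(samples, reference)
    return float((d <= radii[None, :]).any(axis=1).mean())

rng = np.random.default_rng(0)
real = rng.normal(size=(200, 2))            # stand-in for real-image features
gen = rng.normal(scale=0.1, size=(200, 2))  # generator covering only a small region

precision = coverage(gen, real)  # how many generated samples look "real"
recall = coverage(real, gen)     # how much of the real data the generator covers
print(precision, recall)
```

In this toy setting the generator produces plausible but very similar samples, so precision is high while recall is low. A single number such as FID cannot separate these two effects.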

CLIP score

CLIP is a model from OpenAI [CLIP2021] which is explained in detail in the chapter about text-supporting computer vision models. In principle, CLIP is capable of assessing the semantic similarity between the text caption and the image. Following this rationale, the CLIP score can be used as a metric and is defined as:

\[\mathop{{}\mathbb{E}}[s(f(image) \cdot g(caption))]\]

where the expectation is taken over the batch of generated images and \(s\) is the CLIP logit scale (Nichol et al. 2021b).
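Given precomputed embeddings, the score reduces to an average of scaled dot products. The sketch below assumes that \(f\) and \(g\) return L2-normalized embeddings (the normalization is done explicitly here) and uses an arbitrary placeholder value for the logit scale \(s\). It does not call the actual CLIP model.

```python
import numpy as np

def clip_score(image_emb, text_emb, logit_scale):
    """image_emb, text_emb: (batch, dim) arrays; row i of each belongs to pair i."""
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    similarities = (image_emb * text_emb).sum(axis=1)  # one dot product per pair
    return float((logit_scale * similarities).mean())

rng = np.random.default_rng(0)
text_emb = rng.normal(size=(4, 16))
aligned = text_emb + 0.1 * rng.normal(size=(4, 16))  # images that match their captions
unrelated = rng.normal(size=(4, 16))                 # images unrelated to the captions

s = 100.0  # placeholder logit scale, chosen only for this sketch
print(clip_score(aligned, text_emb, s), clip_score(unrelated, text_emb, s))
```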

Human evaluations

It is common for researchers to also report qualitative measures. Many potential applications of the models are focused on deceiving the human spectator, which motivates reporting of metrics that are based on human evaluation. The general concept of these evaluations is to test for:

- photorealism

- caption similarity (image-text alignment)

Usually, a set of images is presented to a human, whose task is to assess their quality with respect to the two above-mentioned criteria.

3.2.2 Generative Adversarial Networks

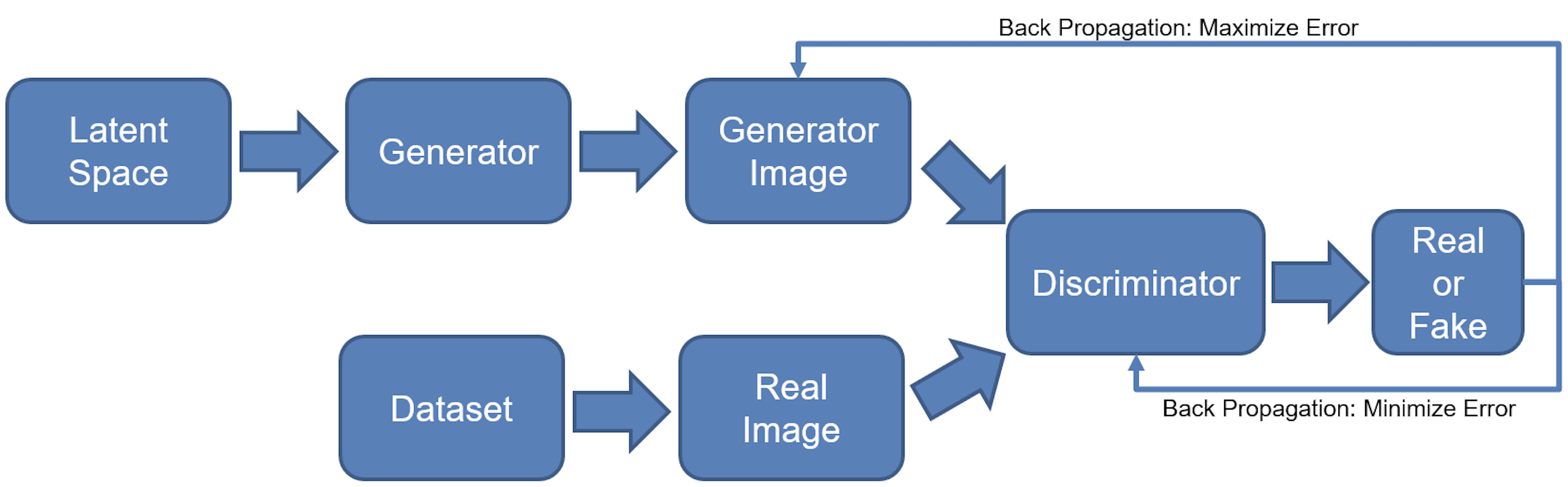

The appearance of Generative Adversarial Networks (GAN) was a major milestone in the development of generative models. Introduced by Ian J. Goodfellow et al. (2014b), the idea of GANs presented a novel architecture and training regime, which corresponds to a minimax two-player game between a Generator and a Discriminator (hence the word adversarial).

GANs can be considered as an initial enabler for the field of text-to-image models, and for a long time GAN-like models were achieving state-of-the-art results, hence the presentation of their core concepts in this chapter.

3.2.2.1 Vanilla GAN for Image Generation

In a vanilla GAN, the Generator model (\(G\)) and Discriminator model (\(D\)) are optimized together in a minimax game, where \(G\) aims at generating a sample so convincing, that \(D\) will not be able to distinguish whether it comes from a real or generated image distribution. On the other hand, \(D\) is being trained to discriminate between the two. Originally, a multilayer perceptron was proposed as a model architecture for both \(D\) and \(G\), although in theory any differentiable function could be used.

More formally, let \(p_{z}\) denote the prior distribution defined on the input noise vector \(z\). Then, the generator \(G(z)\) represents a function that maps this noisy random input to the generated image \(x\). The discriminator \(D(x)\) outputs a probability that \(x\) comes from the real data rather than the generator’s distribution \(p_{g}\). In this framework, \(D\) shall maximize the probability of guessing the correct label of both real and fake data, while \(G\) is trained to minimize \(log(1-D(G(z)))\). This two-player game corresponds to the following minimax value function:

\[\min_{G}\max_{D}V(D,G) = \mathop{{}\mathbb{E}}_{x \sim p_{data}(x)} [log(D(x))] + \mathop{{}\mathbb{E}}_{z \sim p_{z}(z)} [log(1-D(G(z)))]\]

Figure 3.13 depicts this process in a visual way.
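The value function \(V(D,G)\), which the discriminator tries to increase and the generator tries to decrease, can be estimated from samples. The following sketch does this for a one-dimensional toy problem with hand-written (not trained) functions for \(D\) and \(G\). All concrete distributions and functions are assumptions chosen for illustration.

```python
import numpy as np

def value_function(D, G, x_real, z):
    """Monte Carlo estimate of V(D, G) from real samples and noise samples."""
    return float(np.mean(np.log(D(x_real))) + np.mean(np.log(1.0 - D(G(z)))))

rng = np.random.default_rng(0)
x_real = rng.normal(loc=2.0, size=1000)  # samples from p_data
z = rng.normal(size=1000)                # samples from the prior p_z

# A generator that reproduces p_data and a discriminator that cannot
# tell real from generated data (it always outputs 0.5).
G_good = lambda z: z + 2.0
D_blind = lambda x: np.full_like(x, 0.5)
v_equilibrium = value_function(D_blind, G_good, x_real, z)

# A poor generator (samples around -2) against a discriminator that
# separates the two distributions well.
G_bad = lambda z: z - 2.0
D_sharp = lambda x: 1.0 / (1.0 + np.exp(-4.0 * x))
v_bad_generator = value_function(D_sharp, G_bad, x_real, z)

print(v_equilibrium, v_bad_generator)
```

When the discriminator can only guess, the value is \(2\log(0.5) = -\log 4\). A discriminator that easily separates real from generated samples pushes the value towards 0, which is what the generator tries to prevent.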

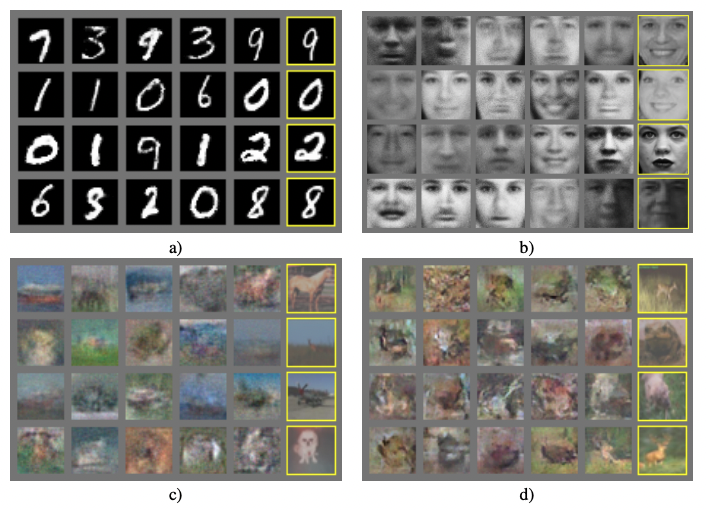

Some of the generated samples that had been achieved with this architecture already in 2014 can be seen in Figure 3.14.

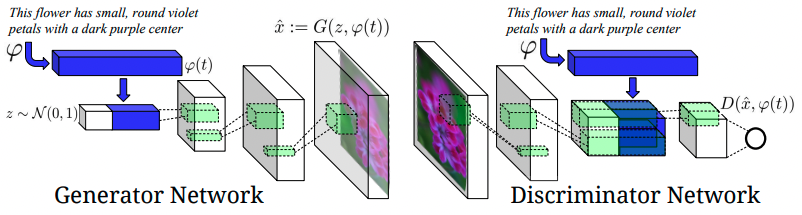

3.2.2.2 Conditioning on Text

So far, only image generation has been covered, completely ignoring textual input. S. E. Reed, Akata, Yan, et al. (2016) introduced an interesting concept of conditioning DC-GAN (GAN with CNNs as Generator and Discriminator) on textual embeddings. A separate model is trained and used for encoding the text. Then, the resulting embeddings are concatenated with the noise vector and fed into the Generator; the Discriminator takes the embeddings as an input as well. The resulting model is referred to as GAN-INT-CLS. Both abbreviations (INT and CLS) stand for specific training choices, which are going to be explained later in the chapter. The overview of the proposed architecture can be seen in Figure 3.15.

FIGURE 3.15: The proposed architecture of the convolutional GAN that is conditioned on text. Text encoding \(\varphi(t)\) is fed into both the Generator and the Discriminator. Before further convolutional processing, it is first projected to lower dimensionality in fully-connected layers and concatenated with image feature maps. Figure from S. E. Reed, Akata, Yan, et al. (2016).

Text embeddings

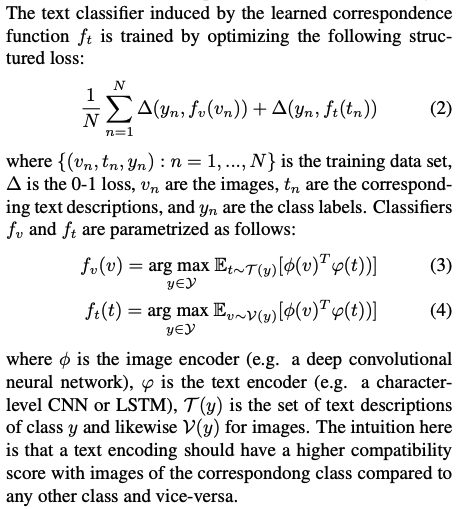

Since regular text embeddings are commonly trained in separation from visual modality simply by looking at the textual context, they are not well suited for capturing visual properties. This motivated S. E. Reed, Akata, Schiele, et al. (2016) to come up with structured joint embeddings of images and text descriptions. GAN-INT-CLS implements it in a way described in Figure 3.16.

FIGURE 3.16: Figure from S. E. Reed, Akata, Yan, et al. (2016).

GoogLeNet is being used as an image encoder \(\phi\). For text encoding \(\varphi(t)\), authors use a character-level CNN combined with RNN. Essentially, the objective of the training is to minimize the distance between the encoded image and text representations. The image encoder is then discarded and \(\varphi\) only is used as depicted in Figure 3.15.

GAN-CLS

CLS stands for Conditional Latent Space, which essentially means the GAN is conditioned on the embedded text. However, in order to fully grasp how exactly the model is conditioned on the input, we need to go beyond architectural choices. It is also crucial to present a specific training regime that was introduced for GAN-CLS and the motivation behind it.

One way to train the system is to view text-image pairs as joint observations and train the discriminator to classify the entire pair as real or fake. However, in such a case the discriminator does not have an understanding of whether the image matches the meaning of the text. This is because the discriminator does not distinguish between two types of error that exist, namely when the image is unrealistic or when it is realistic but the text does not match.

A proposed solution to this problem is to present the discriminator with three observations at a time, all of which are included later in the loss function. These three are: {real image with right text}, {real image with wrong text}, {fake image with right text}. The intention is that the discriminator should classify them as {true}, {false}, {false}, respectively.
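A hedged sketch of how the three observations could enter the discriminator objective is shown below. It takes discriminator scores (probabilities of "true") as given numbers rather than computing them with a network, and weighting the two "false" cases by one half each is an assumption of this sketch.

```python
import numpy as np

def gan_cls_discriminator_objective(s_real, s_wrong, s_fake):
    """Objective the discriminator tries to maximize.

    s_real:  D(real image, right text)  -> should be close to 1
    s_wrong: D(real image, wrong text)  -> should be close to 0
    s_fake:  D(fake image, right text)  -> should be close to 0
    """
    s_real, s_wrong, s_fake = map(np.asarray, (s_real, s_wrong, s_fake))
    per_example = np.log(s_real) + 0.5 * (np.log(1.0 - s_wrong) + np.log(1.0 - s_fake))
    return float(per_example.mean())

# A discriminator that judges realism and text match correctly.
matching_aware = gan_cls_discriminator_objective([0.9], [0.1], [0.1])
# A discriminator that only judges realism and ignores the text:
# it also accepts the real image paired with the wrong caption.
text_blind = gan_cls_discriminator_objective([0.9], [0.9], [0.1])

print(matching_aware, text_blind)
```

The text-blind discriminator is penalized through the {real image with wrong text} term, which is exactly the second type of error described above.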

GAN-INT

The motivation behind this concept comes from the fact that interpolating between text embeddings tends to create observation pairs that are still close to the real data manifold. Therefore, generating additional synthetic text embeddings and using them instead of real captions in the training process might help in the sense that it works as a form of data augmentation and helps regularize the training process. Figure 3.17 might be helpful for developing the intuition behind the interpolation process.

FIGURE 3.17: Interpolating between sentences. Figure from S. E. Reed, Akata, Yan, et al. (2016).
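The interpolation itself is a convex combination of two caption embeddings. In the sketch below, random vectors stand in for real text embeddings, each embedding in a batch is paired with a randomly chosen partner from the same batch, and the mixing weight `beta` is fixed at 0.5. All of these are illustrative assumptions.

```python
import numpy as np

def interpolate_embeddings(emb, beta=0.5, rng=None):
    """Mix every text embedding with a randomly chosen partner from the batch."""
    rng = np.random.default_rng() if rng is None else rng
    partner = emb[rng.permutation(len(emb))]
    return beta * emb + (1.0 - beta) * partner

rng = np.random.default_rng(0)
text_emb = rng.normal(size=(6, 16))  # toy stand-in for 6 caption embeddings
synthetic = interpolate_embeddings(text_emb, beta=0.5, rng=rng)
print(synthetic.shape)
```

The synthetic embeddings have no corresponding real image, so they can only be used for the generator side of training, where they act as additional conditioning inputs.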

Results

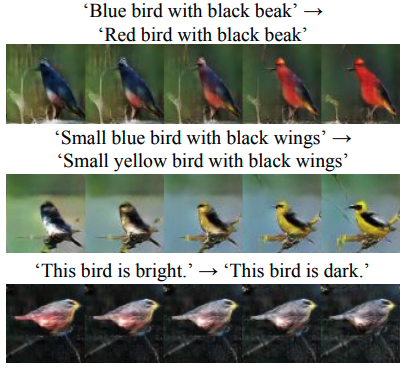

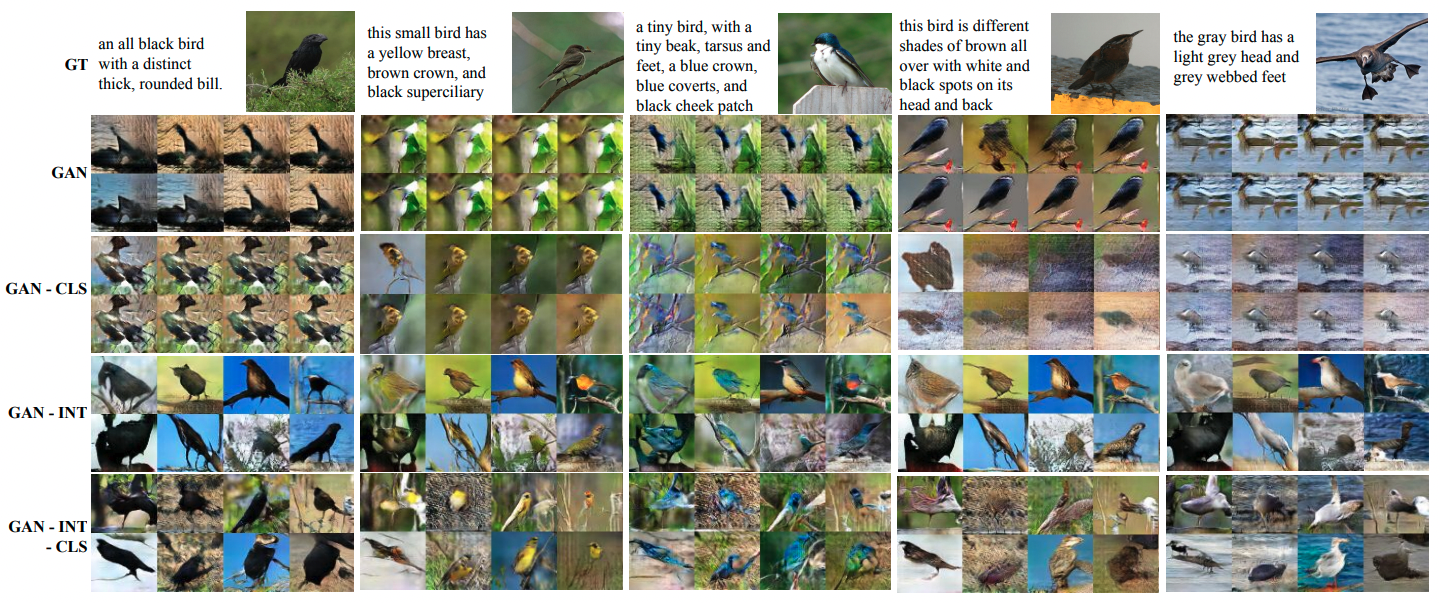

The model achieves the best performance when both of the mentioned methods are in use (GAN-INT-CLS). Models prove to successfully transfer style (pose of the objects) and background from the training data when trained on CUB (birds) and Oxford-102 (flowers) datasets. They also show interesting zero-shot abilities, meaning they can generate observations from unseen test classes (Figure 3.18). When trained on MS-COCO, GAN-CLS proves its potential to generalize over many domains, although the results are not always coherent (Figure 3.19).

FIGURE 3.18: Zero-shot generated birds using GAN, GAN-CLS, GAN-INT, GAN-INT-CLS. Figure from S. E. Reed, Akata, Yan, et al. (2016).

FIGURE 3.19: Generated images using GAN-CLS on MS-COCO validation set. Figure from S. E. Reed, Akata, Yan, et al. (2016).

3.2.2.3 Further GAN-like development

Generative Adversarial Networks were a leading approach for text-to-image models for most of the field’s short history. In the following years after the introduction of GAN-INT-CLS, new concepts were emerging, trying to push the results further. Many of them had a GAN architecture as their core part. In this section, a few such ideas are presented. The intention is to quickly skim through the most important ones. A curious reader should follow the corresponding papers.

StackGAN

Zhang et al. (2016) introduced StackGAN. The main contribution of the paper, which also found its place in other researchers’ works, was the idea to stack more than one generator-discriminator pair inside the architecture. The Stage-II (second pair) generator is supposed to improve the results from Stage-I, taking into account only:

- text embedding (same as Stage-I)

- image generated in Stage-I

without a random vector. Deliberate omission of the random vector results in the generator directly working on improving the results from Stage-I. The purpose is also to increase resolution (here from 64x64 to 256x256). The authors obtained great results already with two stages; in principle, however, the architecture allows for stacking many of them.

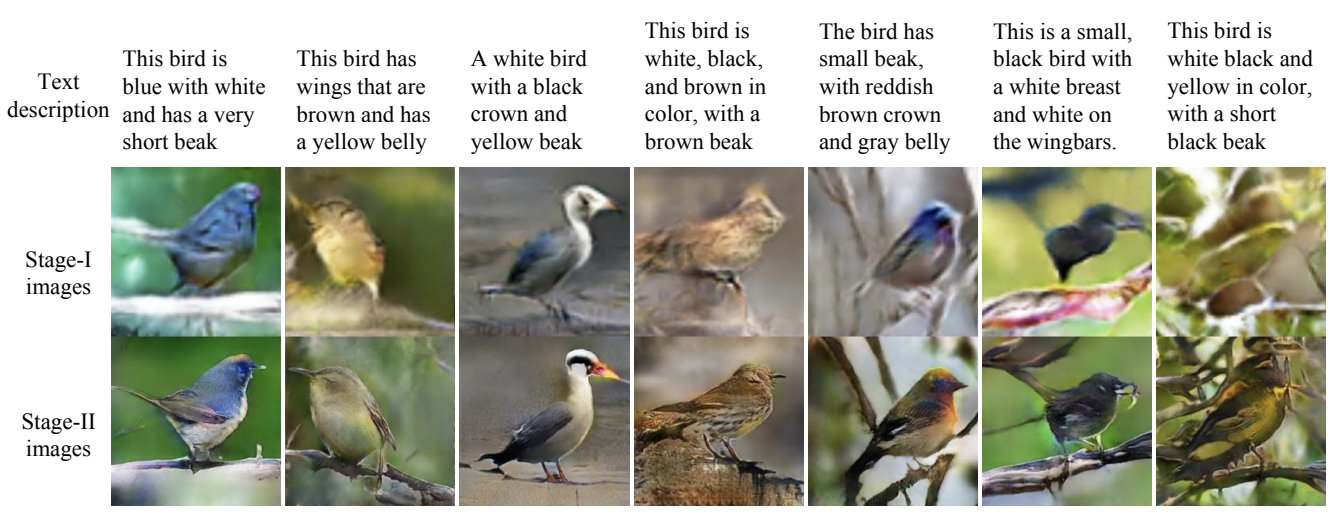

FIGURE 3.20: (ref:stackgan)

AttnGAN

It is 2017 and many researchers believe attention is all they need (Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, et al. 2017b). Probably the first use of an attention mechanism in text-to-image generation was by Xu et al. (2017). The authors combined the idea with what StackGAN proposed and used three stages (generators \(G_{0}\), \(G_{1}\) and \(G_{2}\)). However, this time the first layers of a particular generator attend to word feature vectors. This mechanism not only helps control how particular areas of the image are being improved by consecutive generators but also allows for visualizing attention maps.

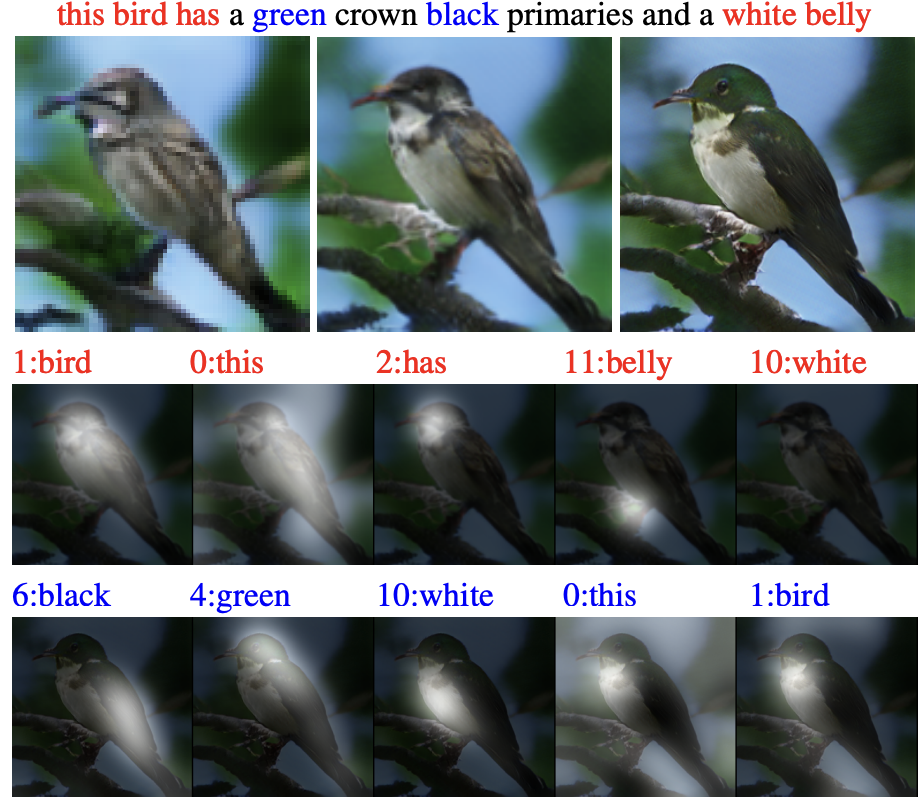

FIGURE 3.21: Images generated by \(G_{0}\), \(G_{1}\), \(G_{2}\). Two bottom rows show 5 most attended words by \(G_{1}\) and \(G_{2}\) respectively. Figure from Xu et al. (2017).

DM-GAN

Another important milestone was DM-GAN (Dynamic Memory GAN) (Zhu et al. 2019). At that time, models were primarily focusing on generating the initial image and then refining it to a high-resolution one (as e.g. StackGAN does). However, such models heavily depend on the quality of the first image initialization. This problem was the main motivation for the authors to come up with a mechanism to prevent it. DM-GAN proposes a dynamic memory module, which has two main components. First, its memory writing gate helps select the most important information from the text based on the initial image. Second, a response gate merges the information from image features with the memories. Both of these help refine the initial image much more effectively.

DF-GAN

Last but not least, DF-GAN (Deep Fusion GAN) (Tao et al. 2020) improves the results by proposing three concepts. One-Stage Text-to-Image Backbone focuses on providing an architecture that is capable of abandoning the idea of multiple stacked generators and using a single one instead. It achieves that by a smart combination of a couple of factors, i.a. hinge loss and the use of residual blocks. Additionally, Matching-Aware Gradient Penalty helps achieve high semantic consistency between text and image and regularizes the learning process. Finally, One-Way Output helps the process converge more effectively.

3.2.3 Dall-E 1

OpenAI’s Dall-E undoubtedly took the text-to-image field to another level. For the first time, a model showed great zero-shot capabilities, comparable to previous domain-specific models. To achieve that, an unprecedented scale of the dataset and training process was needed. 250 million text-image pairs were collected for that purpose, which enabled training of a 12-billion parameter version of the model. Unfortunately, Dall-E is not publicly available and follows the most recent trend of closed-source models. Or, to put it more precisely, it started this trend, and GLIDE, Dall-E 2, Imagen, Parti and others followed. Nevertheless, Dall-E’s inner workings are described in Ramesh, Pavlov, et al. (2021b) and this section will try to explain its most important parts. However, before that, it is crucial to understand one of the fundamental concepts that has been around in the field of generative models for already quite some time - namely Variational Autoencoders.

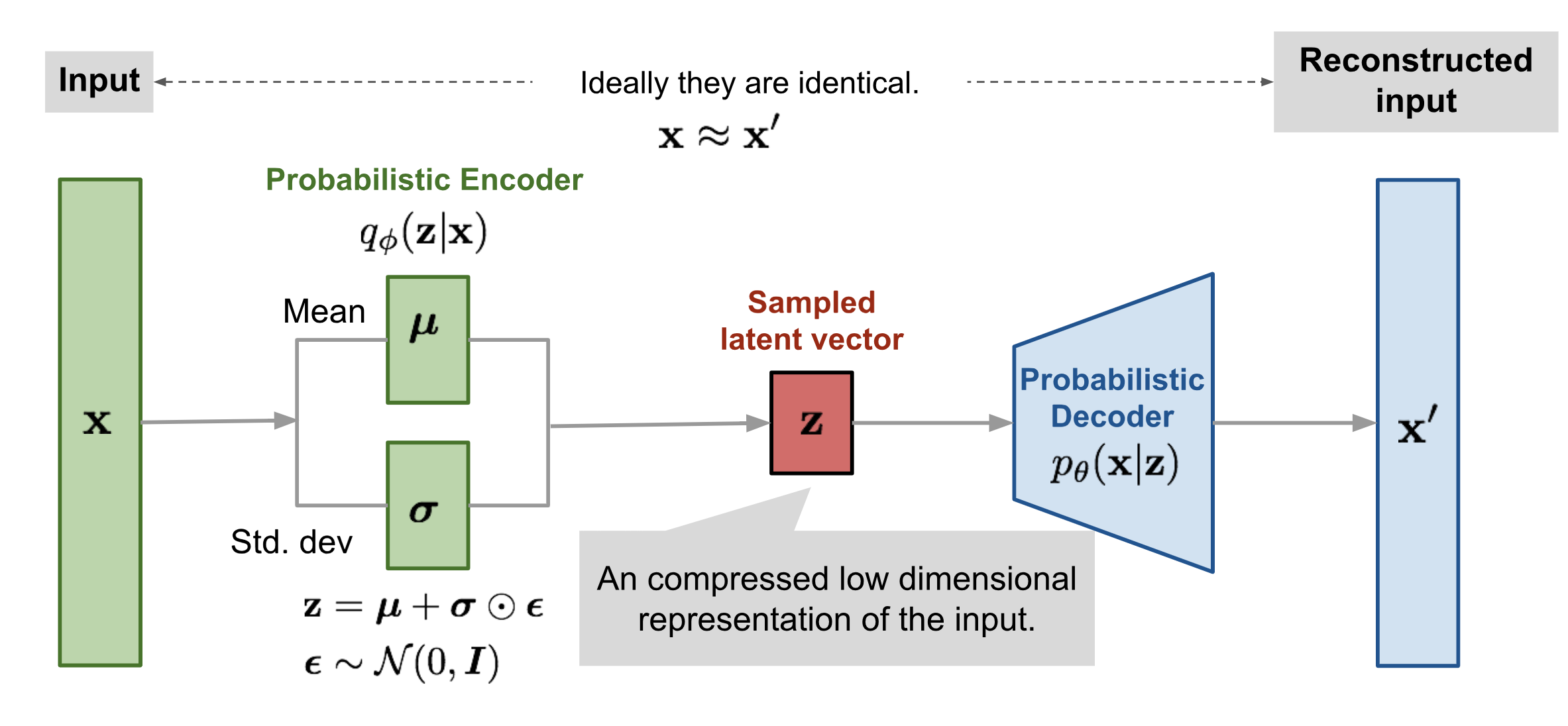

Variational Autoencoder (VAE)

The regular Autoencoder architecture aims at finding an identity function that is capable of finding a meaningful representation of the data in lower-dimensional space and then reconstructing it. It is considered an unsupervised learning method for dimensionality reduction, however, trained in a supervised regime with the data itself being the label. The component performing the reduction is called an encoder, while the part responsible for the reconstruction is called a decoder. The idea behind Variational Autoencoder (Kingma and Welling 2013) is similar, however, instead of learning the mapping to a static low-dimensional vector, the model learns its distribution. This design equips the decoder part with desired generative capabilities, as sampling from the latent low-dimensional space will result in varying data being generated. The architecture is depicted in Figure 3.22.

FIGURE 3.22: Variational (probabilistic) Autoencoder architecture. Figure from Weng (2018).

\(q_{\phi}(z|x)\) denotes the encoder under the assumption that \(z\) comes from a multivariate Gaussian. \(\mu\) and \(\sigma\) are being learned. The reconstruction process is modelled by the conditional probability \(p_{\theta}(x|z)\), given the sampled latent vector \(z\).
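How a latent vector is drawn from the encoder's output can be sketched as follows. The sketch uses the reparameterization \(z = \mu + \sigma \odot \epsilon\) and the closed-form KL divergence to a standard normal prior, both from Kingma and Welling (2013) and not spelled out in this chapter. The values of \(\mu\) and \(\log \sigma^2\) are made up instead of coming from a trained encoder.

```python
import numpy as np

def sample_latent(mu, log_var, rng):
    # z = mu + sigma * eps with eps ~ N(0, I); sigma = exp(0.5 * log_var).
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    # KL( N(mu, diag(sigma^2)) || N(0, I) ), summed over latent dimensions.
    return 0.5 * np.sum(np.exp(log_var) + mu**2 - 1.0 - log_var, axis=-1)

rng = np.random.default_rng(0)
mu = np.array([1.0, -2.0])               # hypothetical encoder output for one input x
log_var = np.log(np.array([0.25, 0.25])) # i.e. sigma = 0.5 in both dimensions

# Draw many latent vectors for the same input: each would decode to a
# slightly different reconstruction.
z = sample_latent(np.tile(mu, (10000, 1)), np.tile(log_var, (10000, 1)), rng)
print(z.mean(axis=0), z.std(axis=0))
print(kl_to_standard_normal(mu, log_var))
```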

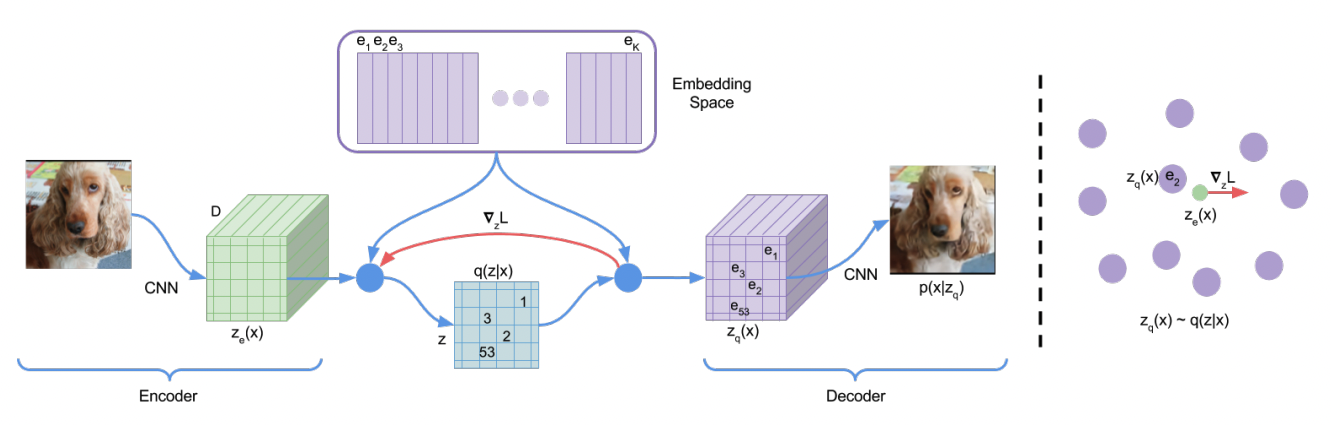

VQ-VAE / dVAE

The VQ-VAE (Vector Quantized VAE) (Oord, Vinyals, and Kavukcuoglu 2017) differs from the regular VAE in the way it approaches encoding the latent space. Instead of mapping data into a continuous distribution, the Vector Quantized version does it in a discrete way. This is motivated by the fact that for many data modalities it is more natural to represent them in a discrete way (e.g. speech, human language, reasoning about objects in images, etc.). VQ-VAE achieves that by using a separate codebook of vectors. The architecture is depicted in Figure 3.23.

FIGURE 3.23: VQ-VAE architecture. Figure from Oord, Vinyals, and Kavukcuoglu (2017).

The idea is to map the output of the encoder to one of the \(K\) vectors in the codebook. This process is called quantization and essentially means finding the vector that is the nearest neighbour to the encoder’s output (in the sense of Euclidean distance). From this point on, the newly found vector from the codebook is used instead. The codebook itself is also subject to the learning process. One could argue that passing gradients during the training through such a discrete system might be problematic. VQ-VAE overcomes this problem by simply copying gradients from the decoder’s input to the encoder’s output. A great explanation of the training process and further mathematical details can be found in Weng (2018) and Snell (2021).
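The nearest-neighbour lookup can be written in a few lines of NumPy. The sketch below covers only the forward quantization step with a tiny hand-written codebook. The gradient copying is mentioned in a comment, since plain NumPy has no automatic differentiation.

```python
import numpy as np

def quantize(z_e, codebook):
    """Replace each encoder output by its nearest codebook vector.

    z_e: (n, D) encoder outputs, codebook: (K, D) codebook vectors.
    Returns the quantized vectors (n, D) and the chosen indices (n,).
    """
    sq_dist = ((z_e[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)  # (n, K)
    idx = sq_dist.argmin(axis=1)
    # In an autodiff framework, the backward pass would treat this lookup as
    # the identity, so that gradients flow from the decoder input to z_e.
    return codebook[idx], idx

codebook = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])  # K = 3, D = 2
z_e = np.array([[0.1, -0.2], [0.9, 1.2], [4.0, 4.5]])
z_q, idx = quantize(z_e, codebook)
print(idx)
```

The indices `idx` are the discrete tokens: an image encoded this way becomes a grid of integers that refer to codebook entries.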

Dall-E, however, is using what is called dVAE. Essentially, it is a VQ-VAE with a couple of details changed. In short, the main difference is that instead of learning a deterministic mapping from the encoder’s output to the codebook, it produces probabilities of a latent representation over all codebook vectors.

Dall-E system

Dall-E is composed of two stages. The above introduction of VQ-VAE was necessary to understand the first one. Essentially, it consists of training a dVAE to compress 256x256 images into a 32x32 grid of tokens. This model will play a crucial role in the second stage.

The second stage is about learning the prior distribution of text-image pairs. First, the text is byte-pair (Sennrich, Haddow, and Birch 2015a) encoded into a maximum of 256 tokens, where the vocabulary is of size 16384. Next, the image representation encoded by the previously trained dVAE is unrolled (from a 32x32 grid to 1024 tokens) and concatenated to the text tokens. This sequence (of 256+1024 tokens) is used as an input for a huge transformer-like architecture. Its goal is to autoregressively predict the next token.
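The construction of the input sequence can be sketched as follows. The token counts and the text vocabulary size are taken from the description above; the padding id, the offset applied to image tokens so that their ids do not collide with text ids, and the function name `build_sequence` are assumptions made for illustration. The image codebook size of 8192 is the value reported in the Dall-E paper and is not stated in this section.

```python
import numpy as np

TEXT_LEN = 256            # maximum number of text tokens
TEXT_VOCAB = 16384        # BPE vocabulary size
GRID = 32                 # the dVAE produces a GRID x GRID grid of tokens
IMAGE_LEN = GRID * GRID   # 1024 image tokens after unrolling
IMAGE_VOCAB = 8192        # image codebook size (from the Dall-E paper)
PAD_ID = 0                # hypothetical padding id

def build_sequence(text_tokens, image_grid, image_offset=TEXT_VOCAB):
    """Concatenate padded text tokens with the unrolled image tokens."""
    text = list(text_tokens)[:TEXT_LEN]
    text = text + [PAD_ID] * (TEXT_LEN - len(text))
    # Unroll the grid row by row and shift ids into a separate range (assumption).
    image = np.asarray(image_grid).reshape(-1) + image_offset
    return np.concatenate([np.array(text, dtype=np.int64), image])

rng = np.random.default_rng(0)
text_tokens = [17, 4096, 250]   # hypothetical BPE ids
image_grid = rng.integers(0, IMAGE_VOCAB, size=(GRID, GRID))
sequence = build_sequence(text_tokens, image_grid)
```

The resulting `sequence` has 256 + 1024 = 1280 entries, matching the length of the transformer input described above.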

During inference time, the text caption is again encoded into 256 tokens at most. The generation process starts with predicting all of the next 1024 image-related tokens. They are later decoded with the dVAE decoder that was trained in the first step. Its output represents the final image.
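A rough sketch of this generation loop is given below. The trained transformer is replaced by a hypothetical stand-in (`dummy_next_token_logits`) that returns random scores, so the output is meaningless; the point is only the control flow of sampling 1024 image tokens one after another. The image vocabulary size of 8192 is the value reported in the Dall-E paper, and the final decoding step with the dVAE decoder is omitted.

```python
import numpy as np

TEXT_LEN, IMAGE_LEN, IMAGE_VOCAB = 256, 1024, 8192

def dummy_next_token_logits(sequence, rng):
    """Hypothetical stand-in for the trained transformer: random logits."""
    return rng.normal(size=IMAGE_VOCAB)

def generate_image_tokens(text_tokens, rng):
    """Autoregressively sample IMAGE_LEN image tokens after the text tokens."""
    sequence = list(text_tokens)
    for _ in range(IMAGE_LEN):
        logits = dummy_next_token_logits(sequence, rng)
        probs = np.exp(logits - logits.max())
        probs /= probs.sum()
        # Each new token is sampled given everything generated so far.
        sequence.append(int(rng.choice(IMAGE_VOCAB, p=probs)))
    image_tokens = np.array(sequence[len(text_tokens):])
    return image_tokens.reshape(32, 32)   # grid that the dVAE decoder would consume

rng = np.random.default_rng(0)
text_tokens = [0] * TEXT_LEN              # hypothetical encoded caption
grid = generate_image_tokens(text_tokens, rng)
```

In the real system, the returned 32x32 grid of token ids would be passed to the dVAE decoder from the first stage to obtain the final image.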

Results

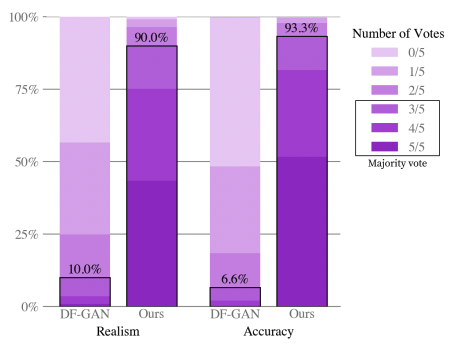

Results achieved with the original Dall-E attracted so much attention mainly due to their diversity and zero-shot capabilities. Dall-E was capable of producing better results compared to previous state-of-the-art models which were trained on data coming from the same domain as data used for evaluation. One comparison can be seen in Figure 3.24.

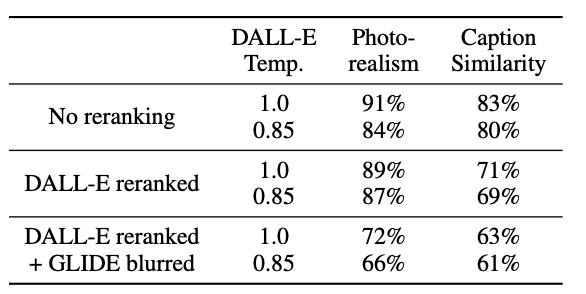

FIGURE 3.24: Human evaluation of Dall-E vs DF-GAN on text captions from the MS-COCO dataset. When asked for realism and caption similarity, evaluators preferred Dall-E’s results over 90\% of the time. Figure from Ramesh, Pavlov, et al. (2021b).

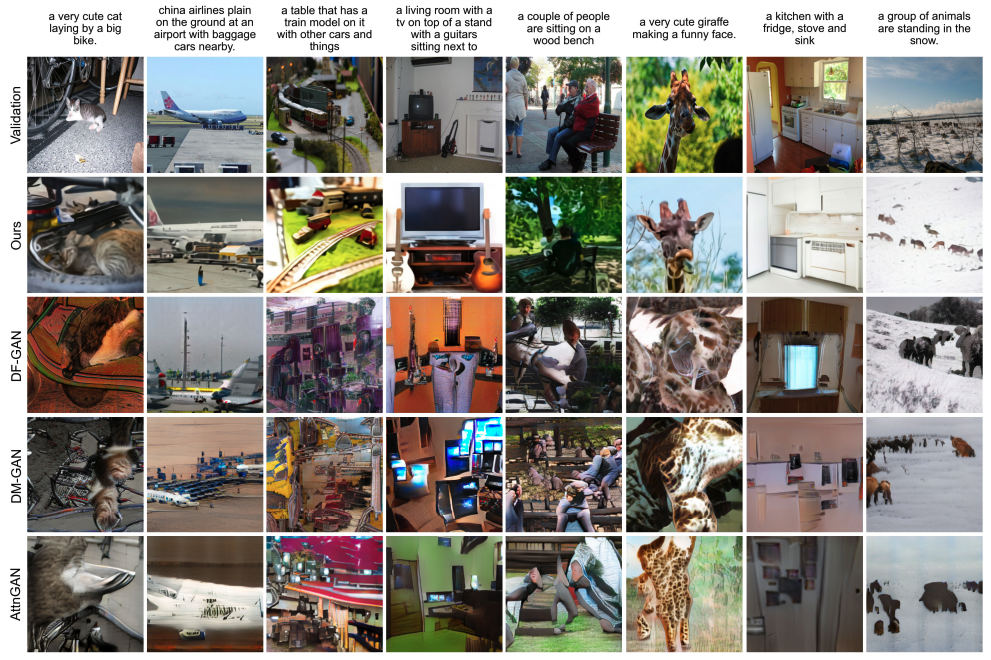

Outputs of some of the prior approaches described in this chapter compared with Dall-E can be seen in Figure 3.25.

FIGURE 3.25: Comparison of the results from Dall-E vs prior works on MS-COCO. Dall-E’s outputs are chosen as the best out of 512 images, ranked by a contrastive model. Figure from Ramesh, Pavlov, et al. (2021b).

Limitations

Although Dall-E made a huge step forward in text-to-image modelling, it still showed multiple flaws. First, photorealism of the outputs is still relatively low. In other words, when prompted for images containing realistic situations, it is rarely capable of deceiving human evaluators. Second, the model has evident problems with understanding relatively complex abstractions, such as text inside an image, or relative object positions in the scene.

3.2.4 GLIDE

Introduced by Nichol et al. (2021b), GLIDE started an era of huge-scale diffusion models. The concept of diffusion had already been used in the area of Deep Learning for some time. However, the authors of GLIDE took a step further and combined it with text-based guidance, which is supposed to steer the learning process in the direction of the text’s meaning. This powerful method was shown to achieve outstanding results which remain competitive with current state-of-the-art models at the time of writing.

Diffusion models

Before understanding the inner workings of GLIDE, it is important to introduce the core concept that is driving it, namely diffusion. The idea of diffusion originates from physics. In short, it corresponds to the process of diffusing particles, for example of one fluid in another. Normally it has a unidirectional character, in other words, it cannot be reversed. However, as Sohl-Dickstein et al. (2015) managed to show, and Ho, Jain, and Abbeel (2020a) later improved, if the data diffusion process is modelled as a Markov chain with Gaussian noise being added in consecutive steps, it is possible to learn how to reverse it. This reversed process is exactly how images are generated by the model from pure random noise.

Let us construct a Markov chain, where the initial data point is denoted by \(x_{0}\). Over a number of consecutive steps, Gaussian noise is added to the data. The distribution of the data at step \(t\), given the data at step \(t-1\), can be characterized in the following way:

\[q(x_{t}|x_{t-1}):=N(x_{t};\sqrt{\alpha_{t}}x_{t-1},(1-\alpha_{t})I)\]

where \((1-\alpha_{t})\) parametrizes the magnitude of the noise being added at each step. Now, if \(x_{t-1}\) was to be reconstructed from \(x_{t}\), a model needs to learn to predict estimates of gradients from the previous steps. The probability distribution of previous steps can be estimated as follows:

\[p_{\theta}(x_{t-1}|x_{t})=N(x_{t-1};\mu_{\theta}(x_{t}),\Sigma_{\theta}(x_{t}))\]

where the mean function \(\mu_{\theta}\) was proposed by Ho, Jain, and Abbeel (2020a). For a more detailed explanation of how this is later parametrized and trained, one could follow Weng (2021).
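The forward (noising) process \(q(x_{t}|x_{t-1})\) defined above can be simulated directly. The sketch below is a toy illustration: the number of steps and the linear noise schedule are assumptions (they follow the values commonly associated with Ho, Jain, and Abbeel (2020a), but are not stated in this section), and the 8x8 array merely stands in for an image.

```python
import numpy as np

def forward_step(x_prev, alpha_t, rng):
    """Sample x_t from N(sqrt(alpha_t) * x_{t-1}, (1 - alpha_t) * I)."""
    noise = rng.standard_normal(x_prev.shape)
    return np.sqrt(alpha_t) * x_prev + np.sqrt(1.0 - alpha_t) * noise

rng = np.random.default_rng(0)
T = 1000                                     # assumed number of diffusion steps
alphas = 1.0 - np.linspace(1e-4, 0.02, T)    # assumed linear noise schedule

x0 = rng.uniform(-1.0, 1.0, size=(8, 8))     # toy "image" x_0
x = x0.copy()
for alpha_t in alphas:
    x = forward_step(x, alpha_t, rng)        # one step of the Markov chain

# Fraction of the original signal scale that survives after T steps.
signal_scale = np.sqrt(np.prod(alphas))
```

With this schedule `signal_scale` is close to zero, so after the last step `x` is practically indistinguishable from pure Gaussian noise. The learned reverse process \(p_{\theta}(x_{t-1}|x_{t})\) has to undo exactly this chain, one step at a time.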

GLIDE system

GLIDE can essentially be broken down into two parts. The first of them is the pretrained Transformer model, which in principle is responsible for creating the text embeddings. The last token embedding is used as a class embedding (text representation) in later stages. Additionally, all tokens from the last embedding layer are being used (attended to) by all attention layers in the diffusion model itself. This makes the model aware of the text meaning while reconstructing the previous step in the Markov chain.

The second component of GLIDE is the diffusion model itself. A U-Net-like architecture with multiple attention blocks is used here. This part’s sole goal is to model \(p_{\theta}(x_{t-1}|x_{t},y)\), where \(y\) corresponds to the last token embedding mentioned above. Or, to put it differently, to predict \(\epsilon_{\theta}(x_{t}|y)\), since the problem can be reframed as calculating the amount of noise being added at each step.

Additionally, to make the model even more aware of the text’s meaning, guidance is used at inference time. In short, the idea is to control the direction of the diffusion process. The authors test two different approaches. First, they try guidance with the use of a separate classifier, OpenAI’s CLIP in this case. However, better results were in general achieved by the classifier-free guidance process. The idea is to produce two different noise predictions at each step. One is conditioned on text, while the other one is not. The difference between them is calculated and then, after significant scaling, added to the prediction obtained without conditioning. This way, the model speeds up the progression of the image towards the meaning of the text. This process can be written as:

\[\hat{\epsilon}_\theta(x_{t}|y)=\epsilon_{\theta}(x_{t}|\emptyset)+s\cdot(\epsilon_{\theta}(x_{t}|y)-\epsilon_{\theta}(x_{t}|\emptyset))\]

where \(s\) denotes the parameter for scaling the difference between the two predictions.
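The guidance formula translates into a single line of code. The sketch below uses small hypothetical vectors in place of the two outputs of the diffusion model; the function name `guided_noise` is likewise an assumption.

```python
import numpy as np

def guided_noise(eps_cond, eps_uncond, s):
    """Classifier-free guidance: eps_uncond + s * (eps_cond - eps_uncond)."""
    return eps_uncond + s * (eps_cond - eps_uncond)

# Hypothetical model outputs for one step, with and without the text condition.
eps_uncond = np.array([0.0, 1.0, -1.0])
eps_cond = np.array([1.0, 1.0, 0.0])

no_guidance = guided_noise(eps_cond, eps_uncond, s=0.0)   # equals eps_uncond
plain_cond = guided_noise(eps_cond, eps_uncond, s=1.0)    # equals eps_cond
amplified = guided_noise(eps_cond, eps_uncond, s=3.0)     # pushed past eps_cond
```

Setting \(s=1\) recovers the ordinary text-conditioned prediction, while values above 1 extrapolate beyond it in the direction indicated by the text.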

Results

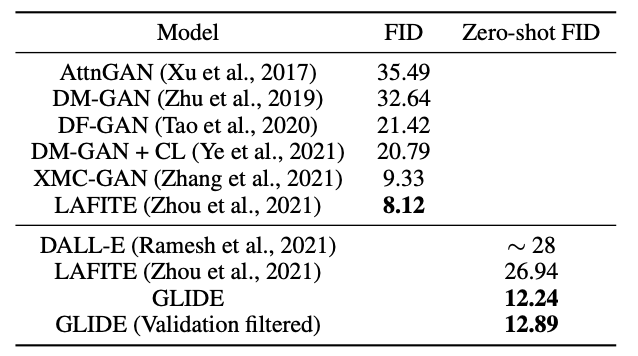

GLIDE achieves significantly more photorealistic results compared to its predecessors. FID scores reported on the MS-COCO 256x256 dataset can be seen in Figure 3.26. It is worth noting that GLIDE was not trained on this dataset, hence its zero-shot capabilities are even more impressive.

Results are also preferred by human evaluators in terms of photorealism and the similarity of the image to its caption. A comparison to DALL-E 1 results can be seen in Figure 3.27.

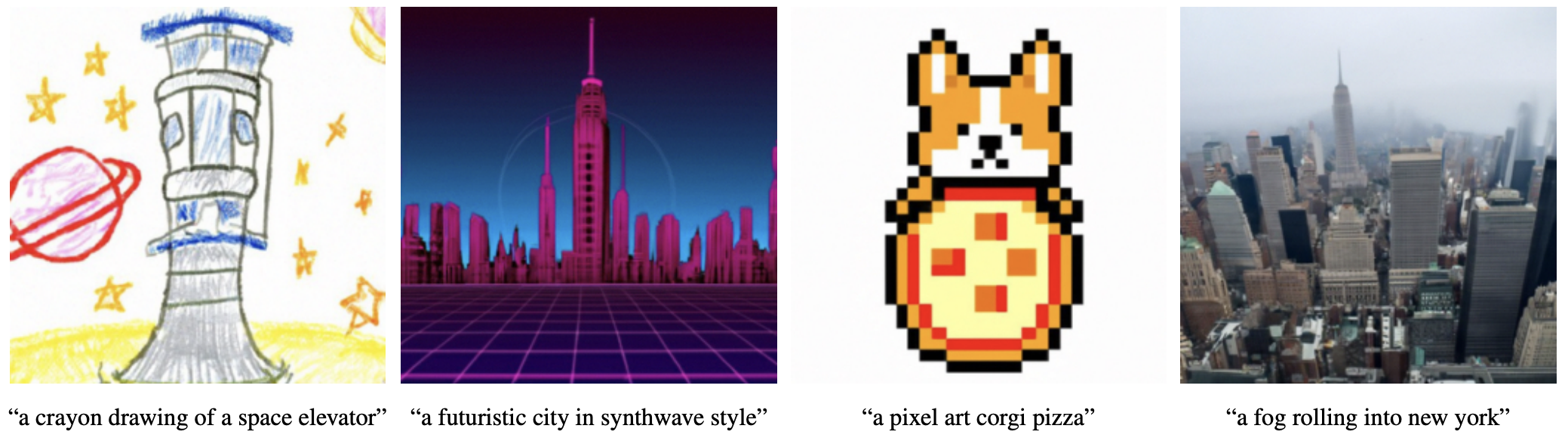

Finally, some of the cherry-picked images together with their corresponding captions can be seen in Figure 3.28.

FIGURE 3.28: Samples from GLIDE with classifier-free-guidance and s=3. Figure from Nichol et al. (2021b).

Limitations

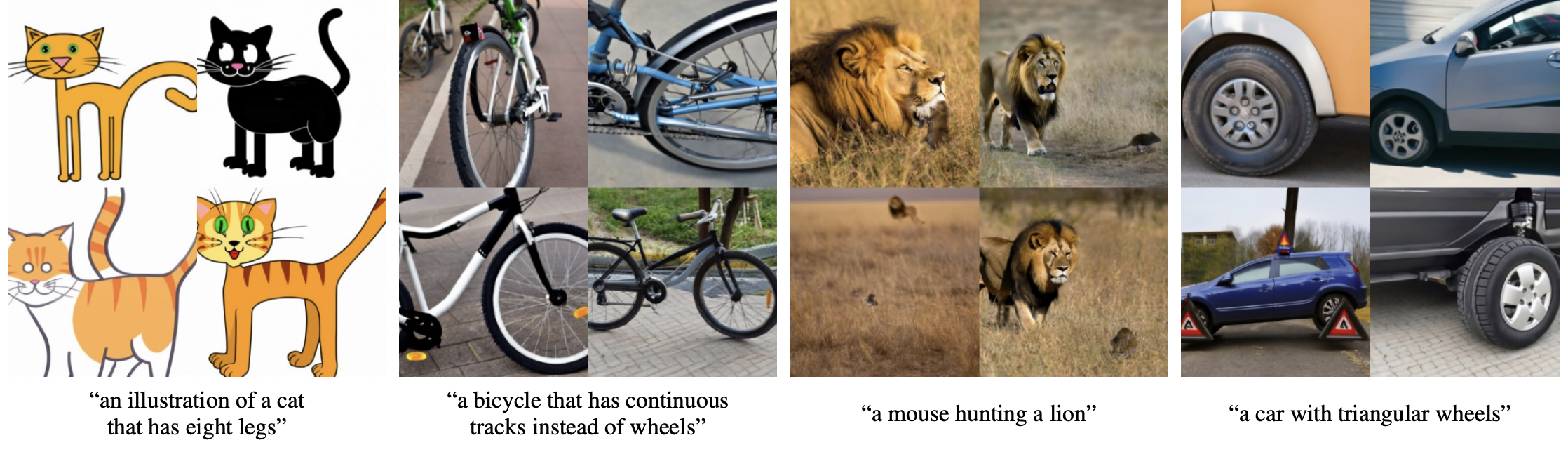

GLIDE suffers from two problems. First, it fails when presented with a complex or unusual text prompt. A few examples can be seen in Figure 3.29. Second, the model is relatively slow at inference time (much slower than GANs). This is caused by the sequential character of the architecture, where consecutive steps of the Markov chain reconstruction cannot simply be parallelized.

3.2.5 Dall-E 2 / unCLIP

The contribution that probably attracted the most attention in the field is known under the name Dall-E 2 (Ramesh, Dhariwal, et al. 2022a). For the first time, the wider public took an interest in its potential applications. This might be due to the strong PR from its authors, namely OpenAI. Dall-E 2, also known as just Dall-E, or unCLIP, has been advertised as a successor of Dall-E 1, whose results it significantly improved upon. In reality, the architecture and the results it achieved are much more similar to those of GLIDE. Additionally, social media has been flooded with images generated by the model. This was possible thanks to OpenAI giving access to everybody who was interested and patient enough to get through a waiting list. However, the model itself again remains unpublished. Another factor that might have contributed to Dall-E’s success was its inpainting and outpainting capabilities, although it is worth mentioning that these were already possible with GLIDE.

In essence, UnCLIP is a very smart combination of prior work from OpenAI that was re-engineered and applied in a novel way. Nevertheless, the model represents a significant leap forward, which is why it cannot be omitted in this chapter.

Dall-E 2 system

UnCLIP consists of two components: prior and decoder. Let \(x\) be the image and \(y\) its caption. \(z_{i}\) and \(z_{t}\) are CLIP image and text embedding of this \((x, y)\) pair. Then, prior \(P(z_{i}|y)\) is responsible for producing CLIP image embeddings conditioned on the text caption. A decoder \(P(x|z_{i},y)\) outputs an image conditioned on the CLIP image embedding and, again, the text caption itself.
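The way the two components fit together can be written as a factorization of the text-conditional image distribution. It follows the reasoning given by Ramesh, Dhariwal, et al. (2022a), restated here in the notation of this section:

\[P(x|y)=P(x,z_{i}|y)=P(x|z_{i},y)\,P(z_{i}|y)\]

The first equality relies on \(z_{i}\) being a deterministic function of \(x\) (it is obtained by passing the image through the CLIP image encoder), and the second is the chain rule. Sampling an image for a caption \(y\) therefore amounts to first drawing \(z_{i}\) from the prior and then drawing \(x\) from the decoder.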

For the prior, the authors try two different approaches, namely autoregressive and diffusion models. The latter ended up yielding slightly better results. The diffusion prior is a Transformer taking as input a special sequence consisting of the encoded text prompt, the CLIP text embedding, an embedding for the diffusion step, and a noised CLIP image embedding.

The decoder consists of diffusion models again. Firstly, a GLIDE-like model takes a CLIP image embedding as its \(x_{t}\) instead of the pure noise that was used in its original version. Similarly to the original GLIDE, classifier-free guidance is applied, however with slight differences. Lastly, two diffusion upsampler models are trained to bring images first from 64x64 to 256x256, and then from 256x256 to 1024x1024 resolution. The authors found no benefit in conditioning these models on text captions. Finally, unCLIP can be summarized as a mixture of GLIDE and CLIP with a lot of engineering behind it.

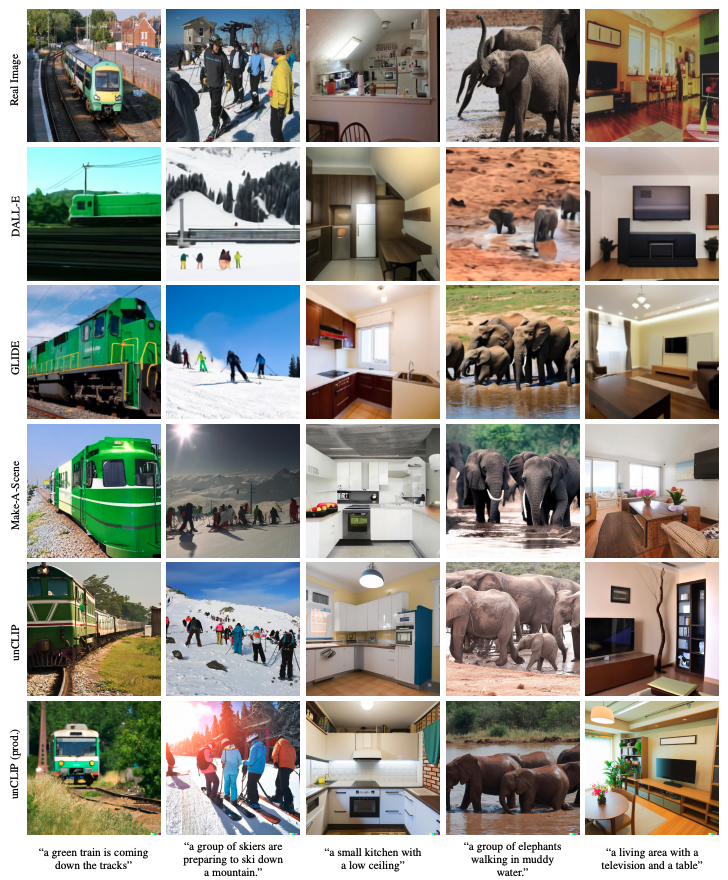

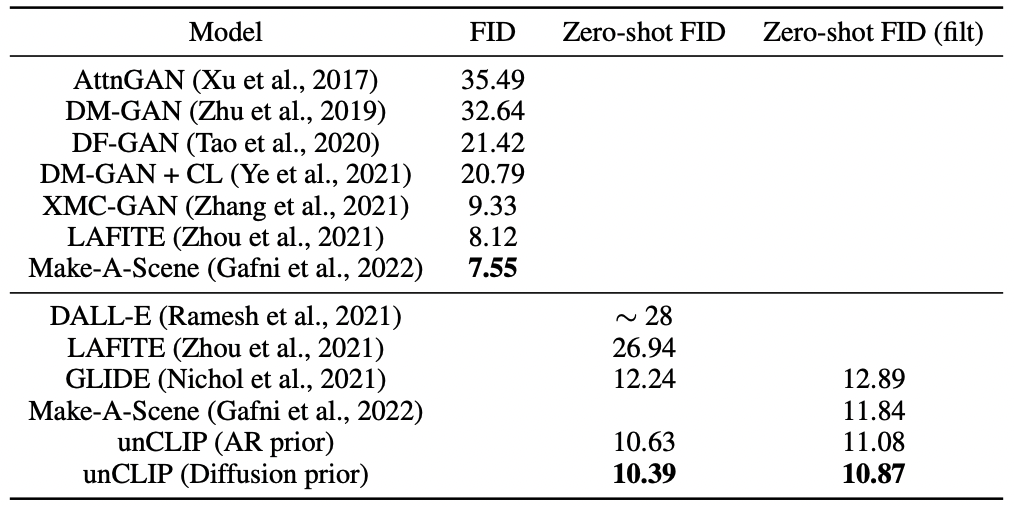

Results

When compared to GLIDE, unCLIP shows it is capable of representing a wider diversity of the data, while achieving a similar level of photorealism and caption similarity. Comparison to previous works on the MS-COCO dataset shows that unCLIP achieves unprecedented FID (Figure 3.30). A few output examples calculated on MS-COCO captions can be found in Figure 3.31.

FIGURE 3.30: Comparison of FID on MS-COCO. The best results for unCLIP were reported with the guidance scale of 1.25. Figure from Ramesh, Dhariwal, et al. (2022a).

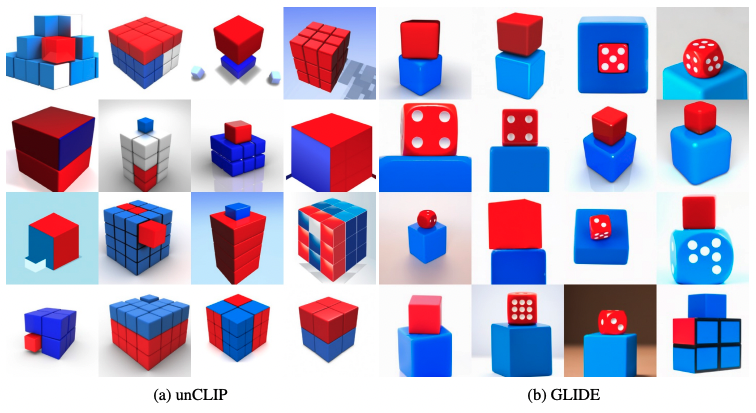

Limitations

UnCLIP suffers from very similar problems to those of its predecessor GLIDE. First, compositionality in the images is sometimes confused by the model. Failure cases can be seen in Figure 3.32. Second, UnCLIP struggles with generating coherent text inside an image (Figure 3.33). The authors hypothesize that using CLIP embeddings, although improving diversity, might be responsible for making these problems more evident than in GLIDE. Lastly, UnCLIP often fails to deliver details in highly complex scenes (Figure 3.34). Again, according to the authors, this might be a result of the fact that the decoder is producing only 64x64 images which are later upsampled.

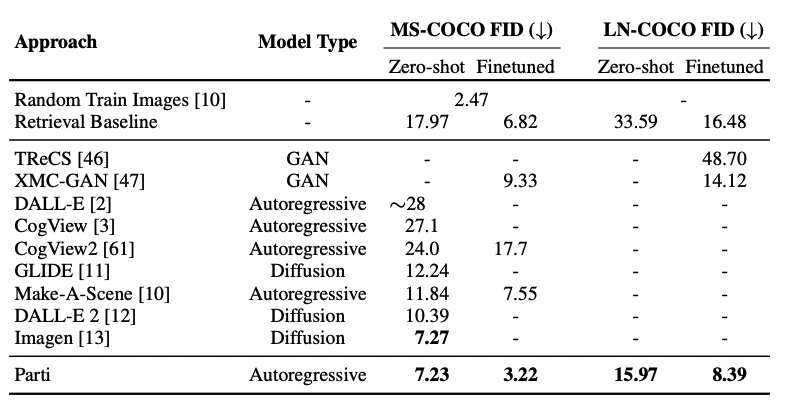

3.2.6 Imagen & Parti

Only a few months after unCLIP was released by OpenAI, Google came into play for the first time with its new model called Imagen (Saharia et al. 2022b). Another one followed just two months later - Parti (J. Yu, Xu, Koh, et al. 2022b). Both of these models pushed the boundaries even further, although they take entirely different approaches. Neither of them introduces a completely new way of looking at the problem of text-to-image generation. Their advancements come from engineering and further scaling of existing solutions. However, it must be stressed that currently (September 2022) they are delivering the most outstanding results.

Imagen is a diffusion model. Its main contribution is that instead of using a text encoder trained on image captions, it uses a huge pretrained NLP model called T5-XXL (Raffel et al. 2019b) that is taken off the shelf and frozen. The authors argue that this helps the model understand language much more deeply, as it has seen more diverse and complex texts than just image captions.

On the other hand, Parti takes an autoregressive approach. Similarly to the first version of Dall-E, it consists of two stages, namely an image tokenizer and a sequence-to-sequence autoregressive part which is responsible for generating image tokens from a set of text tokens. In this case, ViT-VQGAN (Yu et al. 2021) is used as the tokenizer and the autoregressive component is again Transformer-like.

Results

Both of the models improved the FID significantly compared to the previous works. Figure 3.35 shows the comparison.

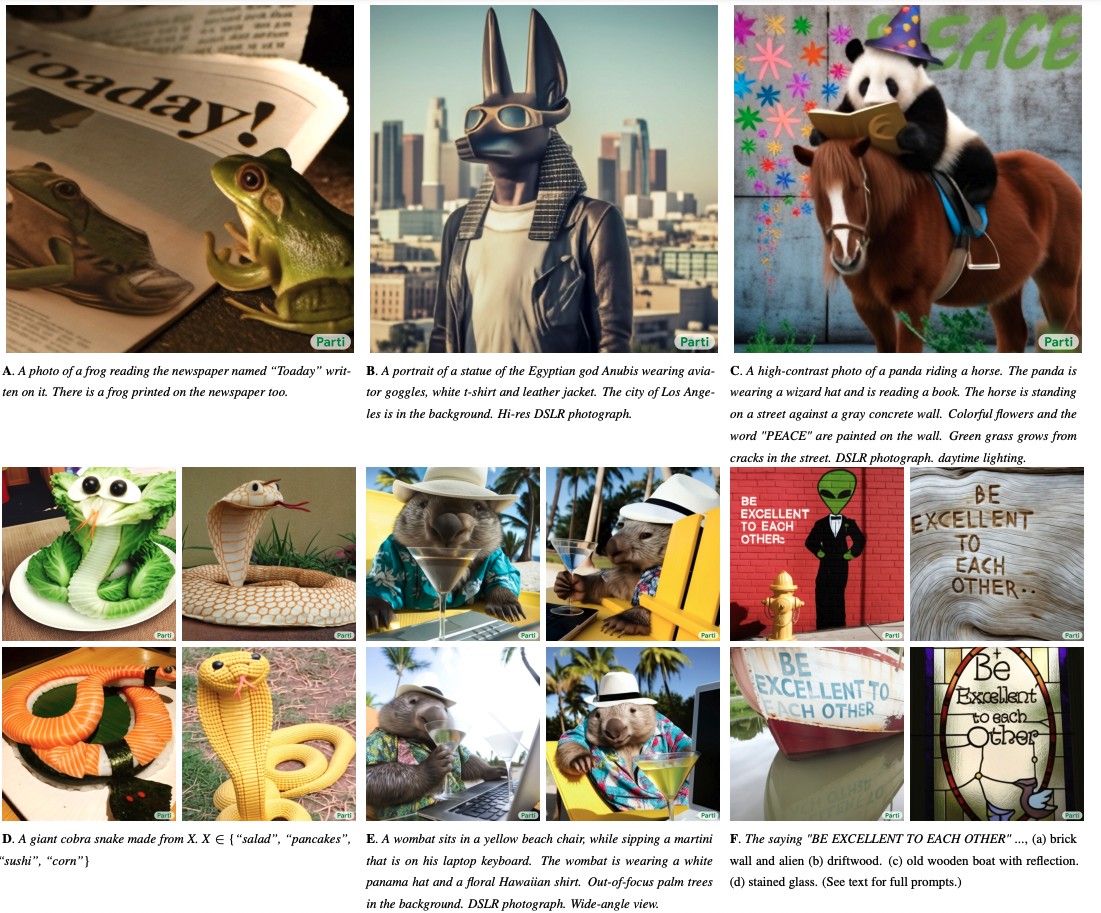

Samples from Parti can be seen in Figure 3.36. They are included here on purpose - this is the current state-of-the-art as of the moment of writing!

Limitations

J. Yu, Xu, Koh, et al. (2022b) mention an extensive list of problems with which Parti still struggles. At this point, all of them can be treated as a set that is common to almost all available models. Among others, they include:

- feature blending (where features of two different objects are mixed)

- omission or duplication of details

- displaced positioning of objects

- counting

- negation in text prompts

and many more. These flaws pose a challenge for future research and undoubtedly they are the ones that need to be addressed first to enable another leap forward in the field of text-to-image generation.

3.2.7 Discussion

Lastly, it is important to mention a couple of different topics, or trends, which are intrinsically linked with text-to-image generation. Together with previous sections, they should give the reader a holistic view of where research currently stands (again, as of September 2022).

Open- vs closed-source

The first trend that has emerged only recently is that AI labs do not open-source their state-of-the-art models and training data. This is in clear opposition to how the entire AI community behaved from the very beginning of the recent Deep Learning era. Apparently, possible commercial opportunities that come along with owning the software are too big to be ignored. The trend is very disruptive - it is clear that the community is currently witnessing the maturation of AI business models. Needless to say, it is followed by all the greatest AI labs, just to name a few: OpenAI, DeepMind, Google Brain, Meta AI, and many others. As long as commercial achievements have an edge over academic community research, it is highly doubtful that the trend will be reversed. However, it needs to be stressed that all of them are still issuing more or less detailed technical specifications of their work in the form of scientific papers, which is definitely a positive factor. We, as a community, can only hope it will not change in the future.

Open-Source Community

Although the trend towards closed-source models is clearly visible across many Deep Learning areas, text-to-image research is actually well represented by an open-source community. The most important milestones of recent years indeed come from OpenAI; however, new approaches can be seen across a wide community of researchers. Many of these models are public, meaning that any user with minimal coding experience can play with them. Although we decided not to go into details of particular works, it is important to name a few that became the most popular:

- VQGAN-CLIP (Crowson et al. 2022)

- Midjourney (Midjourney 2022)

- Latent Diffusion (Rombach et al. 2021)

- Stable Diffusion (Rombach et al. 2022)

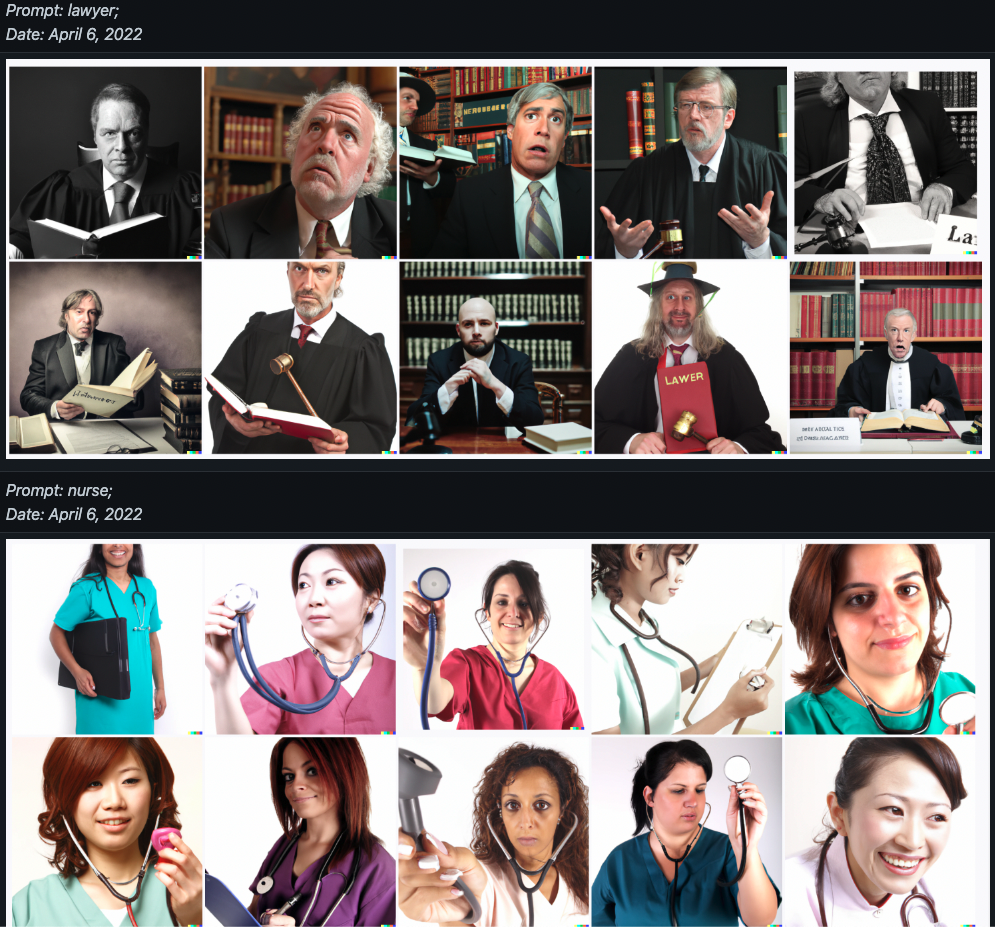

Potential applications