Chapter 2 Introducing the modalities

Authors: Cem Akkus, Vladana Djakovic, Christopher Benjamin Marquardt

Supervisor: Matthias Aßenmacher

Natural Language Processing (NLP) has existed for about 50 years, but it is more relevant than ever. There have been several breakthroughs in this branch of machine learning that is concerned with spoken and written language. For example, learning internal representations of words was one of the greater advances of the last decade. Word embeddings (Mikolov, Chen, et al. (2013a), Bojanowski et al. (2016)) made it possible and allowed developers to encode words as dense vectors that capture their underlying semantic content. In this way, similar words are embedded close to each other in a lower-dimensional feature space. Another important challenge was solved by Encoder-decoder (also called sequence-to-sequence) architectures Sutskever, Vinyals, and Le (2014), which made it possible to map input sequences to output sequences of different lengths. They are especially useful for complex tasks like machine translation, video captioning or question answering. This approach makes minimal assumptions on the sequence structure and can deal with different word orders and active, as well as passive voice.

A definitely significant state-of-the-art technique is Attention Bahdanau, Cho, and Bengio (2014), which enables models to actively shift their focus – just like humans do. It allows following one thought at a time while suppressing information irrelevant to the task. As a consequence, it has been shown to significantly improve performance for tasks like machine translation. By giving the decoder access to directly look at the source, the bottleneck is avoided and at the same time, it provides a shortcut to faraway states and thus helps with the vanishing gradient problem. One of the most recent sequence data modeling techniques is Transformers (Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser, et al. (2017b)), which are solely based on attention and do not have to process the input data sequentially (like RNNs). Therefore, the deep learning model is better in remembering context-induced earlier in long sequences. It is the dominant paradigm in NLP currently and even makes better use of GPUs, because it can perform parallel operations. Transformer architectures like BERT (Devlin et al. 2018b), T5 (Raffel et al. 2019a) or GPT-3 (Brown et al. 2020) are pre-trained on a large corpus and can be fine-tuned for specific language tasks. They have the capability to generate stories, poems, code and much more. With the help of the aforementioned breakthroughs, deep networks have been successful in retrieving information and finding representations of semantics in the modality text. In the next paragraphs, developments for another modality image are going to be presented.

Computer vision (CV) focuses on replicating parts of the complexity of the human visual system and enabling computers to identify and process objects in images and videos in the same way that humans do. In recent years it has become one of the main and widely applied fields of computer science. However, there are still problems that are current research topics, whose solutions depend on the research’s view on the topic. One of the problems is how to optimize deep convolutional neural networks for image classification. The accuracy of classification depends on width, depth and image resolution. One way to address the degradation of training accuracy is by introducing a deep residual learning framework (He et al. 2015). On the other hand, another less common method is to scale up ConvNets, to achieve better accuracy is by scaling up image resolution. Based on this observation, there was proposed a simple yet effective compound scaling method, called EfficientNets (M. Tan and Le 2019a).

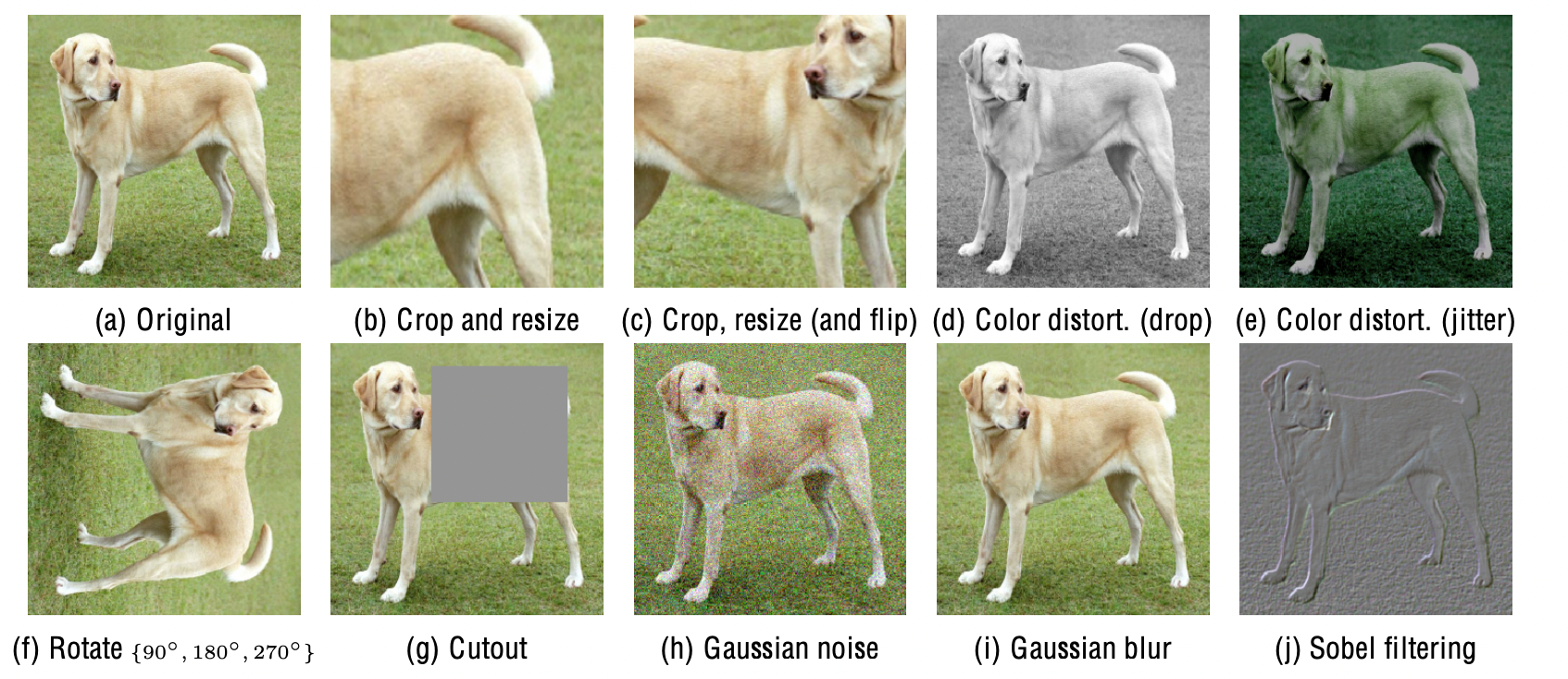

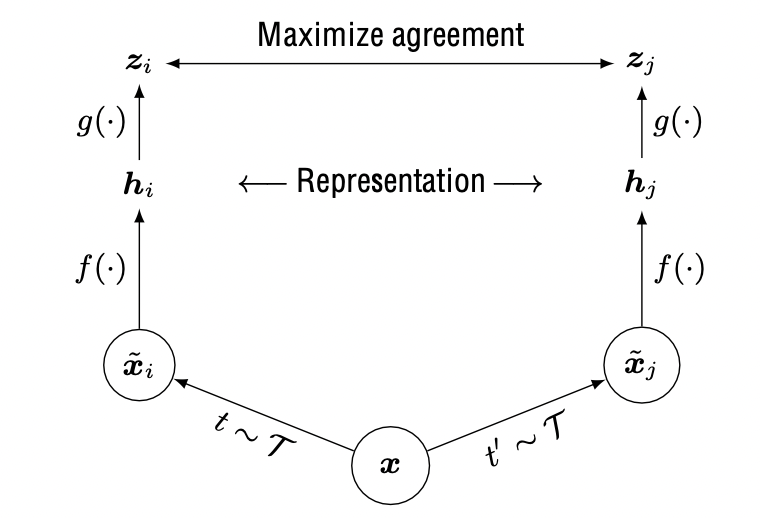

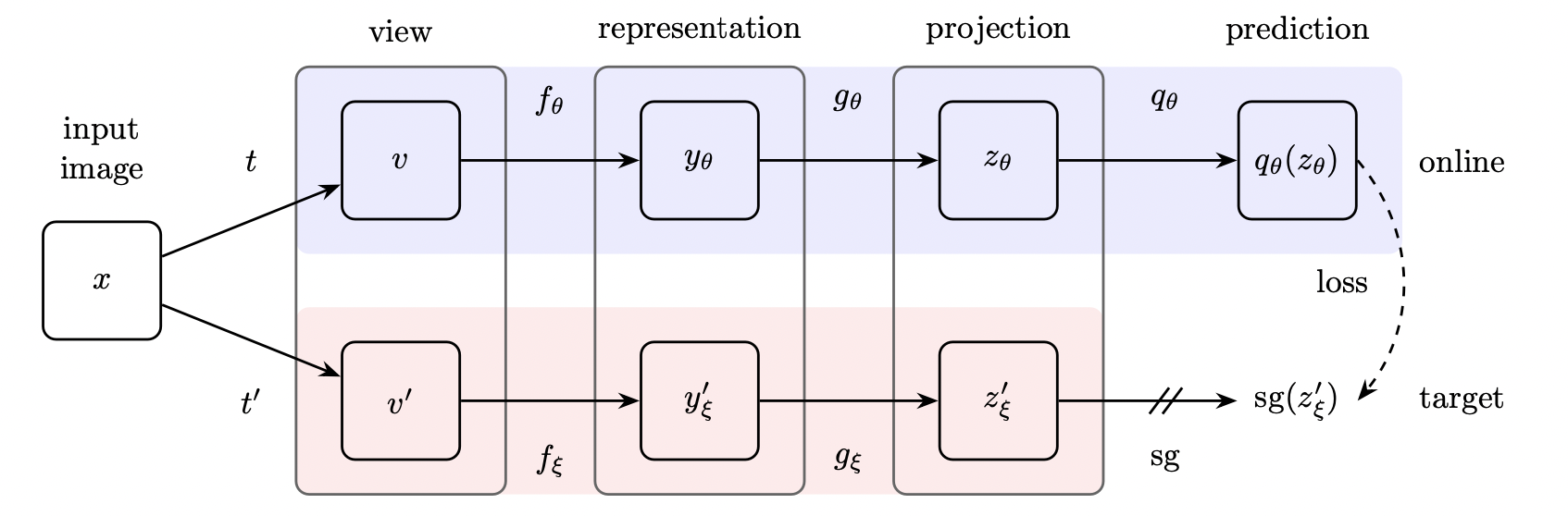

Another state-of-the-art trend in computer vision is learning effective visual representations without human supervision. Discriminative approaches based on contrastive learning in the latent space have recently shown great promise, achieving state-of-the-art results, but the simple framework for contrastive learning of visual representations, which is called SimCLR, outperforms previous work (T. Chen, Kornblith, Norouzi, and Hinton 2020a). However, another research proposes as an alternative a simple “swapped” prediction problem where we predict the code of a view from the representation of another view. Where features are learned by Swapping Assignments between multiple Views of the same image (SwAV) (Caron et al. 2020). Further recent contrastive methods are trained by reducing the distance between representations of different augmented views of the same image (‘positive pairs’) and increasing the distance between representations of augmented views from different images (‘negative pairs’). Bootstrap Your Own Latent (BYOL) is a new algorithm for self-supervised learning of image representatios (Grill, Strub, Altché, et al. 2020).

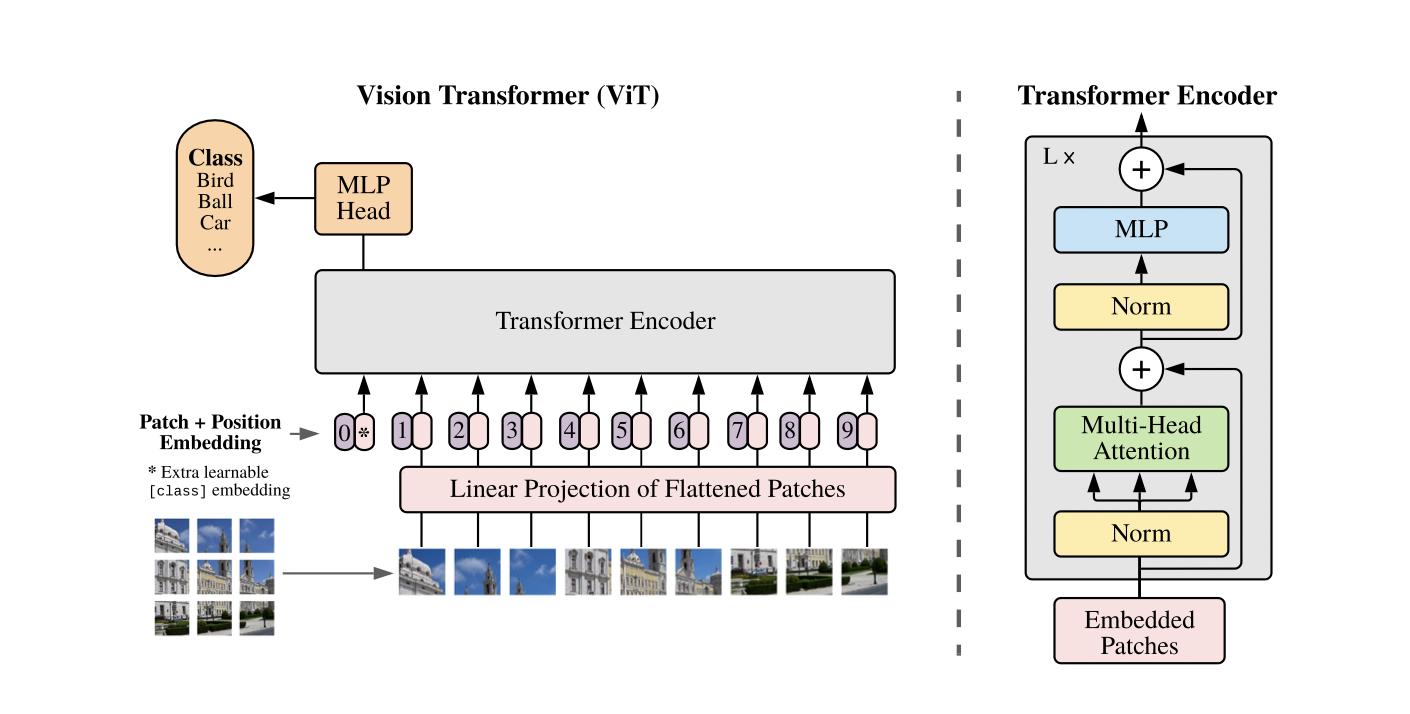

Self-attention-based architectures, in particular, Transformers have become the model of choice in natural language processing (NLP). Inspired by NLP successes, multiple works try combining CNN-like architectures with self-attention, some replacing the convolutions entirely. The latter models, while theoretically efficient, have not yet been scaled effectively on modern hardware accelerators due to the use of specialized attention patterns. Inspired by the Transformer scaling successes in NLP, one of the experiments is applying a standard Transformer directly to the image (Dosovitskiy, Beyer, Kolesnikov, Weissenborn, Zhai, Unterthiner, Dehghani, Minderer, Heigold, Gelly, Uszkoreit, et al. 2020a). Due to the widespread application of computer vision, these problems differ and are constantly being at the center of attention of more and more research.

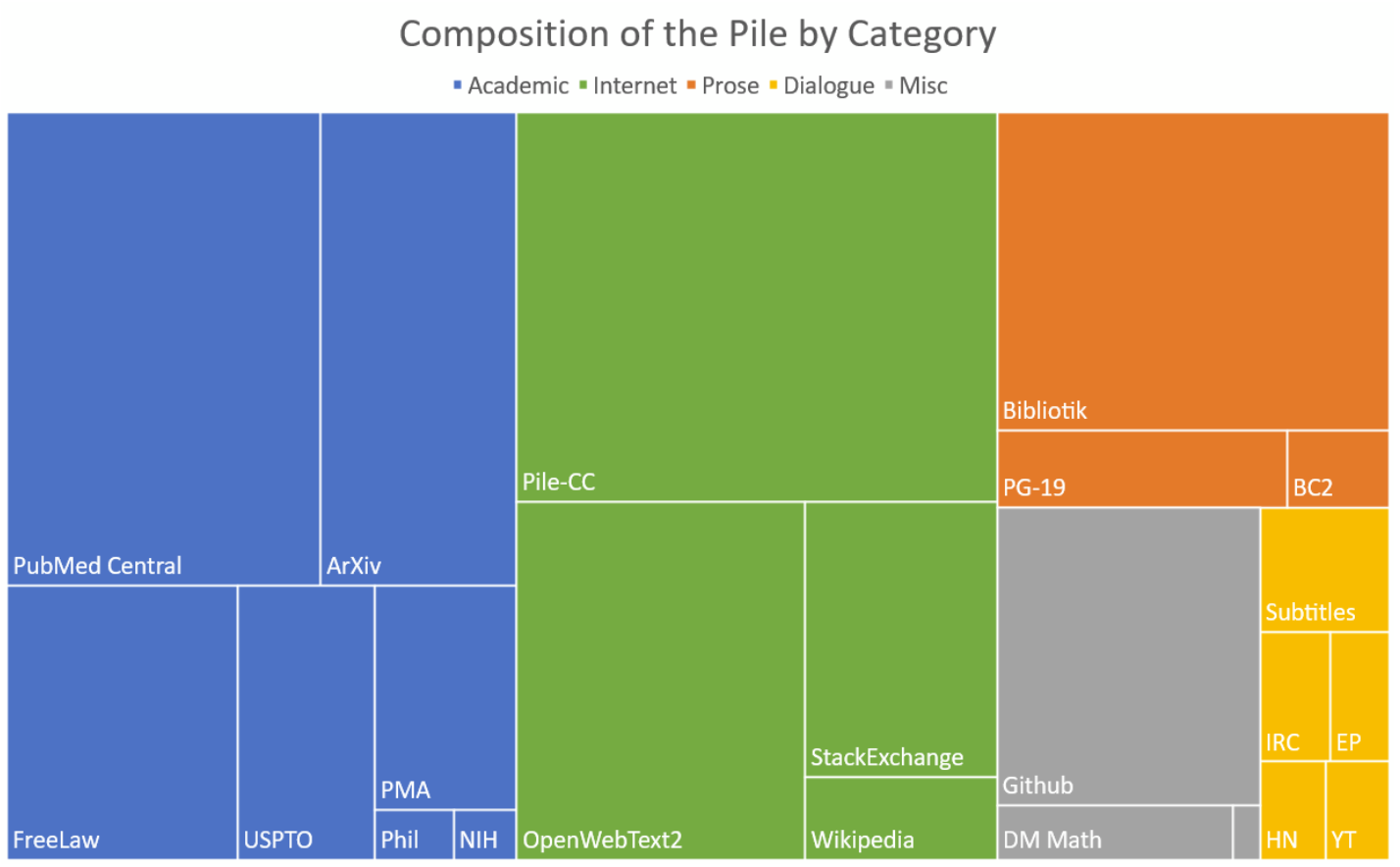

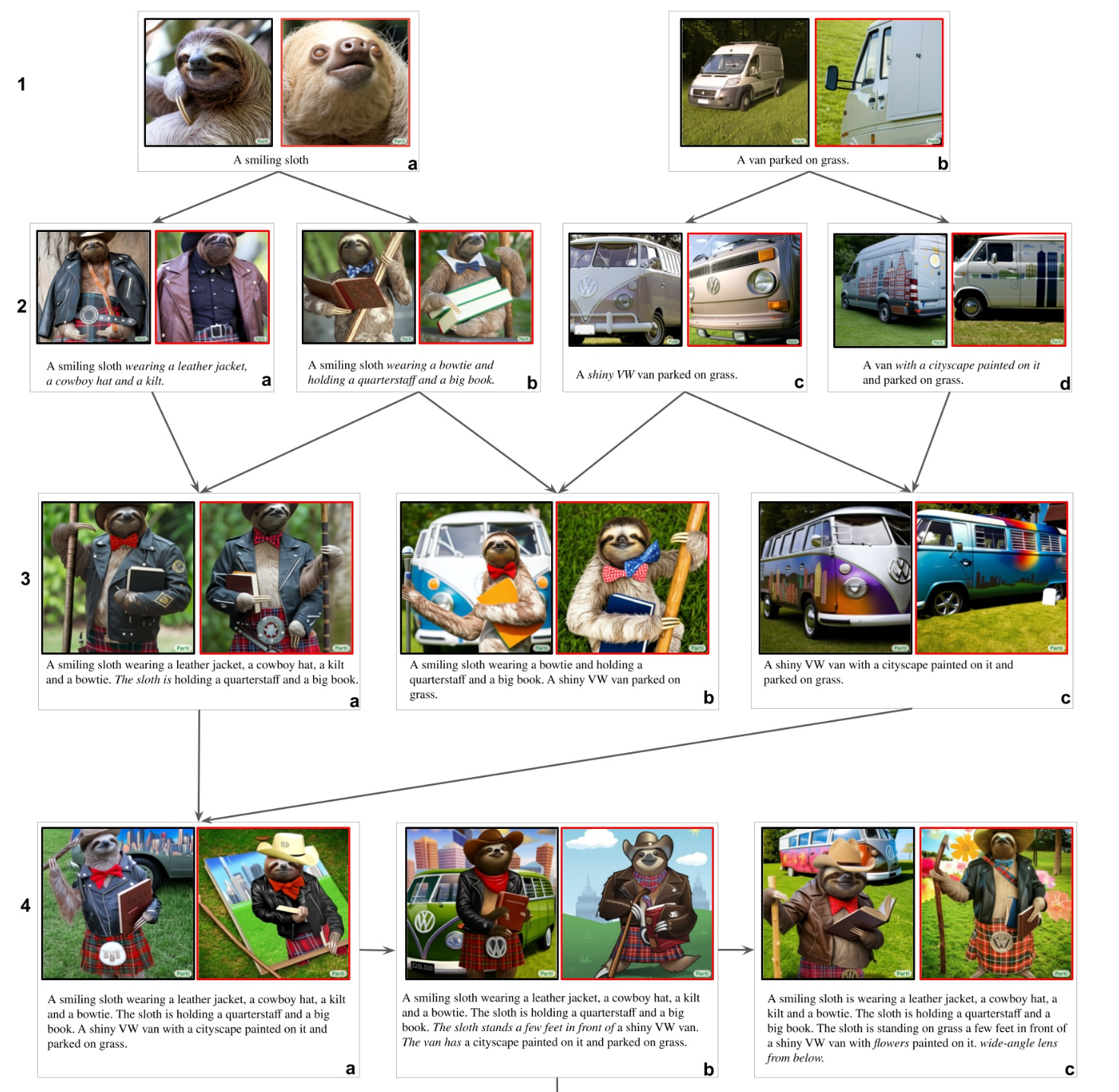

With the rapid development in NLP and CV in recent years, it was just a question of time to merge both modalities to tackle multi-modal tasks. The release of DALL-E 2 just hints at what one can expect from this merge in the future. DALL-E 2 is able to create photorealistic images or even art from any given text input. So it takes the information of one modality and turns it into another modality. It needs multi-modal datasets to make this possible, which are still relatively rare. This shows the importance of available data and the ability to use it even more. Nevertheless, all modalities are in need of huge datasets to pre-train their models. It’s common to pre-train a model and fine-tune it afterwards for a specific task on another dataset. For example, every state-of-the-art CV model uses a classifier pre-trained on an ImageNet based dataset. The cardinality of the datasets used for CV is immense, but the datasets used for NLP are of a completely different magnitude. BERT uses the English Wikipedia and the Bookscorpus to pre-train the model. The latter consists of almost 1 billion words and 74 million sentences. The pre-training of GPT-3 is composed of five huge corpora: CommonCrawl, Books1 and Books2, Wikipedia and WebText2. Unlike language model pre-training that can leverage tremendous natural language data, vision-language tasks require high-quality image descriptions that are hard to obtain for free. Widely used pre-training datasets for VL-PTM are Microsoft Common Objects in Context (COCO), Visual Genome (VG), Conceptual Captions (CC), Flickr30k, LAION-400M and LAION-5B, which is now the biggest openly accessible image-text dataset.

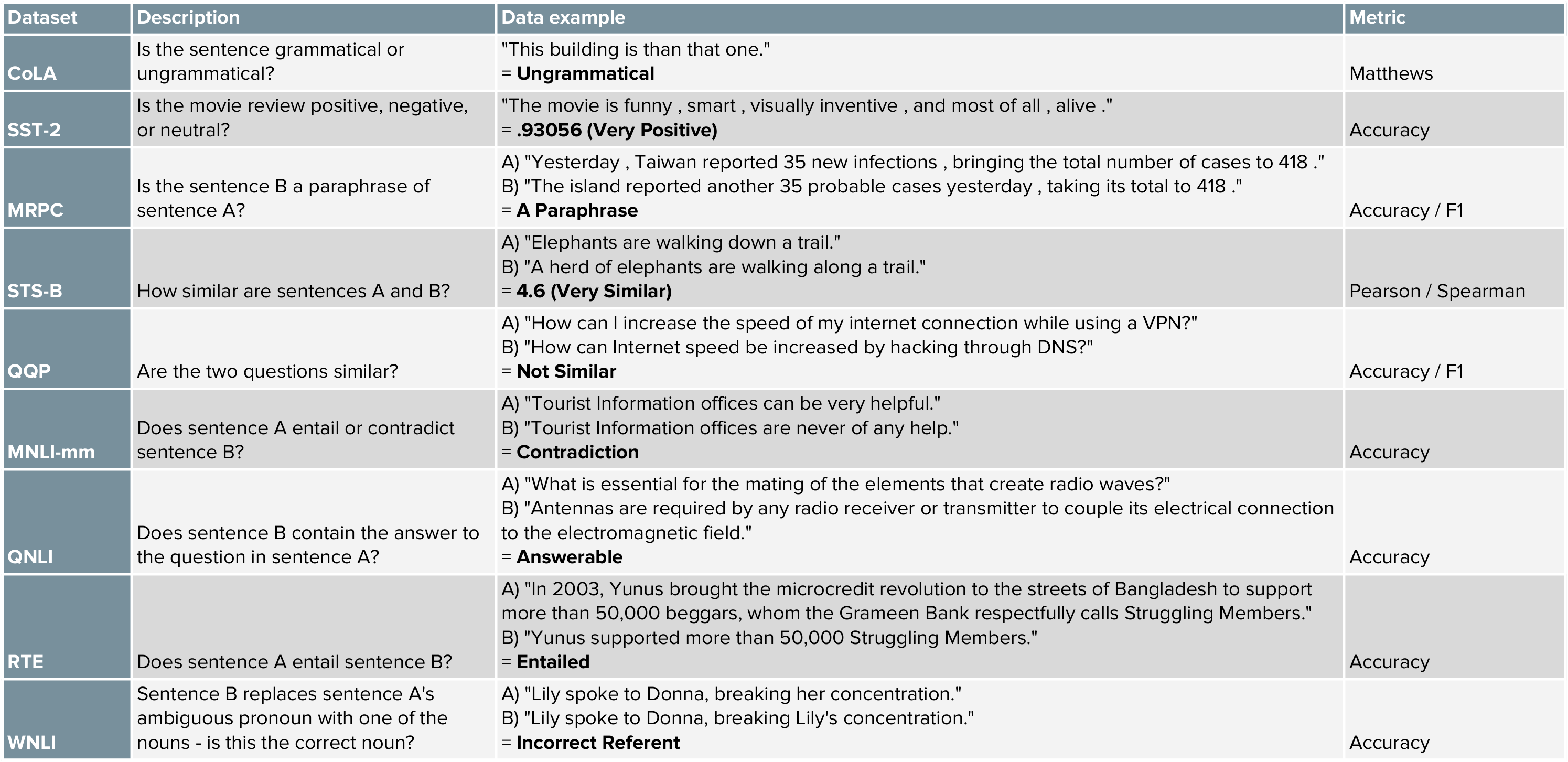

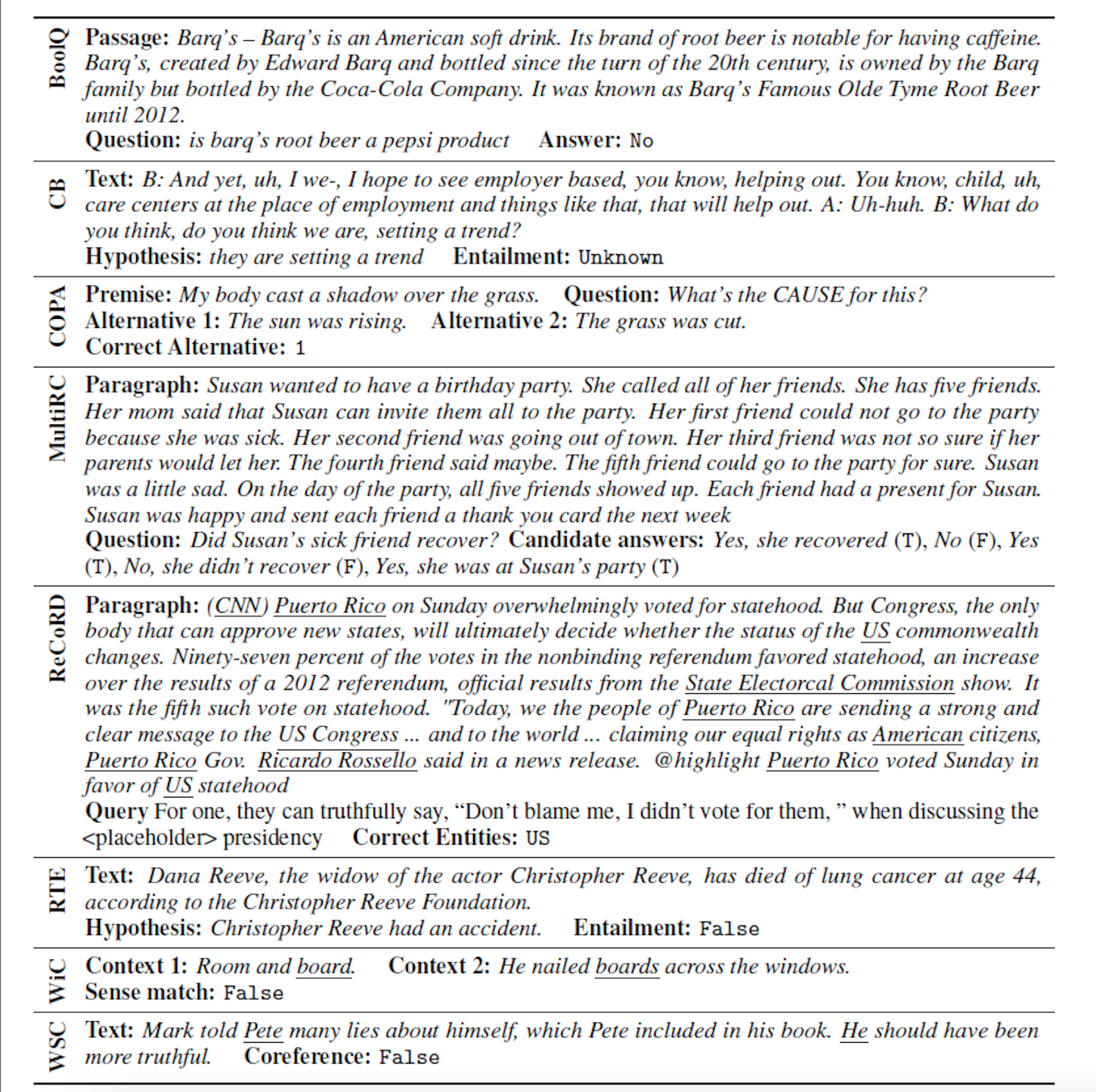

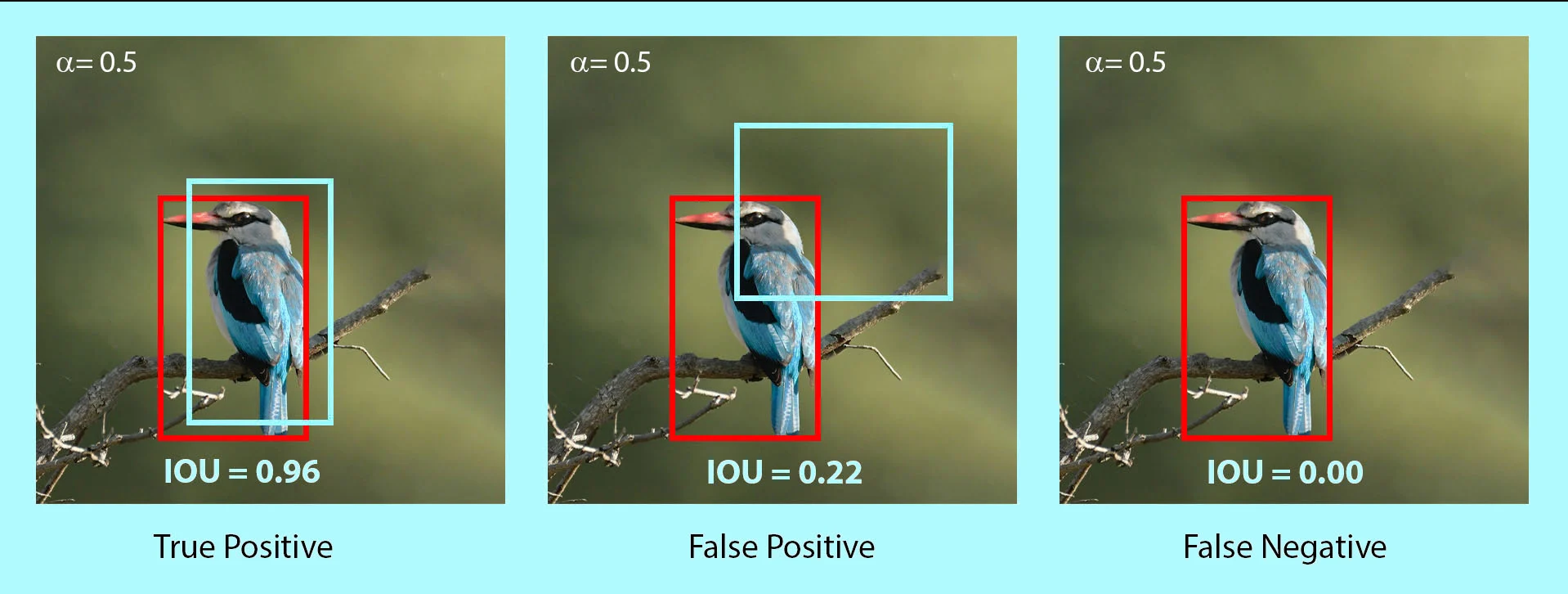

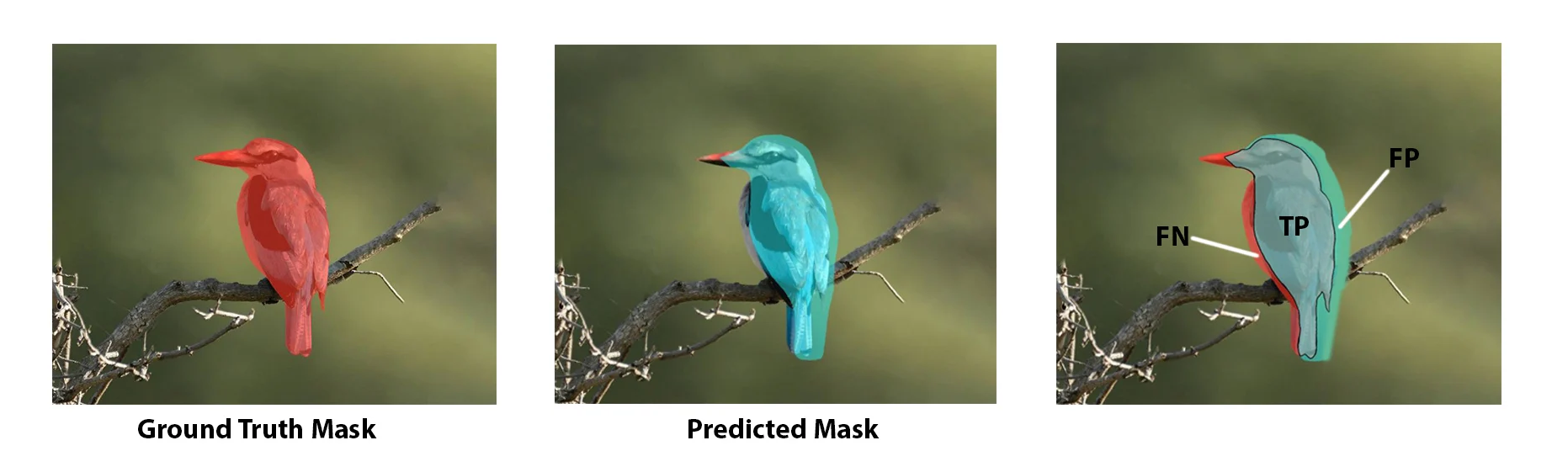

Besides the importance of pre-training data, there must also be a way to test or compare the different models. A reasonable approach is to compare the performance on specific tasks, which is called benchmarking. A nice feature of benchmarks is that they allow us to compare the models to a human baseline. Different metrics are used to compare the performance of the models. Accuracy is widely used, but there are also some others. For CV the most common benchmark datasets are ImageNet, ImageNetReaL, CIFAR-10(0), OXFORD-IIIT PET, OXFORD Flower 102, COCO and Visual Task Adaptation Benchmark (VTAB). The most common benchmarks for NLP are General Language Understanding Evaluation (GLUE), SuperGLUE, SQuAD 1.1, SQuAD 2.0, SWAG, RACE, ReCoRD, and CoNLL-2003. VTAB, GLUE and SuperGLUE also provide a public leader board. Cross-modal tasks such as Visual Question Answering (VQA), Visual Commonsense Reasoning (VCR), Natural Language Visual Reasoning (NLVR), Flickr30K, COCO and Visual Entailment are common benchmarks for VL-PTM.

2.1 State-of-the-art in NLP

Author: Cem Akkus

Supervisor: Matthias Aßenmacher

2.1.1 Introduction

Natural Language Processing (NLP) exists for about 50 years, but it is more relevant than ever. There have been several breakthroughs in this branch of machine learning that is concerned with spoken and written language. In this work, the most influential ones of the last decade are going to be presented. Starting with word embeddings, which efficiently model word semantics. Encoder-decoder architectures represent another step forward by making minimal assumptions about the sequence structure. Next, the attention mechanism allows human-like focus shifting to put more emphasis on more relevant parts. Then, the transformer applies attention in its architecture to process the data non-sequentially, which boosts the performance on language tasks to exceptional levels. At last, the most influential transformer architectures are recognized before a few current topics in natural language processing are discussed.

2.1.2 Word Embeddings

As mentioned in the introduction, one of the earlier advances in NLP is

learning word internal representations. Before that, a big problem with

text modelling was its messiness, while machine learning algorithms

undoubtedly prefer structured and well-defined fixed-length inputs. On a

granular level, the models rather work with numerical than textual data.

Thus, by using very basic techniques like one-hot encoding or

bag-of-words, a text is converted into its equivalent vector of numbers

without losing information.

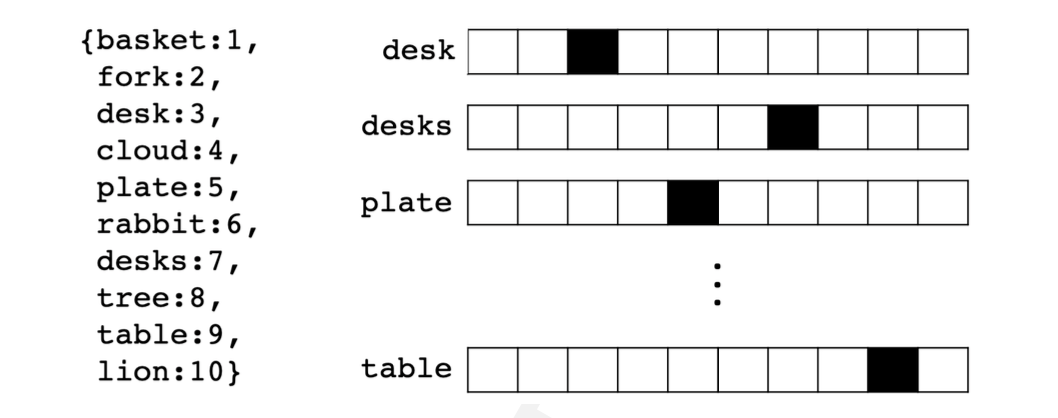

In the example depicting one-hot encoding (see Figure 2.1), there are ten simple words and the dark squares indicate the only index with a non-zero value.

FIGURE 2.1: Ten one-hot encoded words (Source: Pilehvar and Camacho-Collados (2021))

In contrast, there are multiple non-zero values while using

bag-of-words, which is another way of extracting features from text to

use in modelling where we measure if a word is present from a vocabulary

of known words. It is called bag-of-words because the order is

disregarded here.

Treating words as atomic units has some plausible reasons, like

robustness and simplicity. It was even argued that simple models on a

huge amount of data outperform complex models trained on less data.

However, simple techniques are problematic for many tasks, e.g. when it

comes to relevant in-domain data for automatic speech recognition. The

size of high-quality transcribed speech data is often limited to just

millions of words, so simply scaling up simpler models is not possible

in certain situations and therefore more advanced techniques are needed.

Additionally, thanks to the progress of machine learning techniques, it

is realistic to train more complex models on massive amounts of data.

Logically, more complex models generally outperform basic ones. Other

disadvantages of classic word representations are described by the curse

of dimensionality and the generalization problem. The former becomes a

problem due to the growing vocabulary equivalently increasing the

feature size. This results in sparse and high-dimensional vectors. The

latter occurs because the similarity between words is not captured.

Therefore, previously learned information cannot be used. Besides,

assigning a distinct vector to each word is a limitation, which becomes

especially obvious for languages with large vocabularies and many rare

words.

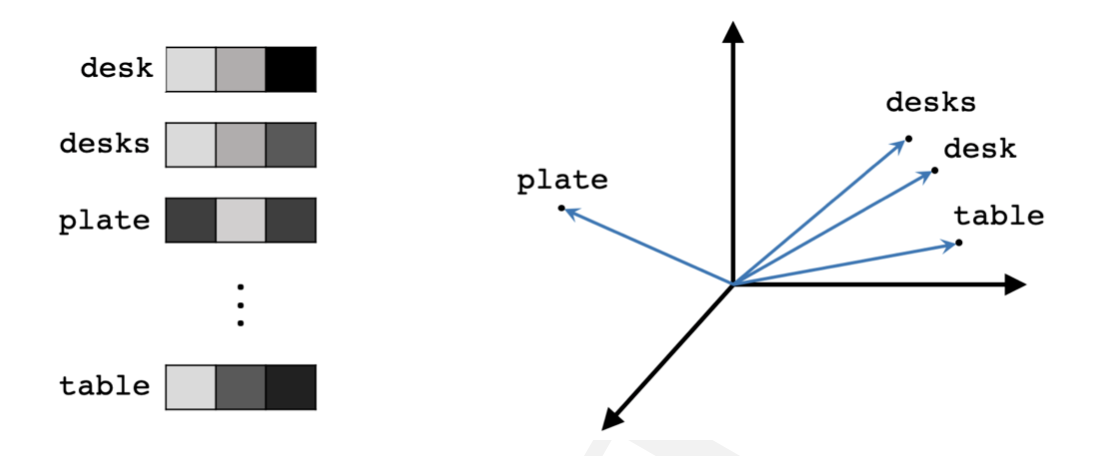

To combat the downfalls of simple word representations, word embeddings enable to use efficient and dense representations in which similar words have a similar encoding. So words that are closer in the vector space are expected to be similar in meaning. An embedding is hereby defined as a vector of floating point values (with the length of the vector being a hyperparameter). The values for the embedding are trainable parameters which are learned similarly to a model learning the weights for a dense layer. The dimensionality of the word representations is typically much smaller than the number of words in the dictionary. For example, Mikolov, Chen, et al. (2013a) called dimensions between 50-100 modest for more than a few hundred million words. For small data sets, dimensionality for the word vectors could start at 8 and go up to 1024 for larger data sets. It is expected that higher dimensions can rather pick up intricate relationships between words if given enough data to learn from.

FIGURE 2.2: Three-dimensional word embeddings (Source: Pilehvar and Camacho-Collados (2021)).

For any NLP tasks, it is sensible to start with word embeddings because

it allows to conveniently incorporate prior knowledge into the model and

can be seen as a basic form of transfer learning. It is important to

note that even though embeddings attempt to represent the meaning of

words and do that to an extent, the semantics of the word in a given

context cannot be captured. This is due to the words having static

precomputed representations in traditional embedding techniques. Thus,

the word "bank" can either refer to a financial institution or a river

bank. Contextual embedding methods offer a solution, but more about them

will follow later.

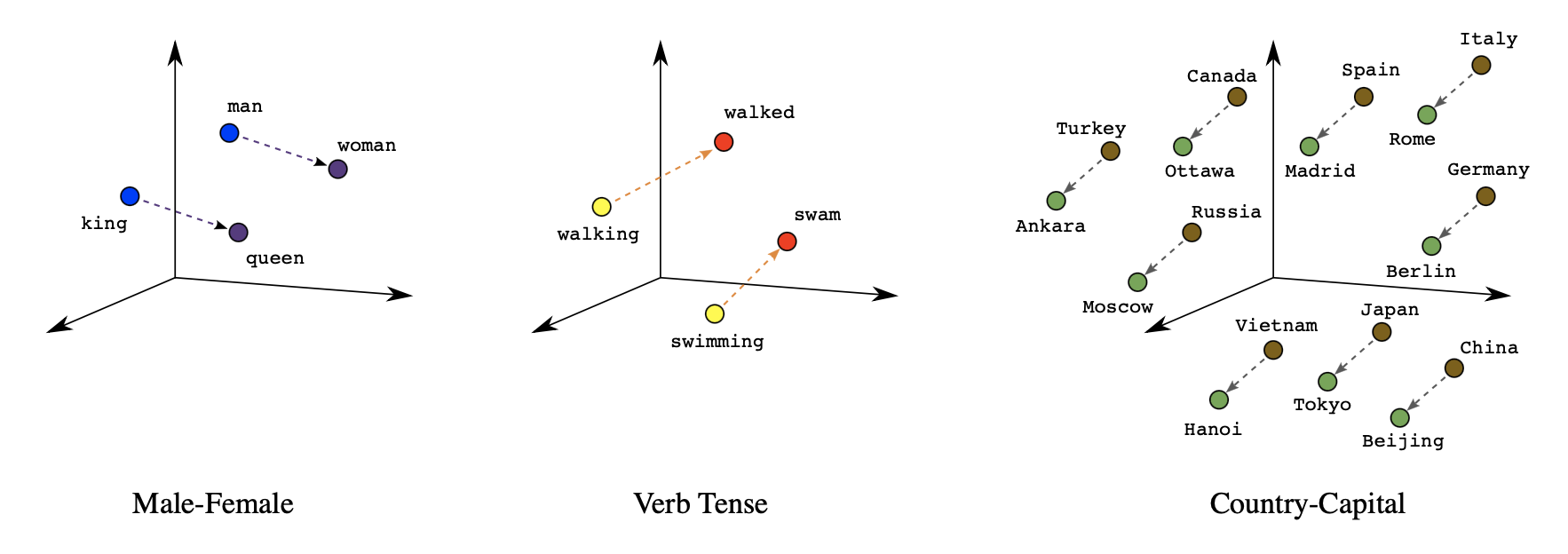

It should be noted that words can have various degrees of similarity. In the context of inflectional languages, it becomes obvious because words are adjusted to articulate grammatical categories. For example, in a subspace of the original vector, nouns that have similar endings can be found. However, it even exceeds simple syntactic regularities. With straightforward operations on the word vectors, it can be displayed that \(vector(\text{King}) - vector(\text{Man}) + vector(\text{Woman})\) equals a vector that is closest in vector space (and therefore in meaning) to the word "Queen". A simple visualization of this relationship can be seen in the left graph below (see Figure 2.3). The three coordinate systems are representations of higher dimensions that are depicted in this way via dimension reduction techniques. Furthermore, the verb-to-tense relationship is expressed in the middle graphic, which extends the insight from before referring to the word endings being similar because in this instance the past tenses of both verbs walking and swimming are not similar in structure. Additionally, on the right side of the figure, there is a form of the commonly portrayed and easily understood Country-Capital example (see Mikolov, Chen, et al. (2013a)).

FIGURE 2.3: Three types of similarities as word embeddings (Source: Google (2022)).

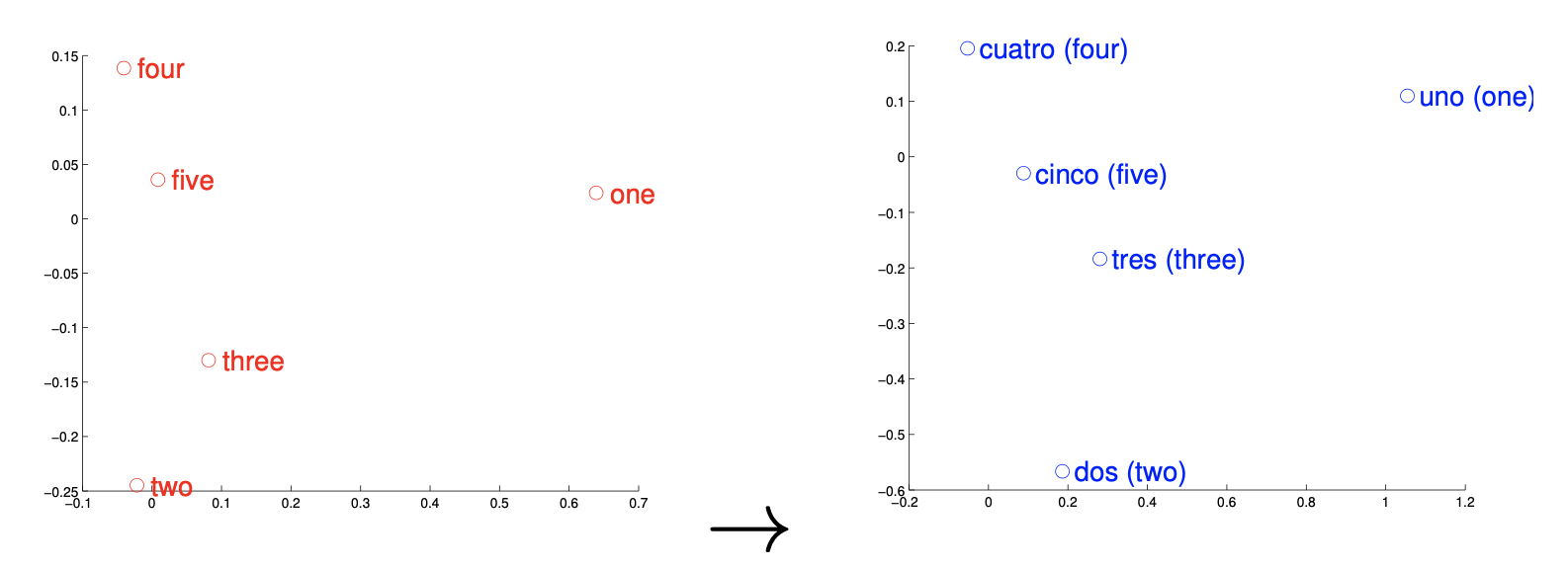

Another way of using vector representations of words is in the field of translations. It has been presented that relations can be drawn from feature spaces of different languages. In below, the distributed word representations of numbers between English and Spanish are compared. In this case, the same numbers have similar geometric arrangements, which suggests that mapping linearly between vector spaces of languages is feasible. Applying this simple method for a larger set of translations in English and Spanish led to remarkable results - achieving almost 90 % precision.

FIGURE 2.4: Representations of numbers in English and Spanish (Source: Mikolov, Le, and Sutskever (2013)).

This technique was then used for other experiments. One use case is the

detection of dictionary errors. Taking translations from a dictionary

and computing their geometric distance returns a confidence measure.

Closely evaluating the translations with low confidence and outputting

an alternative (one that is closest in vector space) results in a plain

way to assess dictionary translations. Furthermore, training the word

embeddings on a large corpora makes it possible to give sensible

out-of-dictionary predictions for words. This was tested by randomly

removing a part of the vocabulary before. Taking a look at the

predictions revealed that they were often to some extent related to the

translations with regard to meaning and semantics. Despite the

accomplishments in other tasks, translations between distant languages

exposed shortcomings of word embeddings. For example, the accuracy for

translations between English and Vietnamese seemed significantly lower.

This can be ascribed to both languages not having a good one-to-one

correspondence because the concept of a word is different than in

English. In addition, the used Vietnamese model contains numerous

synonyms, which complicates making exact predictions (see

Mikolov, Le, and Sutskever (2013)).

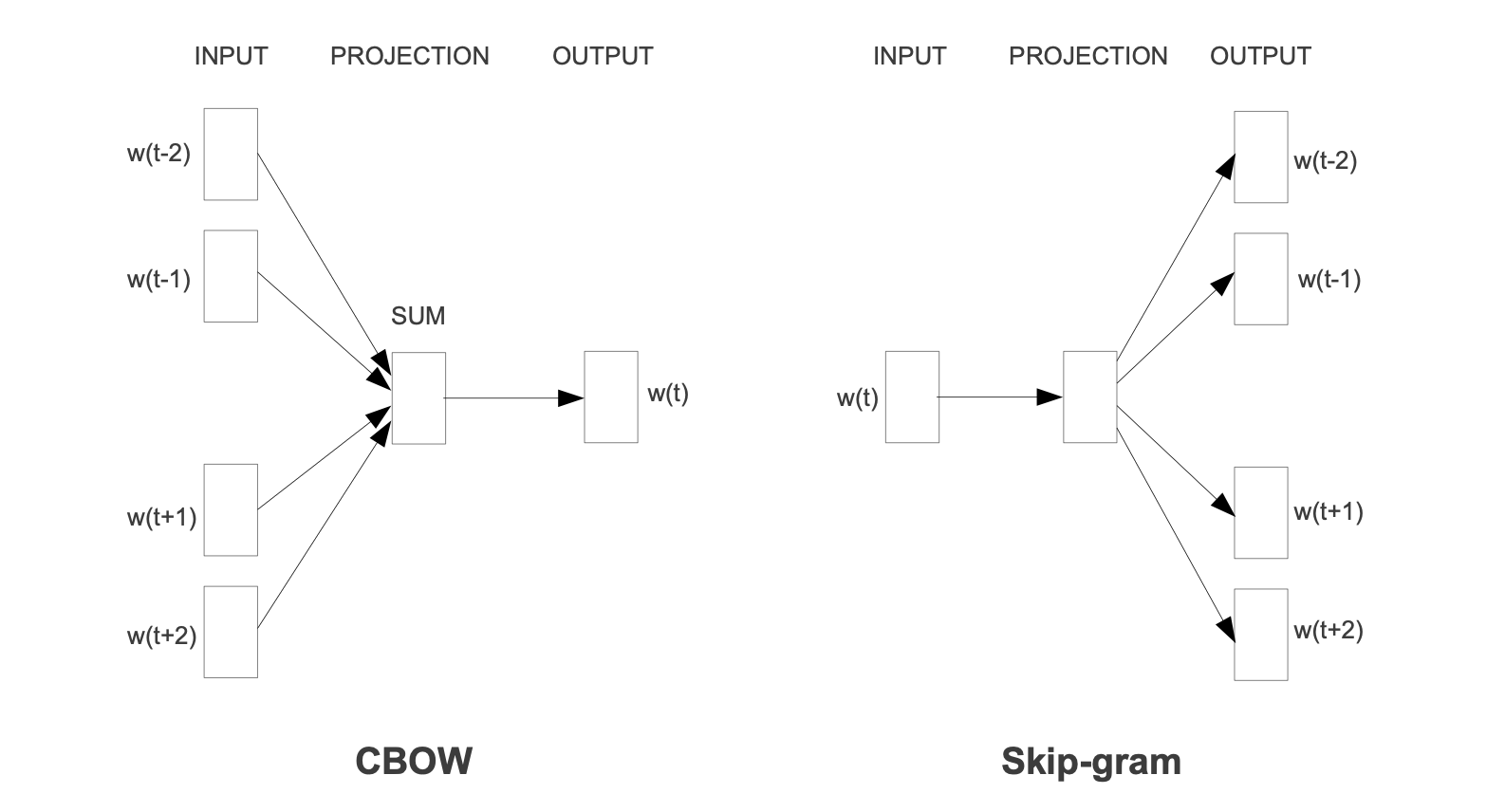

Turning the attention to one of the most impactful embedding techniques, word2vec. It was proposed by Mikolov, Chen, et al. (2013a) and is not a singular algorithm. It can rather be seen as a family of model architectures and optimizations to learn word representations. Word2vec’s popularity also stems from its success on multiple downstream natural language processing tasks. It has a very simple structure which is based on a basic feed forward neural network. They published multiple papers (see Mikolov, Chen, et al. (2013a)], Mikolov, Le, and Sutskever (2013), Mikolov, Sutskever, et al. (2013)) that are stemming around two different but related methods for learning word embeddings (see Figure 2.5). Firstly, the Continuous bag-of-words model aims to predict the middle word based on surrounding context words. Hence, it considers components before and after the target word. As the order of words in the context is not relevant, it is called a bag-of-words model. Secondly, the Continuous skip-gram model only considers the current word and predicts others within a range before and after it in the same sentence. Both of the models use a softmax classifier for the output layer.

Then, Bojanowski et al. (2016) built on skip-gram models by accounting for the morphology (internal structure) of words. A different classical embedding architecture that has to be at least mentioned is the GloVe model, which does not use a neural network but incorporates local context information with global co-occurrence statistics.

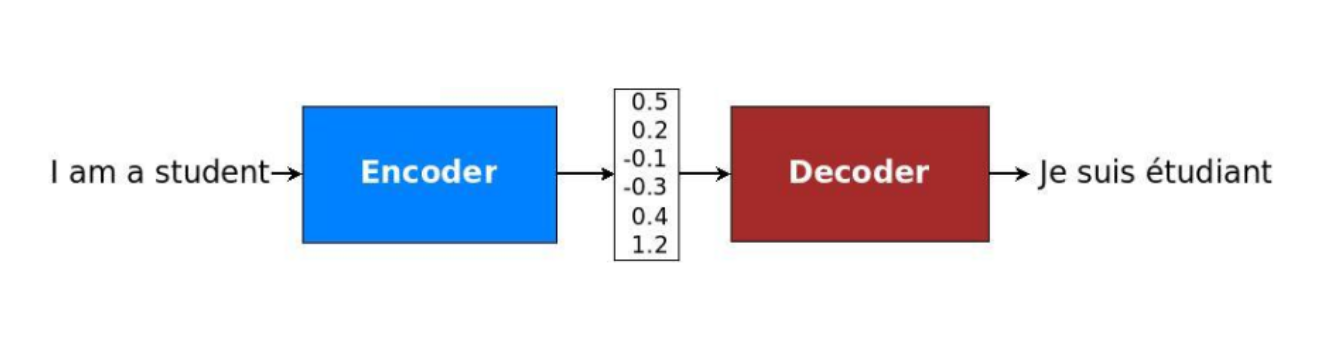

2.1.3 Encoder-Decoder

The field of natural language processing is concerned with a variety of different tasks surrounding text. Depending on the type of NLP problem, the network may be confronted with variable length sequences as input and/or output. This is the case for many compelling applications, such as question answering, dialogue systems or machine translation. In the following, many examples will explore machine translations in more detail, since it is a major problem domain. Regarding translation tasks, it becomes obvious that input sequences need to be mapped to output sequences of different lengths. To manage this type of input and output, a design with two main parts could be useful. The first one is called the encoder because, in this part of the network, a variable length input sequence is transformed into a fixed state. Next, the second component called the decoder maps the encoded state to an output of a variable length sequence. As a whole, it is known as an encoder-decoder or sequence-to-sequence architecture and has become an effective and standard approach for many applications which even recurrent neural networks with gated hidden units have trouble solving successfully. Deep RNNs may have a chance, but different architectures like encoder-decoder have proven to be the most effective. It can even deal with different word orders and active, as well as passive voice (Sutskever, Vinyals, and Le 2014). A simplified example of the encoder-decoder model can be seen in 2.6.

FIGURE 2.6: Translation through simplified seq2seq model (Source: Manning, Goldie, and Hewitt (2022)).

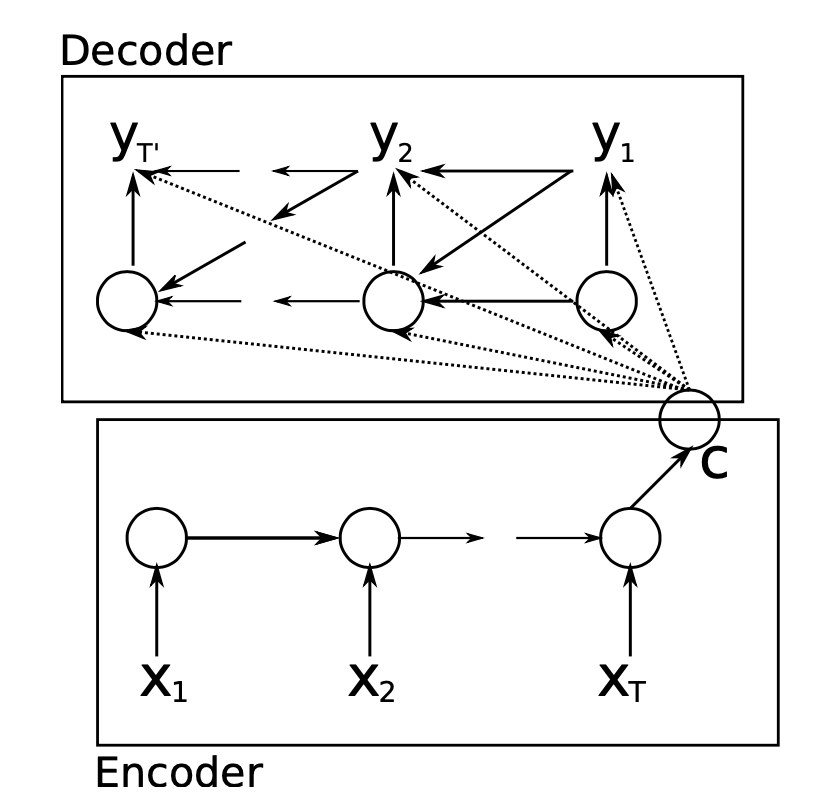

Before going through the equations quantifying the concepts, it makes sense to examine the sequence-to-sequence design proposed by Cho et al. (2014). An encoder-RNN processes the input sequence of length \(n_x\) and computes a fixed-length context vector \(C\), which is usually the final hidden state of the encoder or a simple function of the hidden states. After the input sequence is processed, it is added to the hidden state and passed forward in time through the recurrent connections between the hidden states in the encoder. Despite the context vector usually being a simple function of the last hidden state, its role cannot be underestimated. Specifically, the encoded state summarizes important information from the input sequence, e.g. the intent in a question answering task or the meaning of a text in the case of machine translation. After the context is passed to every hidden state of the decoder, the decoder RNN uses this information to produce the target sequence of length \(n_y\), which can of course vary from \(n_x\).

FIGURE 2.7: Encoder-decoder architecture (Source: Cho et al. (2014)).

At the latest through the above illustration, it is clear that the decoder is particularly interesting to look at in the form of equations. The notation mainly follows Cho et al. (2014). The decoder is another type of RNN which is trained to predict the target based on the hidden state at the last time step. However, unlike regular RNNs, it is also conditioned on the output of the last time step (\(y_{t-1}\)) and a summary of the input c. Therefore, the hidden state of the decoder is computed by:

\[h_d^{[t]} = f(h_d^{[t-1]},y^{[t-1]},c). \label{eqn:h_dec}\]

Similarly, each conditional probability is given by the following, where \(f\) is a non-linear activation function (and must produce probabilities in , e.g. the softmax function):

\[P(y^{[t]}|y^{[1]}, \dots ,y^{[t-1]},c) = f(h_d^{[t]}, y^{[t-1]}, c). \label{eqn:P_dec}\]

The two parts are jointly trained to maximize the conditional log-likelihood, where \(\theta\) denotes the set of model parameters and \((x_n, y_n)\) is an (input sequence, output sequence) pair from the training set with size \(N\):

\[\max_\theta \frac{1}{N} \displaystyle \sum_{n=1}^{N} \log p_{\theta}(y_n|x_n). \label{eqn:train_dec}\]

The best probability is usually found by using the beam search

algorithm. The core idea of it is that on each step of the decoder, we

keep track of the \(k\) most probable partial translations (which are

called hypotheses).

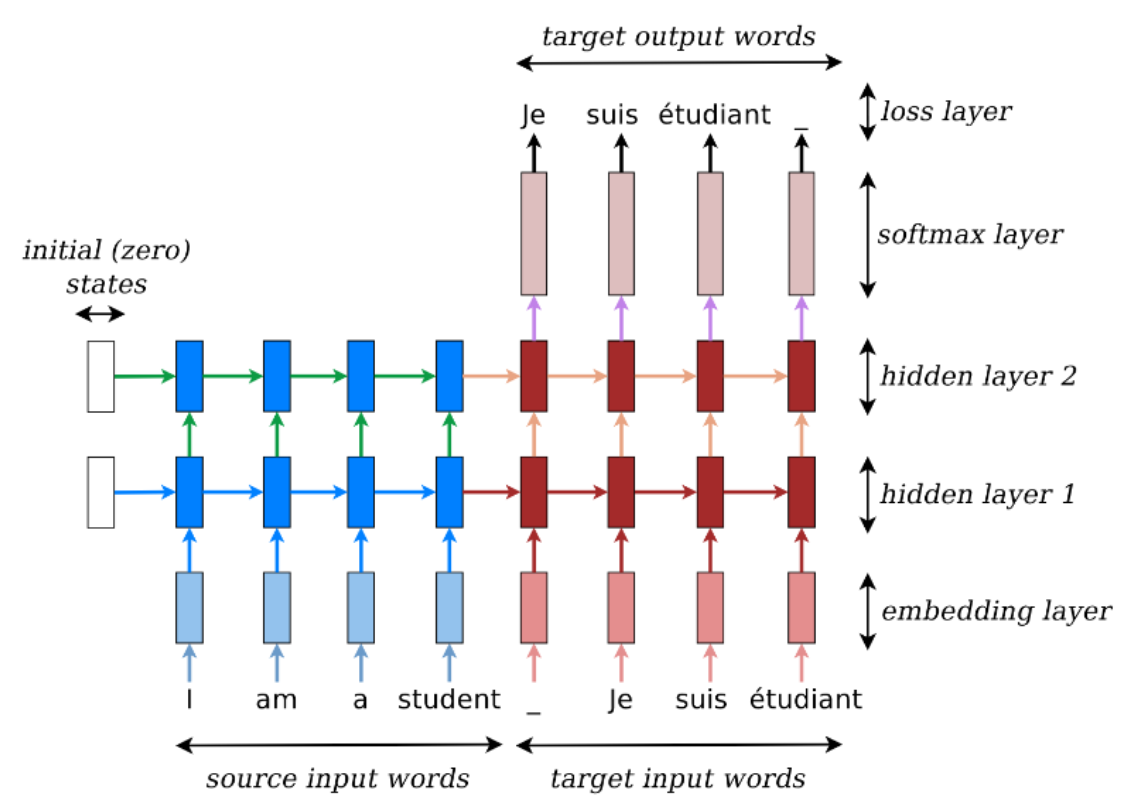

Examining the translation presented in with hidden units unrolled through time could look like in 2.8. In particular, multiple hidden layers are recommended by the researchers. The idea is that lower layers compute lower-level features and higher layers compute higher-level features.

FIGURE 2.8: Translation through seq2seq model (Source: Manning, Goldie, and Hewitt (2022)).

Gated recurrent networks, especially long short-term memory networks, have been found to be effective in both components of the sequence-to-sequence architecture. Furthermore, it was revealed that deep LSTMs significantly outperform shallow LSTMs. Each additional layer reduced perplexity by nearly 10%, possibly due to their much larger hidden state. For example, Sutskever, Vinyals, and Le (2014) used deep LSTMs with 4 layers and 1000 cells at each layer for 1000-dimensional word embeddings. Thus, in total, 8000 real numbers are used to represent a sentence. For simplification, the neural networks are in the following referred to as RNNs which is not contradicting the insights of this paragraph as LSTMs are a type of gated RNNS (Sutskever, Vinyals, and Le 2014).

2.1.4 Attention

Although encoder-decoder architectures simplified dealing with variable

length sequences, they also caused complications. Due to their design, the

encoding of the source sentence is a single vector representation

(context vector). The problem is that this state must compress all

information about the source sentence in a single vector and is commonly

referred to as the bottleneck problem. To be precise, the entire

semantics of arbitrarily long sentences need to be wrapped into a single

hidden state. Moreover, it constitutes a different learning problem

because the information needs to be passed between numerous time steps.

This leads to vanishing gradients within the network as a consequence of

factors less than 1 multiplied with each other at every point. To

illustrate, the last sentence is an ideal example of one in which an

encoder-decoder approach could have difficulty coping. In particular, if

the sentences are longer than the ones in the training corpus

(Manning, Goldie, and Hewitt 2022).

Due to the aforementioned reasons, an extension to the

sequence-to-sequence architecture was proposed by Bahdanau, Cho, and Bengio (2014), which

learns to align and translate jointly. For every generated word, the

model scans through some positions in the source sentence where the most

relevant information is located. Afterwards, based on the context around

and the previously generated words, the model predicts the target word

for the current time step. This approach is called attention, as it

emulates human-like (cognitive) attention. As a result of directly

looking at the source and bypassing the bottleneck, it provides a

solution to the problem. Then, it mitigates the vanishing gradient

problem, since there is now a shortcut to faraway states. Consequently,

incorporating the attention mechanism has been shown to considerably

boost the performance of models on NLP tasks.

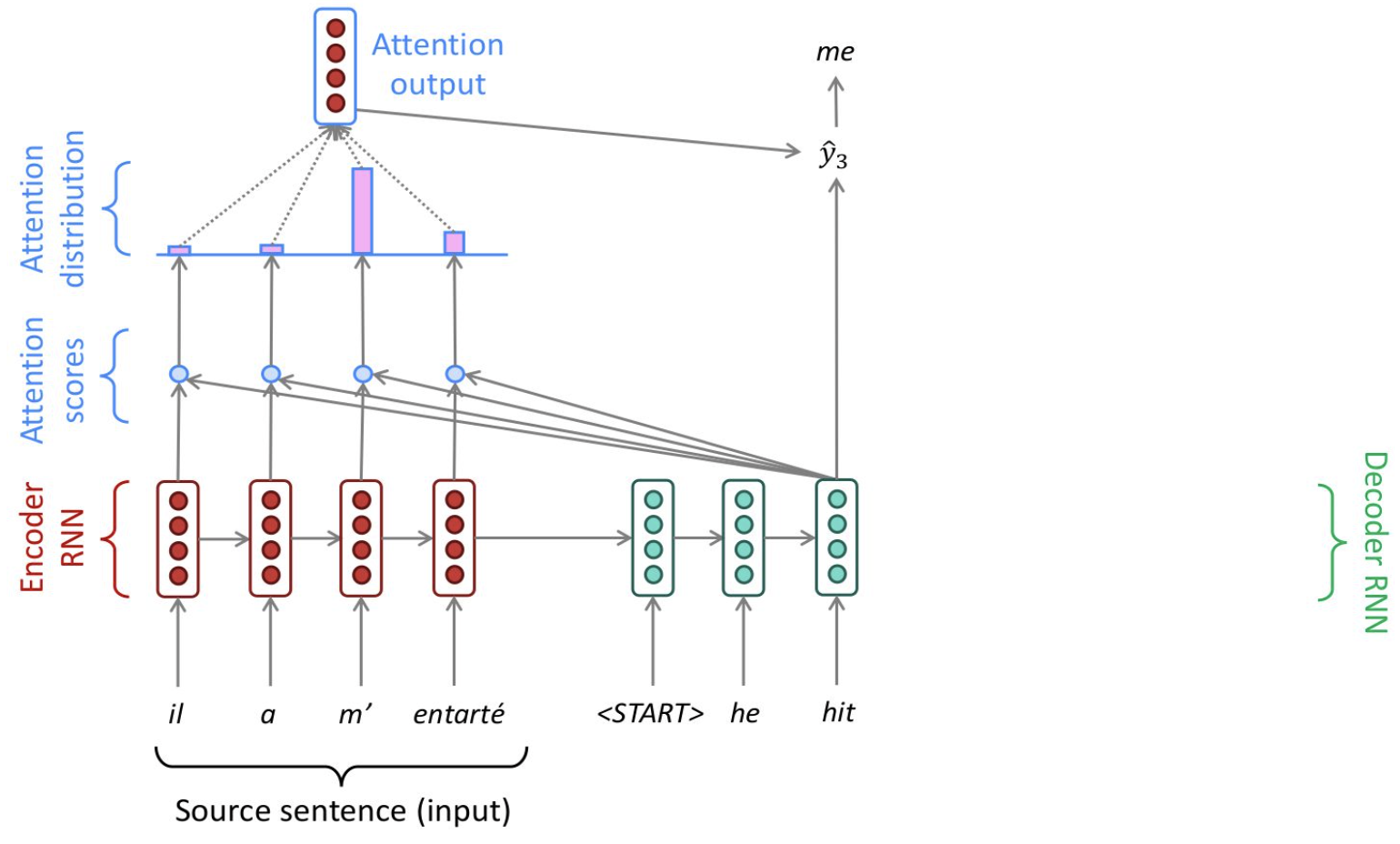

A walkthrough of the example below should resolve any outstanding questions regarding the procedure of the attention mechanism. The source sentence is seen on the bottom left, which is given in French and acts as the input for the encoder-RNN (in red). Then, the attention scores (in blue) are computed by taking the dot product between the previous output word and input words. Next, the softmax function turns the scores into a probability distribution (in pink). They are used to take a weighted sum of the encoder’s hidden states and form the attention output, which mostly contains information from the hidden states that received high attention. Afterwards, the attention output is concatenated with the decoder hidden state (in green), which is applied to compute the decoder output as before. In some scenarios, the attention output is also fed into the decoder (along with the usual decoder input). This specific example was chosen because "entarter" means "to hit someone with a pie" and is therefore a word that needs to be translated with many words. As a consequence of no existing direct equivalents for this phrase, it is expected that there is not only one nearly non-zero score. In this snapshot, the attention distribution can be seen to have two significant contributors.

FIGURE 2.9: Translation process with attention mechanism (Source: Manning, Goldie, and Hewitt (2022)).

The following equations aim to compactly represent the relations brought forward in the last paragraphs and mainly follow Manning, Goldie, and Hewitt (2022). The attention scores \(e^{[t]}\) are computed by scalarly combining the hidden state of the decoder with all of the hidden states of the encoder:

\[e^{[t]} = [(h_{d}^{[t]})^T h_{e}^{[1]}, \ldots , (h_{d}^{[t]})^T h_{e}^{[N]} ].\]

Besides the basic dot-product attention, there are also other ways to calculate the attention scores, e.g. through multiplicative or additive attention. Although they will not be further discussed at this point, it makes sense to at least mention them. Then, applying the softmax to the scalar scores results in the attention distribution \(\alpha^{[t]}\), a probability distribution whose values sum up to 1:

\[\alpha^{[t]} = softmax(e^{[t]}).\]

Next, the attention output \(a^{[t]}\) is obtained by the attention distribution acting as a weight for the encoder hidden states:

\[a^{[t]} = \sum_{i=1}^{N} \alpha_i^{[t]} h_{e,i}.\]

Concatenating attention output with decoder hidden state and proceeding as in the non-attention sequence-to-sequence model are the final steps:

\[o^{[t]} = f(a^{[t]} h_d^{[t]}).\]

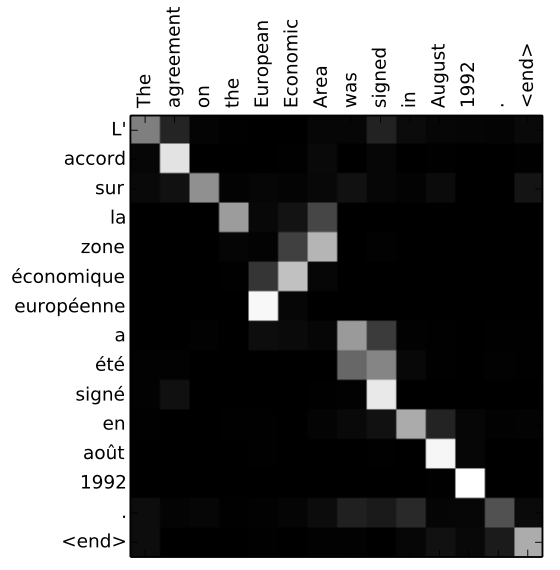

By visualizing the attention distribution, also called alignments (see

Bahdanau, Cho, and Bengio (2014)), it is easy to observe what the decoder was focusing on

and understand why it chose a specific translation. The x-axis of the

plot of below corresponds to the words in the source sentence (English)

and the y-axis to the words in the generated translation (French). Each

pixel shows the weight of the source word for the respective target word

in grayscale, where 0 is black and 1 is white. As a result, which

positions in the source sentence were more relevant when generating the

target word becomes apparent. As expected, the alignment between English

and French is largely monotonic, as the pixels are brighter, and

therefore the weights are higher along the main diagonal of the matrix.

However, there is an exception because adjectives and nouns are

typically ordered differently between the two languages. Thus, the model

(correctly) translated "European Economic Area" into "zone économique

européene". By jumping over two words ("European" and "Economic"),

it aligned "zone" with "area". Then, it looked one word back twice

to perfect the phrase "zone économique européene". Additional

qualitative analysis has shown that the model alignments are

predominantly analogous to our intuition.

FIGURE 2.10: Attention alignments (Source: Bahdanau, Cho, and Bengio (2014)).

2.1.5 Transformer

For this section, Manning, Goldie, and Hewitt (2022) constitutes the main source.

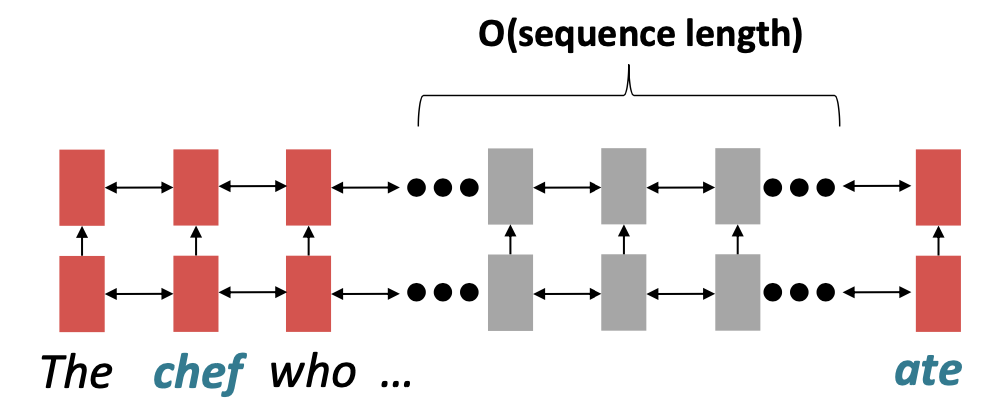

RNNs are unrolled from one side to the other. Thus, from left to right and right to left. This encodes linear locality, which is a useful heuristic because nearby words often affect each other’s meaning. But how is it when distant words need to interact with each other? For instance, if we mention a person at the beginning of a text portion and refer back to them only at the very end, the whole text in between needs to be tracked back (see below). Hence, RNNs take \(O(\text{sequence length})\) steps for distant word pairs to interact. Due to gradient problems, it is therefore hard to learn long-distance dependencies. In addition, the linear order is ingrained. Even though, as known, the sequential structure does not tell the whole story.

FIGURE 2.11: Sequential processing of recurrent model (Source: Manning, Goldie, and Hewitt (2022)).

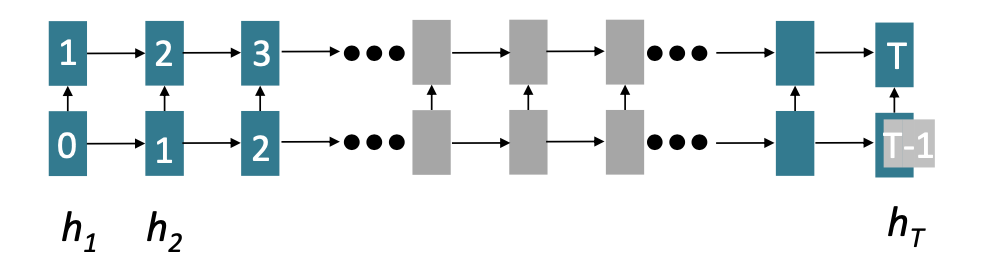

GPUs can perform multiple calculations simultaneously and could help to reduce the execution time of the deep learning algorithm massively. However, forward and backward passes lack parallelizability in recurrent models and have \(O(\text{sequence length})\). To be precise, future hidden states cannot be computed in full before past states have been computed. This inhibits training on massive data sets. indicates the minimum number of steps before the respective state can be calculated.

FIGURE 2.12: Sequential processing of recurrent model with number of steps indicated (Source: Manning, Goldie, and Hewitt (2022)).

After proving that attention dramatically increases performance, google

researchers took it further and based transformers solely on attention,

so without any RNNs. For this reason, the paper in which they were

introduced is called "Attention is all you need". Spoiler: It is not

quite all we need, but more about that on the following pages.

Transformers have achieved great results on multiple settings such as

machine translation and document generation. Their parallelizability

allows for efficient pretraining and leads them to be the standard model

architecture. In fact, all top models on the popular aggregate benchmark

GLUE are pretrained and Transformer-based. Moreover, they have even

shown promise outside of NLP, e.g. in Image Classification, Protein

Folding and ML for Systems (see Dosovitskiy, Beyer, Kolesnikov, Weissenborn, Zhai, Unterthiner, Dehghani, Minderer, Heigold, Gelly, and others (2020), Jumper et al. (2021),

Zhou et al. (2020), respectively).

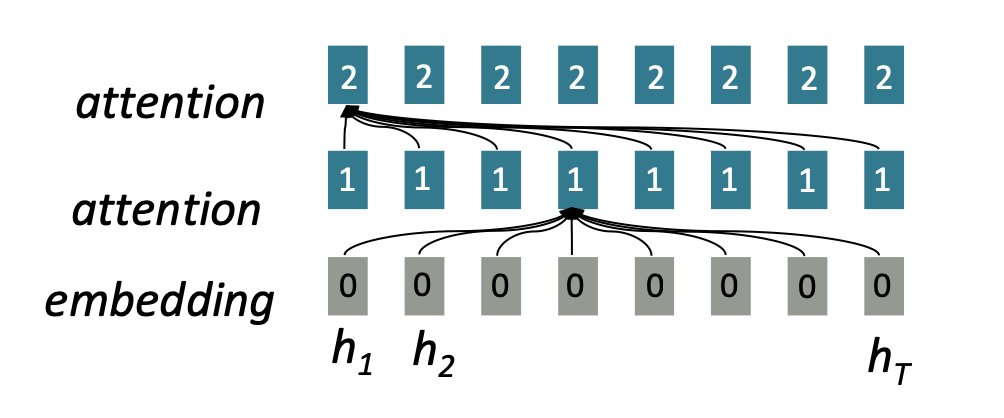

If recurrence has its flaws, another adjustment of the attention

mechanism might be beneficial. Until now, it was defined from decoder to

encoder. Alternatively, attention could also be from one state to all

states in the same set. This is the definition of self-attention, which

is encoder-encoder or decoder-decoder attention (instead of

encoder-decoder) and represents a cornerstone of the transformer

architecture. depicts this process in which each word attends to all

words in the previous layer. Even though in practice, most arrows are

omitted eventually.

FIGURE 2.13: Connections of classic attention mechanism (Source: Manning, Goldie, and Hewitt (2022)).

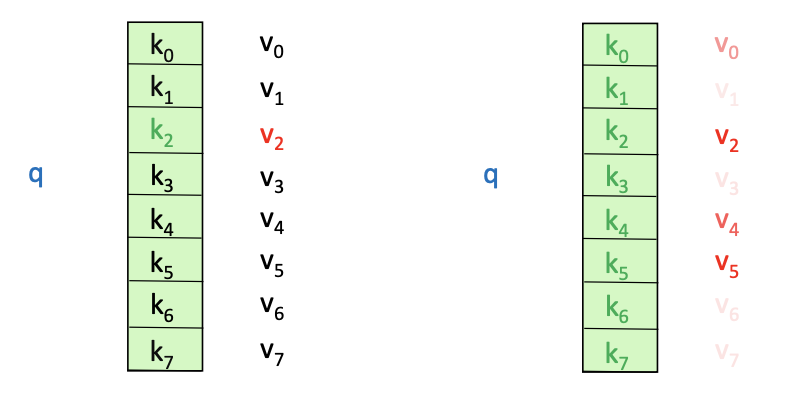

Thinking of self-attention as an approximate hash table eases understanding its intuition. To look up a value, queries are compared against keys in a table. In a hash table, which is shown on the left side of , there is exactly one key-value pair for each query (hash). In contrast, in self-attention, each key is matched to varying degrees by each query. Thus, a sum of values weighted by the query-key match is returned.

FIGURE 2.14: Comparison of classic attention mechanism with self-attention with hash tables (Source: Manning, Goldie, and Hewitt (2022)).

The process briefly described in the last paragraph can be summarized by the following steps that mainly follow Manning, Goldie, and Hewitt (2022). Firstly, deriving query, key, and value for each word \(x_i\) is necessary: \[q_i = W^Q x_i , \hspace{3mm} k_i = W^K x_i, \hspace{3mm} v_i = W^V x_i\]

Secondly, the attention scores have to be calculated:

\[e_{ij} = q_i k_j\]

Thirdly, to normalize the attention scores, the softmax function is applied:

\[\alpha_{ij} = softmax( e_{ij} ) = \frac{exp(e_{ij})}{\displaystyle \sum_k e_{ij}}\]

Lastly, taking the weighted sum of the values results in obtaining the attention output:

\[a_{i} = \displaystyle \sum_j \alpha_{ij} v_j\]

Multiple advantages of incorporating self-attention instead of

recurrences have been revealed. Since all words interact at every layer,

the maximum interaction distance is \(O(1)\) and is a crucial upgrade. In

addition, the model is deeply bidirectional because each word attends to

the context in both directions. As a result of these advances, all word

representations per layer can be computed in parallel. Nevertheless,

some issues have to be discussed. Attention does no more than weighted

averaging. So without neural networks, there are no element-wise

non-linearities. Their importance cannot be understated and shows why

attention is not actually all that is needed. Furthermore,

bidirectionality is not always desired. In language modelling, the model

should specifically be not allowed to simply look ahead and observe more

than the objective allows. Moreover, the word order is no longer

encoder, and it is bag-of-words once again.

Fortunately, the previously mentioned weaknesses have been addressed for

the original transformer-architecture proposed by Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, et al. (2017a). The

first problem can be easily fixed by applying a feed forward layer to

the output of attention. It provides non-linear activation as well as

extra expressive power. Then, for cases in which bidirectionality

contradicts the learning objective, future states can be masked so that

attention is restricted to previous states. Moreover, the loss of the

word can be corrected by adding position representations to the inputs.

The more complex deep learning models are, the closer they become to

model the complexity of the real world. That is why the transformer

encoder and decoder consist of many layers of self-attention with a feed

forward network, which is necessary to extract both syntactic and

semantic features from sentences. Otherwise, using word embeddings,

which are semantically deep representations between words, would be

unnecessary (Sejnowski 2020). At the same time, training deep

networks can be troublesome. Therefore, some tricks are applied to help

with the training process.

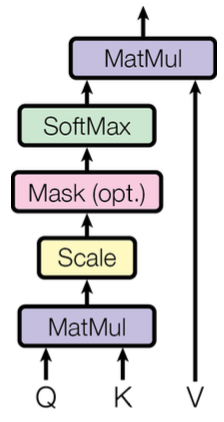

One of them is to pass the "raw" embeddings directly to the next layer, which prevents forgetting or misrepresent important information as it is passed through many layers. This process is called residual connections and is also believed to smoothen the loss landscape. Additionally, it is problematic to train the parameters of a given layer when its inputs keep shifting because of layers beneath. Reducing uninformative variation by normalizing within each layer to mean zero and standard deviation to one weakens this effect. Another challenge is caused by the dot product tending to take on extreme values because of the variance scaling with increasing dimensionality \(d_k\). It is solved by Scaled Dot Product Attention (see Figure 2.15), which consists of computing the dot products of the query with its keys, dividing them by the dimension of keys \(\sqrt{d_k}\), and applying the softmax function next to receive the weights of the values.

FIGURE 2.15: Scaled dot-product attention (Source: Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, et al. (2017a)).

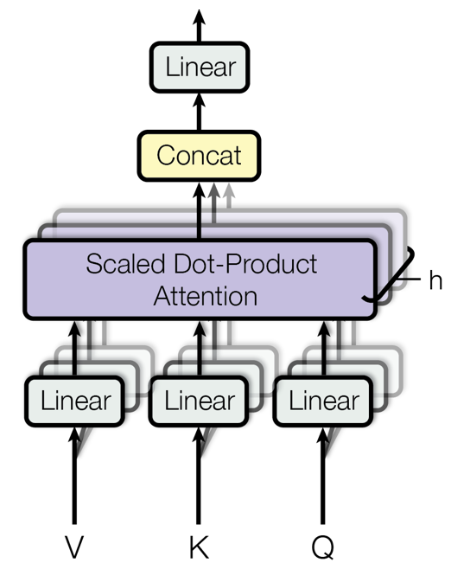

Attention learns where to search for relevant information. Surely, attending to different types of information in a sentence at once delivers even more promising results. To implement this, the idea is to have multiple attention heads per layer. While one attention head might learn to attend to tense information, another might learn to attend to relevant topics. Thus, each head focuses on separate features, and construct value vectors differently. Multi-headed self-attention is implemented by simply creating \(n\) independent attention mechanisms and combining their outputs.

FIGURE 2.16: Multi-head attention (Source: Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, et al. (2017a)).

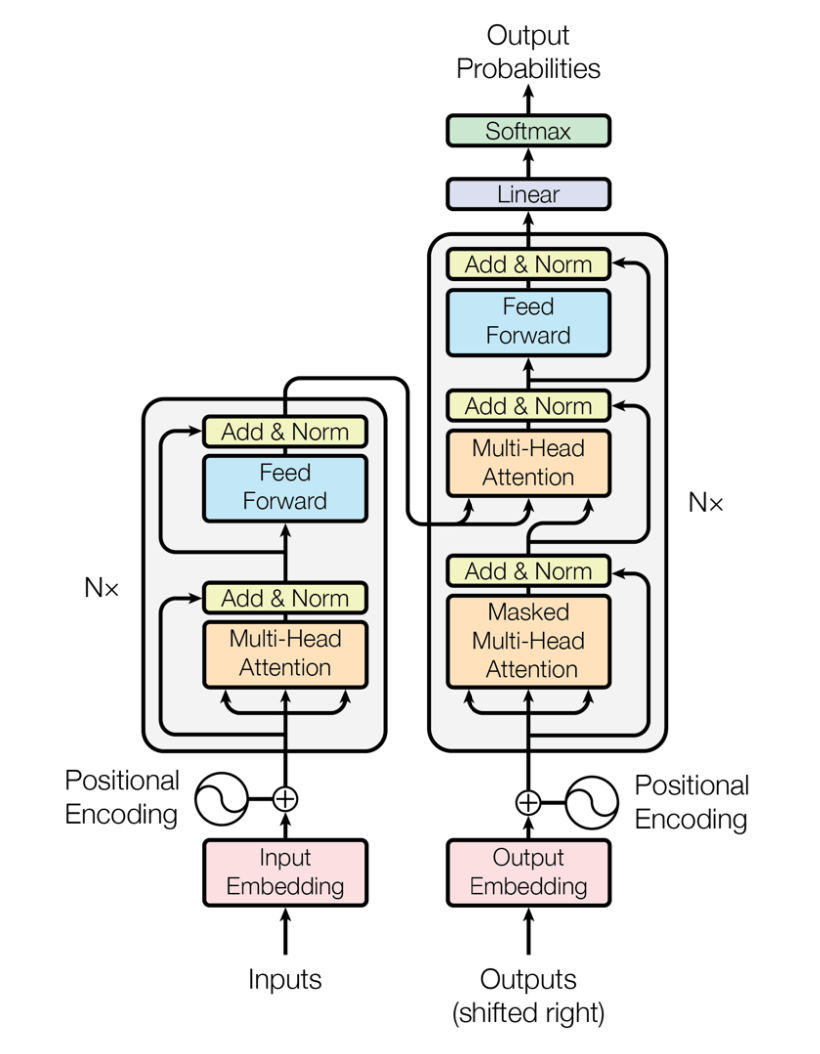

At this point, every part that constitutes the encoder in the transformer architecture has been introduced (see Figure 2.17). First, positional encodings are included in the input embeddings. There are multiple options to realize this step, e.g. through sinusoids. The multi-head attention follows, which was just mentioned. "Add & Norm" stands for the residual connections and the normalization layer. A feed forward network follows, which is also accompanied by residual connections and a normalization layer. All of it is repeated \(n\) times. For the decoder, the individual components are similar. One difference is that the outputs go through masked multi-head attention before multi-head attention and the feed forward network (with residual connections and layer normalization). It is critical to ensure that the decoder cannot peek at the future. To execute this, the set of keys and queries could be modified at every time step to only include past words. However, it would be very inefficient. Instead, to enable parallelization, future states are masked by setting the attention scores to \(-\infty\). After the decoder process is also repeated \(n\) times, a linear layer is added to project the embeddings into a larger vector that has the length of the vocabulary size. At last, a softmax layer generates a probability distribution over the possible words.

2.1.6 Transformer architectures: BERT, T5, GPT-3

"You shall know a word by the company it keeps", an adage by linguist

John Rupert Firth from 1957 goes. Even earlier, in 1935, he stated that

"... the complete meaning of a word is always contextual, and no study

of meaning apart from a complete context can be taken seriously". The

quotes of the famous linguist sum up the motivation to learn word

meaning and context perfectly. Many years later, in 2017, pretraining

word embeddings started. However, some complications arise from solely

pretraining the first part of the network. For instance, to teach the

model all contextual aspects of language, the training data for the

downstream task (e.g. question answering) needs to be adequate.

Additionally, most of the parameters are usually randomly initialized.

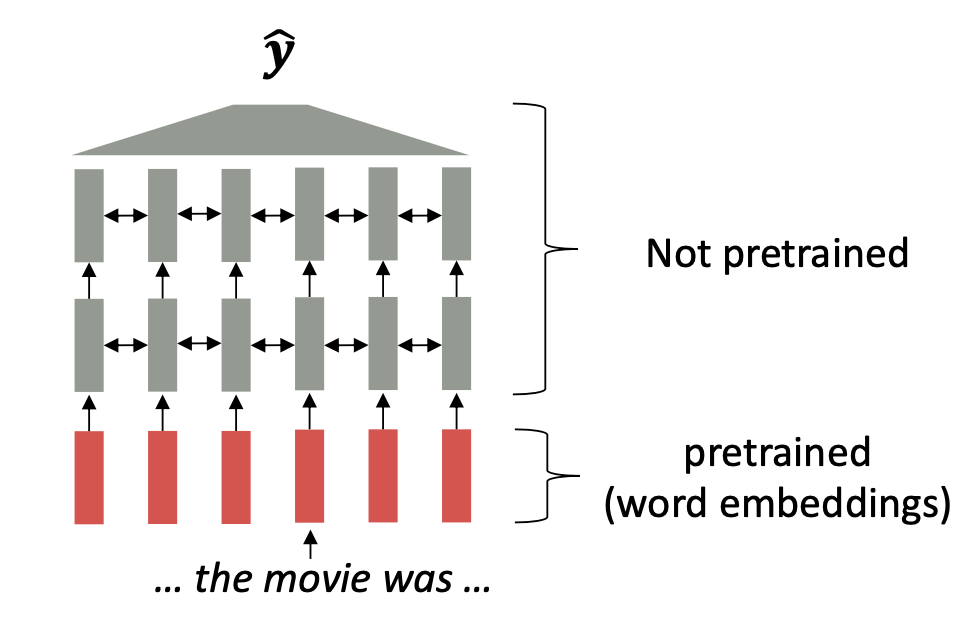

presents the network discussed, in which the word "movie" gets the

same embedding irrespective of the sentence it appears in. On the

contrary, parameters in modern NLP architectures are initialized via

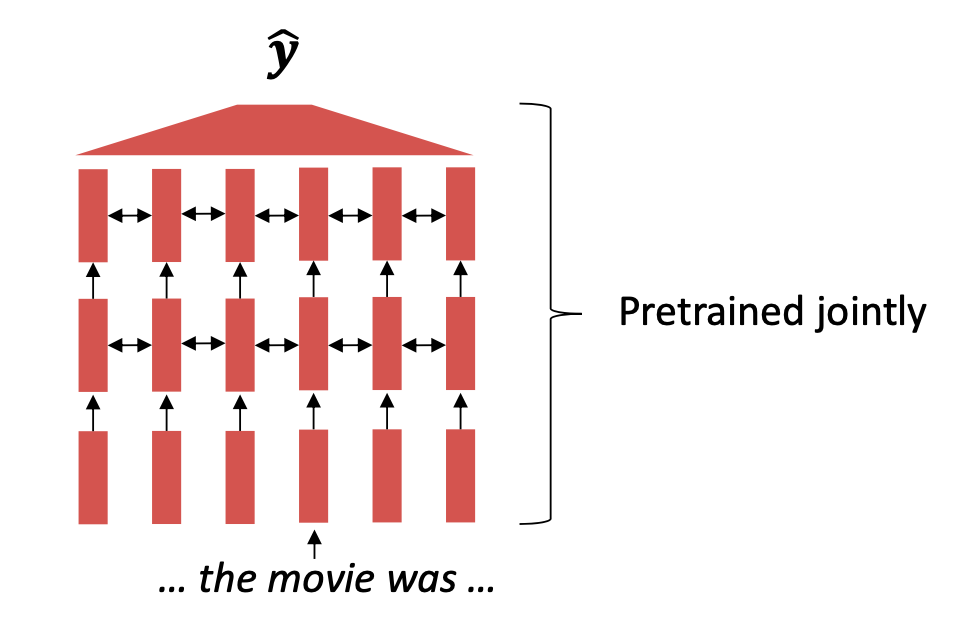

pretraining (see Figure 2.18). Furthermore, during the pretraining, certain input

parts are hidden to train the model to reconstruct them. This leads to

building suitable parameter initializations and robust probability

distributions over language.

FIGURE 2.18: Partly pre-trained model (Source: Manning, Goldie, and Hewitt (2022)).

FIGURE 2.19: Jointly pre-trained model (Source: Manning, Goldie, and Hewitt (2022)).

Classic machine learning does not match human learning. Specifically

referring to training a model from scratch, and only being able to learn

from the training data. In contrast, human beings already have prior

knowledge they can apply to new tasks. Transfer learning emulates this

by using an already trained network. The main idea is to use a model

that was pretrained on a hard, general language understanding task using

endless amounts of data, so that, it eventually contains the best

possible approximation of language understanding. Afterwards, the

training data for the new task is applied to slightly modify the weights

of the pretrained model, which is referred to as fine-tuning

(Manning, Goldie, and Hewitt 2022).

The specific architecture of a transformer model affects the type of pre-training, and favourable use cases. In the following, three different but very influential transformer architectures will be discussed. BERT can be seen as stacked encoders (Devlin et al. 2018b), T5 aims to combine the good parts of encoders and decoders (Raffel et al. 2019a), while GPT are stacked decoders (Brown et al. 2020).

2.1.6.1 BERT

Transfer learning led to state-of-the-art results in natural language

processing. One of the architectures that led the way was BERT, which

stands for Bidirectional Encoder Representations from Transformers. It

receives bidirectional context, which is why it is not a natural fit for

language modelling. To train it on this objective regardless, masked

language modelling was proposed. The main idea is to cover up a fraction

of the input words and let the model predict them. In this way, the LM

objective can be used while sustaining connections to words in the

future. The masked LM for BERT randomly predicts 15% of all word tokens

in each sequence. Of those, 80% are replaced by the \[MASK\] token, 10%

by a random token, and 10% remain unchanged. Moreover, because the

masked words are not even seen in the fine-tuning phase, the model

cannot get complacent and relies on strong representations of non-masked

words. Initially, BERT had an additional objective of whether one

sentence follows another, which is known as next sentence prediction.

However, it was dropped in later work due to having an insignificant

effect.

BERT is hugely versatile and was greatly popular after its release. Fine-tuning BERT led to outstanding results on a variety of applications, including question answering, sentiment analysis and text summarization. Thanks to its design, if the task involves generating sequences, pretrained decoders outperform pretrained encoders like BERT. Even though, it would not be recommended for autoregressive generation, up to this day, "small" models like BERT are applied as general tools for numerous tasks.

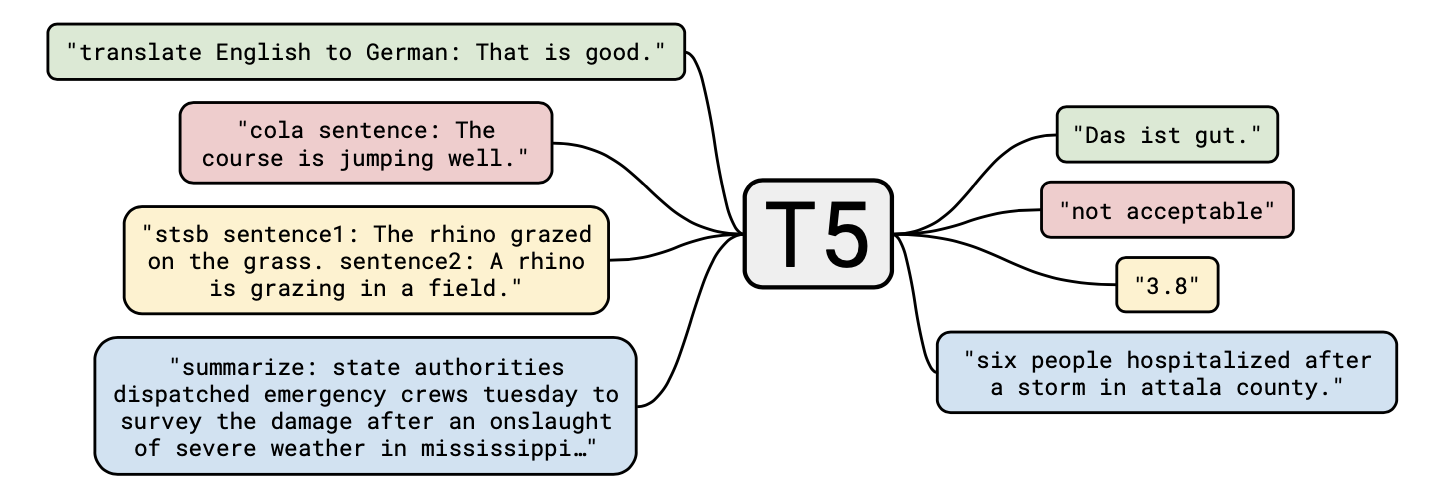

2.1.6.2 T5

The Text-To-Text Transfer Transformer (T5) is a new model that can be regarded as an application of the insights gathered by an extensive empirical study searching for the best transfer learning techniques. It is pretrained on Colossal Clean Crawled Corpus (C4), an open-source dataset. Raffel et al. (2019a) found that the best pretraining objective to use for the encoder component was span corruption. In short, different length word groups (spans) are replaced with unique placeholders, and let the model decode them. Text preprocessing is necessary for its implementation. For the decoder, it is still a language modelling task. Compared to models like BERT, which can only output a span of the input or a class label, T5 reframes all NLP tasks into a unified text-to-text format, where inputs and outputs always consist of text strings. As a result, the same model, loss function, and hyperparameters can be used on any NLP task, such as machine translation, document summarization, question answering, and classification tasks like sentiment analysis. T5 can even be applied to regression tasks by training it to predict the string representation of a number (and not the number itself). Examples of potential use cases are depicted in below.

2.1.6.3 GPT-3

As previously stated, the neural architecture influences the type of

pretraining. The original GPT architecture consists of a Transformer

decoder with 12 layers (Radford et al. 2018). For decoders, it is

sensible to simply pretrain them as language models. Afterwards, they

can be used as generators to fine-tune their probability of predicting

the next word conditioned on the previous words. The models are suitable

for tasks similar to the training, including any type of dialogue and

document summarization. Transformer language models are great for

transfer learning. They are fine-tuned by randomly initializing a

softmax classifier on top of the pretrained model and training both

(with only a very small learning rate and a small number of epochs) so

that the gradient propagates through the whole network.

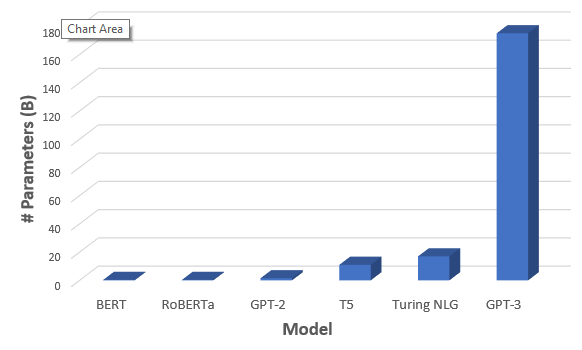

The success of BERT in 2018 prompted a "gold rush" in NLP, in which ever greater language models were created. One that topped the headlines and used a customer supercluster for computation was the third iteration of the GPT architecture by OpenAI, known as GPT-3. reveals why GPT-3 is a famous example of current research focusing on scaling up neural language models. While the largest T5 model has 11 billion parameters, GPT-3 has 175 billion parameters. Moreover, the training data set contains around 500 billion tokens of text, while the average young american child hears around 6 million words per year (Hart and Risley 1995). The results of huge language models suggest that they perform some form of learning (without gradient steps) simply from examples provided via context. The tasks are specified by the in-context examples, and the conditional probability distribution simulates performing the task to an extent.

FIGURE 2.21: Comparison of number of parameters between Transformer-architectures (Source: Saifee (2020)).

2.1.7 Current Topics

2.1.7.1 Concerns regarding growing size of Language Models

As the last chapter ended with GPT-3 and emphasized the concerning trend

of ever larger language models, one could ask which other costs arise

from the developments. Risks and harms among environmental and financial

costs have been studied by Bender et al. (2021). They state that marginalized

communities are not only less likely to benefit from LM progress, but

also more likely to suffer from the environmental repercussions of

increasing resource consumption. Strubell, Ganesh, and McCallum (2019a) estimated that training

a Transformer (big) model resulted in 249t of \(CO_2\). To compare, an

average human is responsible for approximately 5t of \(CO_2\) per year

(Ritchie, Roser, and Rosado 2020). In addition, they discovered that an

estimated increase of 0.1 in BLEU score increased computation costs by

$ 150,000 (for English to German translations). Furthermore, larger

models require more data to sufficiently train them. This has resulted

in large but poorly documented training data sets. Multiple risks can be

mitigated if there is a common understanding of the model’s learnings.

Moreover, it has been argued that datasets consisting of web data over-represent hegemonic views and encode bias towards marginalized communities. This is among other factors due to internet access being unevenly distributed. In particular, there is an over-representation of younger internet users and those from developed countries. It is generally naive to educate AI systems on all aspects of the complex world, and hope for the beautiful to prevail (Bender et al. 2021).

2.1.7.2 Improving Understanding of Transformer-based models

The results of transformer-based models clearly show that they deliver

successful results. However, it is less clear why. The size of the

models makes it difficult to experiment with them. Nevertheless, having

a limited understanding restrains researchers from coming up with

further improvements. Therefore, multiple papers analysed BERT’s

attention in search of an improved understanding of large transformer

models. BERT is a smaller model out of the more popular ones, and its

attention is naturally interpretable because the attention weight

indicates how significant a word is for the next representation of the

current word (Clark et al. 2019). In the following, some of the findings are

going to be shared.

BERT representations are rather hierarchical than linear, and they

include information about parts of speech, syntactic chunks and roles

(Lin, Tan, and Frank 2019, @Liu2019) Furthermore, it has semantic knowledge.

For example, BERT can recognize e.g. that "to tip a chef" is better

than "to tip a robin" but worse than "to tip a waiter"

((Ettinger 2019)). However, it makes sense that BERT has issues with

knowledge that is assumed and not mentioned, which especially refers to

visual and perceptual properties (Da and Kasai 2019). Additionally, BERT

struggles with inferences, e.g. even though it is known that "people

walk into houses" and "houses are big", it cannot infer that "houses

are bigger than people" (Forbes, Holtzman, and Choi 2019).

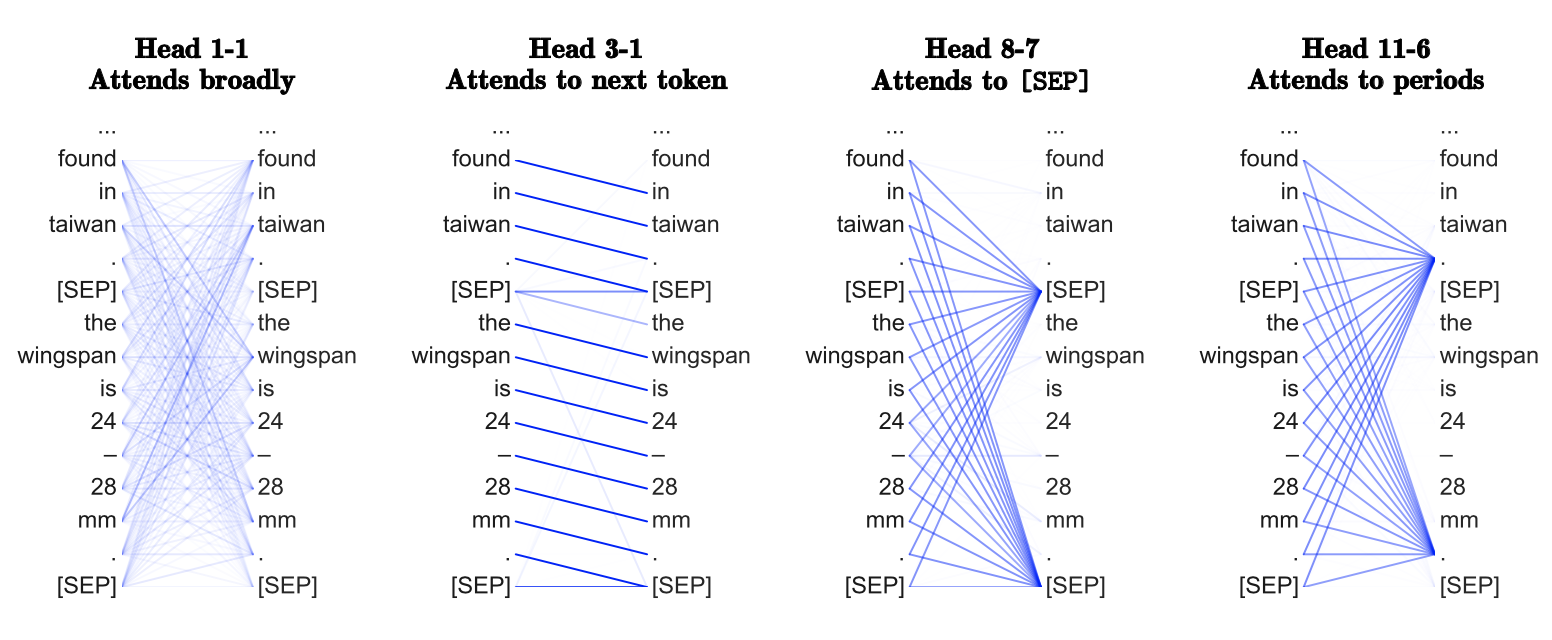

While it is true that different transformer heads attend to various patterns (see ), interestingly, most of them could be neglected without notable performance loss (Voita et al. 2019). Probing attention maps can be tedious, but allows to gain knowledge of common patterns, such as an unexpected amount focusing on the delimiter token \[SEP\].

FIGURE 2.22: Common patterns of attention heads (Source: Clark et al. (2019)).

2.1.7.3 Few-Shot Learning

For NLP tasks, the model is usually trained on a set of labelled

examples and is expected to generalize to unseen data. Annotating is not

only costly but also difficult to gather for numerous languages,

domains, and tasks. In practice, there is often only a very limited

amount of labelled examples. Consequently, few-shot learning is a highly

relevant research area (Schick and Schütze 2020). It defines a model that is

trained on a limited number of demonstrations to guide its predictions.

Referring back to , the benefits of lower computational and

environmental costs have to be mentioned.

Traditional fine-tuning uses a large corpus of example tasks, and the model is updated repeatedly with gradient steps so that it adapts to the task with minimal accuracy error.

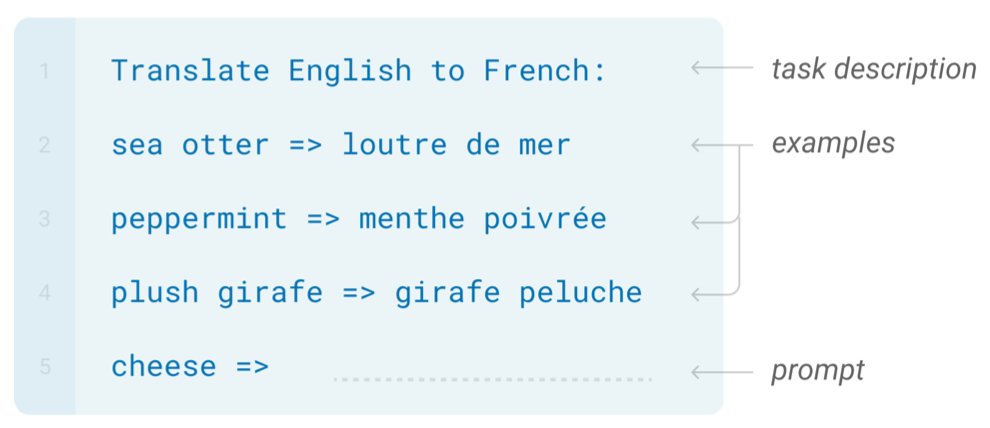

In contrast, few-shot applications have to complete tasks at test time with only forward passes. They have three main parts: the task description, examples, and the prompt. In Figure ??, the task is a translation from English to French, a few examples, as well as the word that should be translated are given. Moreover, zero-shot and one-shot learning refer to the model predicting with no and one learned example, respectively (Brown et al. 2020).

FIGURE 2.23: Few-shot learning (Source: Brown et al. (2020)).

It is complicated to create the few-shot examples, since the application

relies on them to express the task. This is why smaller models are

susceptible to examples written unfavourably. In Brown et al. (2020), it was

shown that few-shot performance scales with the number of model

parameters. Even though GPT-3’s in-context learning improved few-shot

prompting capabilities, it is still sensitive to the order of training

examples, decoding strategy, and hyperparameter selection. All of this

combined with the fact that current research uses larger or held-out

data sets leads to the suspicion that the true few-shot ability of

language models is overestimated (Perez, Kiela, and Cho 2021a).

Moreover, Lialin et al. (2022) have found that common transformer models could not resolve compositional questions in a zero-shot fashion and that the model’s parameter count does not correlate with performance. This indicates a limitation for zero-shot prompting with the existing pre-training objectives. However, different models provided the best accuracy with regard to different symbolic reasoning tasks. This suggests that optimization or masking strategies could be more significant than the pre-training, data set size or model architecture.

2.1.8 Summary

Natural Language Processing has been one of the most exciting fields of machine learning in the last decade considering all the breakthroughs discussed in this work. Word embeddings made it possible and allowed developers to encode words as dense vectors that capture their underlying semantic content. In this way, similar words are embedded close to each other in a lower-dimensional feature space. Another important challenge was solved by encoder-decoder (also called sequence-to-sequence) architectures, which made it possible to map input sequences to output sequences of different lengths. They are especially useful for complex tasks like machine translation, video captioning or question answering. A significant state-of-the-art technique is attention, which enabled models to actively shift their focus – just like humans do. It allows following one thought at a time while suppressing information irrelevant to the task. As a consequence, it has been shown to significantly improve performance for tasks like machine translation. By giving the decoder access to directly look at the source, the bottleneck is avoided and at the same time, it provides a shortcut to faraway states and thus helps with the vanishing gradient problem. One of the most recent data modelling techniques is the transformer, which is solely based on attention and does not have to process the input data sequentially. Therefore, the deep learning model is better in remembering context-induced earlier in long sequences. It is the dominant paradigm in NLP currently and makes better use of GPUs because it can perform parallel operations. Transformer architectures like BERT, T5 or GPT-3 are pre-trained on a large corpus and can be fine-tuned for specific language tasks. They can generate stories, poems, code and much more. Currently, there seems to be breaking transformer news nearly every week with no sign of slowing. This is why many trends could be recognized as relevant current topics. One of them is increasing concerns regarding the growing size of language models and the correlated environmental and financial costs. Another active research aspect is concerned with improving the understanding of transformer-based models to further advance them. Additionally, there are many studies about achieving respectable results on language modelling tasks after only learning from a few examples, which is known as few-shot learning.

2.2 State-of-the-art in Computer Vision

Author: Vladana Djakovic

Supervisor: Daniel Schalk

2.2.1 History

The first research about visual perception comes from neurophysiological research performed in the 1950s and 1960s on cats. The researchers used cats as a model to understand how human vision is compounded. Scientists concluded that human vision is hierarchical and neurons detect simple features like edges followed by more complex features like shapes and even more complex visual representations. Inspired by this knowledge, computer scientists focused on recreating human neurological structures.

At around the same time, as computers became more advanced, computer scientists worked on imitating human neurons’ behavior and simulating a hypothetical neural network. In his book “The Organization of Behaviour” (1949) Donald Hebbian stated that neural pathways strengthen over each successive use, especially between neurons that tend to fire at the same time, thus beginning the long journey towards quantifying the complex processes of the brain. The first Hebbian network, inspired by this neurological research, was successfully implemented at MIT in 1954 (Jaspreet 2019).

New findings led to the establishment of the field of artificial intelligence in 1956 on-campus at Dartmouth College. Scientists began to develop ideas and research how to create techniques that would imitate the human eye.

In 1959 early research on developing neural networks was performed at Stanford University, where models called “ADALINE” and “MADALINE,” (Multiple ADAptive LINear Elements) were developed. Those models aimed to recognize binary patterns and could predict the next bit (“Neural Networks - History” 2022).

Starting optimism about Computer Vision and neural networks disappeared after 1969 and the publication of the book “Perceptrons” by Marvin Minsky, founder of the MIT AI Lab, stated that the single perception approach to neural networks could not be translated effectively into multi-layered neural networks. The period that followed was known as AI Winter, which lasted until 2010, when the technological development of computer and the internet became widely used. In 2012 breakthroughs in Computer Vision happened at the ImageNet Large Scale Visual Recognition Challenge (ILSVEC). The team from the University of Toronto issued a deep neural network called AlexNet (Krizhevsky, Sutskever, and Hinton 2012a) that changed the field of artificial intelligent and Computer Vision (CV). AlexNet achieved an error rate of 16.4%.

From then until today, Computer Vision has been one of the fastest developing fields. Researchers are competing to develop a model that would be the most similar to the human eye and help humans in their everyday life. In this chapter the author will describe only a few recent state-of-the-art models.

2.2.2 Supervised and unsupervised learning

As part of artificial intelligence (AI) and machine learning (ML), there are two basic approaches:

- supervised learning;

- unsupervised learning.

Supervised learning (Education 2020a) is used to train algorithms on labeled datasets that accurately classify data or predict outcomes. With labeled data, the model can measure its accuracy and learn over time. Among others, we can distinguish between two common supervised learning problems:

- classification,

- regression.

In unsupervised learning (Education 2020b), unlabelled datasets are analyzed and clustered using machine learning algorithms. These algorithms aim to discover hidden patterns or data groupings without previous human intervention. The ability to find similarities and differences in information is mainly used for three main tasks:

- clustering,

- association,

- dimensionality reduction.

Solving the problems where the dataset can be both labeled and unlabeled requires a semi-supervised approach that lies between supervised and unsupervised learning. It is useful when extracting relevant features from complex and high volume data, i.e., medical images.

Nowadays, a new research topic appeared in the machine learning community, Self-Supervised Learning. Self-Supervised learning is a process where the model trains itself to learn one part of the input from another (techslang 2020). As a subset of unsupervised learning, it involves machines labeling, categorizing, and analyzing information independently and drawing conclusions based on connections and correlations. It can also be considered as an autonomous form of supervised learning since it does not require human input to label data. Unlike unsupervised learning, self-supervised learning does not focus on clustering nor grouping (Shah 2022). One part of Self-Supervised learning is contrastive learning, which is used to learn the general features of an unlabeled dataset identifying similar and dissimilar data points. It is utilized to train the model to learn about our data without any annotations or labels (Tiu 2021).

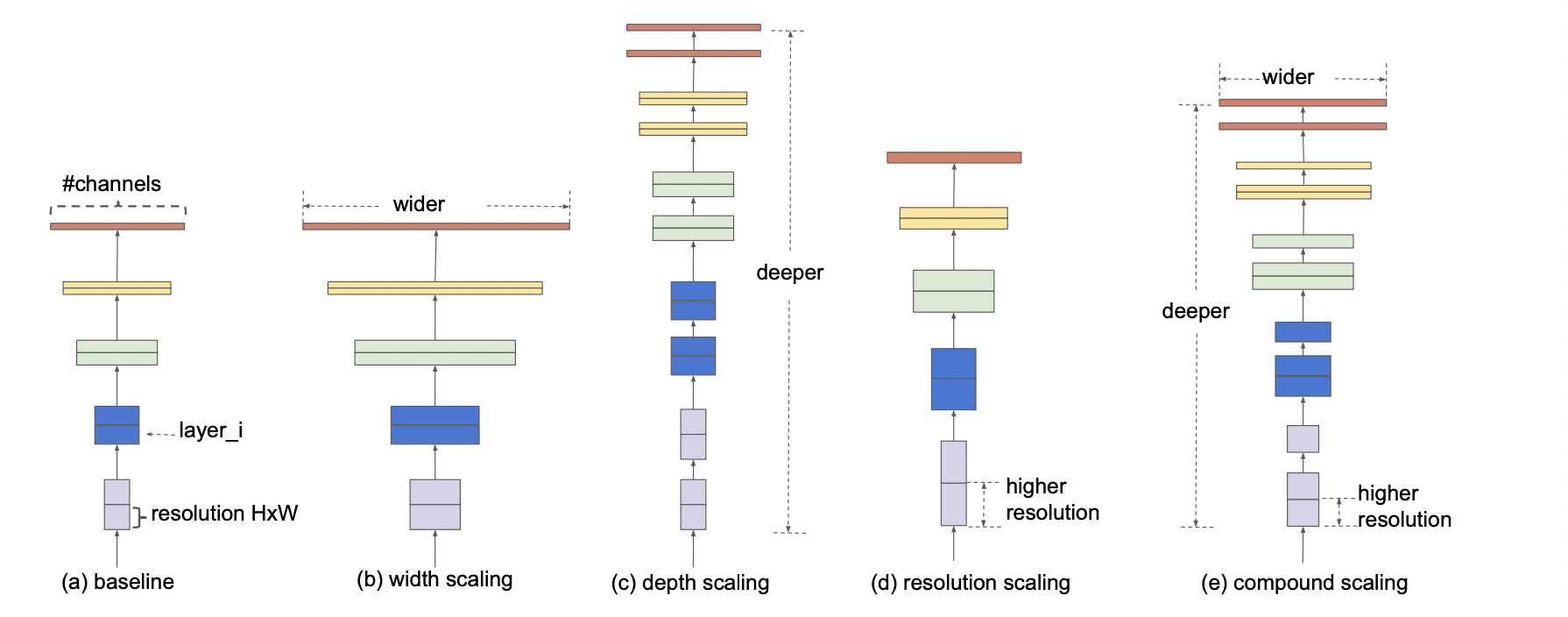

2.2.3 Scaling networks

Ever since the introduction of AlexNet in 2012, the problem of scaling convolutional neural networks (ConvNet) has become the topic of active research. ConvNet can be scaled in all three dimensions: depth, width, or image size. One of the first researches in 2015 showed that network depth is crucial for image classification. The question whether stacking more layers enables the network to learn better leads to deep residual networks called ResNet (He et al. 2015), which will be described in this work. Later on, scaling networks by their depth became the most popular way to improve their performance. The second solution was to scale ConvNets by their width. Wider networks tend to be able to capture more fine-grained features and are easier to train (Zagoruyko and Komodakis 2016). Lastly, scaling the image’s resolution can improve the network’s performance. With higher resolution input images, ConvNets could capture more fine-grained patterns. GPipe (Huang et al. 2018) is one of the most famous networks created by this technique. The question of possibility of scaling by all three dimensions was answered by M. Tan and Le (2019a) in the work presenting Efficient Net. This network was built by scaling up ConvNets by all three dimensions and will also be described here.

2.2.4 Deep residual networks

The deep residual networks, called ResNets (He et al. 2015), were presented as the answer on the question whether stacking more layers would enable network to learn better. Until then one obstacle for simply stacking layers was the problem of vanishing/exploding gradients. It has been primarily addressed by normalized initialization and intermediate normalization layers. That enabled networks with tens of layers to start converging for stochastic gradient descent (SGD) with backpropagation.

Another obstacle was a degradation problem. It occurs when the network depth increases, followed by saturating and then rapidly decreasing accuracy. Overfitting is not caused by such degradation, and adding more layers to a suitably deep model leads to higher training error, which indicates that not all systems are similarly easy to optimize.

For example, it was suggested to consider a shallower architecture and its deeper counterpart that adds more layers. One way to avoid the degradation problem is to create a deeper model, where the auxiliary layers are identity mappings and other layers are copied from a shallower model. The deeper model should produce no higher training error than its shallower counterpart. However, in practice it is not the case and it is hard to find comparably good constructs or better solutions. The solution to this degradation problem proposed by them is a deep residual learning framework.

2.2.4.1 Deep Residual Learning

2.2.4.1.1 Residual Learning

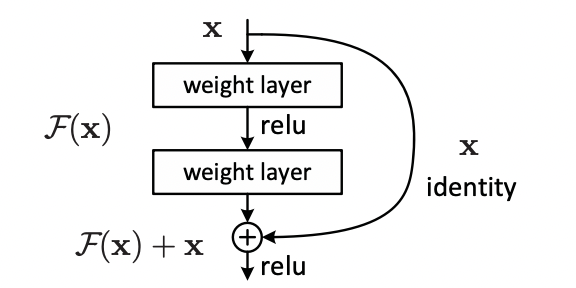

The idea of residual learning is to replace the approximation of underlying mapping \(H\left( x\right)\), which is approximated by a few stacked layers (not necessarily the entire net), with an approximation of residual function \(F(x):= H\left( x \right) − x\). Here x denotes the inputs to the first of these layers, and it is assumed that both inputs and outputs have the same dimensions. The original function changes its form \(F\left( x \right)+x\).

A counter-intuitive phenomenon about degradation motivated this reformulation. The new deeper model should not have a more significant training error when compared to a construction using identity mappings. However, due to the degradation problem, solvers may have challenges approximating identity mappings by multiple non-linear layers. Using the residual learning reformulation can drive the weights of the non-linear layers toward zero to approach identity mappings if they are optimal. Generally, identity mappings are not optimal, but new reformulations may help to pre-condition the problem. When an optimal function is closer to an identity mapping than a zero mapping, finding perturbations concerning an identity mapping should be easier than learning the function from scratch.

2.2.4.1.2 Identity Mapping by Shortcuts

Residual learning is adopted to every few stacked layers where a building block is defined:

\[\begin{equation} \tag{2.1} y = F \left( x,\left\{ W_i\right\} \right) + x \end{equation}\]

x and y present the input and output vectors of the layers. Figure 2.24 visualizes the building block.

FIGURE 2.24: Building block of residual learning (He et al. 2015).

The function \(F \left( x,\left\{ W_i\right\} \right)\) represents the residual mapping that is to be learned. For the example with two layers from Figure 2.24, \(F = W_2\sigma\left( W_1x\right)\) in which \(\sigma\) denotes the ReLU activation function. Biases are left out to simplify the notation. The operation \(F + x\) is conducted with a shortcut connection and element-wise addition. Afterward, a second non-linear (i.e., \(\sigma \left( y \right)\) transformation is applied.

The shortcut connections in Equation (2.1) neither adds an extra parameter nor increases computation complexity and enables a comparisons between plain and residual networks that concurrently have the same number of parameters, depth, width, and computational cost (except for the negligible element-wise addition). The dimensions of \(x\) and \(F\) in Equation (2.1) must be equal. Alternatively, to match the dimensions, linear projection \(W_s\) by the shortcut connections can be applied:

\[\begin{equation} \tag{2.2} y = F \left( x,\left\{ W_i\right\} \right)+ W_sx. \end{equation}\]

The square matrix \(W_s\) can be used in Equation (2.2). However, experiments showed that identity mapping is enough to solve the degradation problem. Therefore, \(W_s\) only aims to match the dimensions. Although more levels are possible, it was experimented with function \(F\) having two or three layers without stating the exact form of it. Assuming \(F\) only has one layer (Equation (2.1)) it is comparable to a linear layer: \(y = W_1 x + x\). The theoretical notations are about fully-connected layers, but convolutional layers were used. The function \(F \left( x,\left\{ W_i\right\} \right)\) can be applied to represent multiple convolutional layers. Two feature maps are added element-wise, channel by channel.

2.2.4.2 Network Architectures

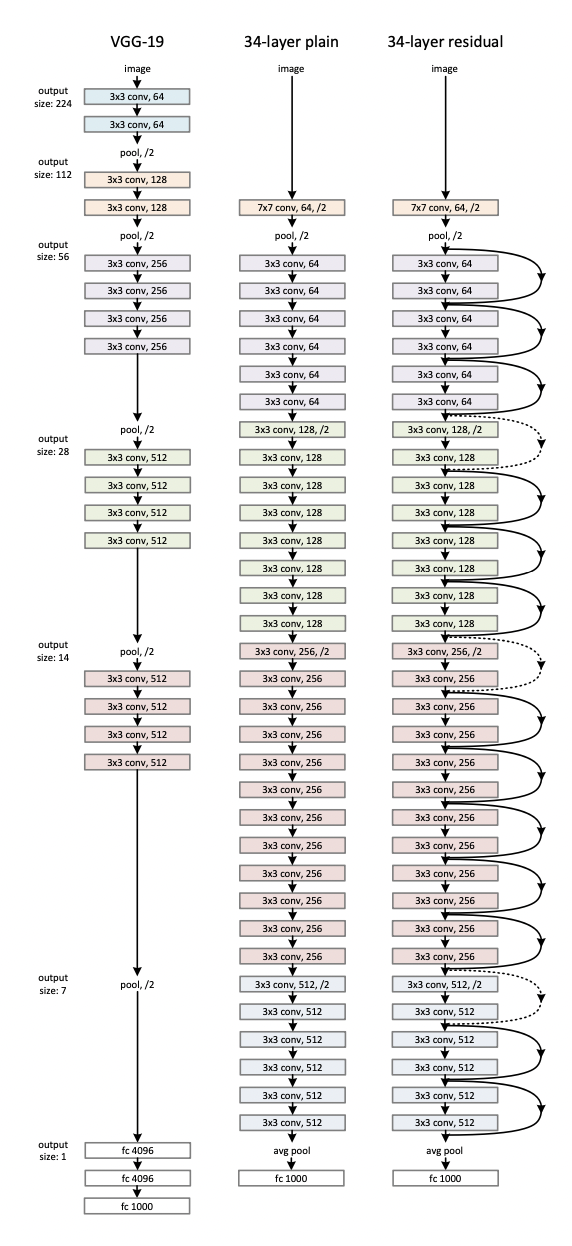

Various plain/residual networks were tested to construct an efficient residual network. They trained the network on benchmarked datasets, e.g. the ImageNet dataset, that are used for a comparison of network architectures. Figure (2.2) shows that every residual network needs a plain baseline network inspired by the VGG (Simonyan and Zisserman 2014) network on which identity mapping by shortcuts is applied.

Plain Network: The philosophy of VGG nets 41 mainly inspires the plain baselines. Two rules convolution layers, which usually have \(3\times 3\) filters, follow are:

- feature maps with the same output size have the same number of layers;

- reducing the size of a feature map by half doubles the number of filters per layer to maintain time complexity per layer.

Convolutional layers with a stride of 2 perform downsampling directly. A global average pooling layer and a 1000-way fully-connected layer with softmax are at the end of the network. The number of weighted layers sums up to 34 (Figure 2.25, middle). Compared to VGG nets, this model has fewer filters and lower complexity (Figure 2.25, left).

Residual Network: Based on the above plain network, additional shortcut connections (Figure 2.25, right) turn the network into its associate residual variant. The identity shortcuts (Equation (2.1)) can be directly used in the case of the exact dimensions of the input and output (solid line shortcuts in Figure 2.25). For the different dimensions (dotted line shortcuts in Figure 2.25), two options are considered:

- The shortcut still performs identity mapping, but with extra zero entries padded to cope with the increasing dimensions, without adding new parameters;

- The projection shortcut in Equation (2.2) matches dimensions (due to \(1\times 1\) convolutions).

In both cases, shortcuts will be done with a stride of two when they go across feature maps of two sizes.

FIGURE 2.25: Architecture of ResNet (He et al. 2015).

2.2.5 EfficientNet

Until (???) introduced EfficientNet, it was popular to scale only one of the three dimensions – depth, width, or image size. The empirical study shows that it is critical to balance all network dimensions, which can be achieved by simply scaling each with a constant ratio. Based on this observation, a simple yet effective compound scaling method was proposed, which uniformly scales network width, depth, and resolution with a set of fixed scaling coefficients. For example, if \(2N\) times more computational resources are available, increasing the network depth by \(\alpha N\), width by \(\beta N\), and image size by \(\gamma N\) would be possible. Here \(\alpha,\beta,\gamma\) are constant coefficients determined by a small grid search on the original miniature model. Figure 2.26 illustrates the difference between this scaling method and conventional methods. A compound scaling method makes sense if an input image is bigger because a larger receptive field requires more layers and more significant channel features to capture fine-grained patterns. Theoretically and empirically, there has been a special relationship between network width and depth (Raghu et al. 2016). Existing MobileNets (Howard et al. 2017) and ResNets are used to demonstrated new scaling methods.

2.2.5.1 Compound Model Scaling

2.2.5.1.1 Problem Formulation

A function \(Y_i = \mathcal{F}_i \left( X_i \right)\) with the operator \(\mathcal{F}_i\), output tensor \(Y_i\), input tensor \(X_i\) of shape \(\left( H_i, W_i, C_i \right)\), spatial dimensions \(H_i\), \(W_i\), and channel dimension \(C_i\) is called a ConvNet Layer \(i\). A ConvNet N appears as a list of composing layers: \[ \mathcal{N}=\mathcal{F_k}\odot \cdots \mathcal{F_2}\odot\mathcal{F_1}\left( X_1 \right)=\bigodot{j=1\cdots k}\mathcal{F_j}\left( X_1 \right) \]

Effectively, these layers are often partitioned into multiple stages and all layers in each stage share the same architecture. For example, ResNet has five stages with all layers in every stage being the same convolutional type except for the first layer that performs down-sampling. Therefore, a ConvNet can be defined as:

\[ \mathcal{N}=\bigodot_{i=1\cdots s}\mathcal{F_i}^{L_i}\left( X_{\left( H_i, W_i, C_i \right)} \right) \]

where \(\mathcal{F_i}^{L_i}\) denotes layer \(\mathcal{F_i}\) which is repeated \(L_i\) times in stage \(i\), and \(\left( H_i, W_i, C_i \right)\) is the shape of input tensor \(X\) of layer \(i\).

In comparison to the regular ConvNet focusing on the best layer architecture search \(\mathcal{F_i}\), model scaling centers on the expansion of the network length \(\left( L_i\right)\), width \(\left( C_i \right)\), and/or resolution \(\left( H_i, W_i\right)\) without changing \(\mathcal{F_i}\) that was predefined in the baseline network. Although model scaling simplifies the design problem of the new resource constraints through fixing \(\mathcal{F_i}\), a different large design space \(\left( L_i, H_i, W_i, C_i \right)\) for each layer remains to be explored. To further reduce the design space, all layers are restricted to be scaled uniformly with a constant ratio. In this case, the goal is to maximize the model’s accuracy for any given resource constraint, which is presented as an optimization problem:

\[\begin{align*} \max_{d,w,r} &\text{Accuracy} \left( \mathcal{N}\left( d,w,r \right) \right) \\ s.t.\mathcal{N}\left( d,w,r \right) &=\bigodot_{I=1...s}\hat{\mathcal{F}}{i}^{d\cdot \hat{L{i}}}\left( X_{\left\langle r\cdot \hat{H_i},r\cdot \hat{W_i},w\cdot \hat{C_i}\right\rangle} \right) \\ Memory\left( \mathcal{N} \right) &\leq\ targetMemory \\ FLOPS\left( \mathcal{N} \right) &\leq\ targetFlops \end{align*}\] where \(w,d,r\) are coefficients for scaling network width, depth, and resolution; \(\left(\widehat{\mathcal{F}}_i, \widehat{L}_i, \widehat{H}_i, \widehat{W}_i, \widehat{C}_i \right)\) are predefined parameters of the baseline network.

2.2.5.1.2 Scaling Dimensions

The main difficulty of this optimization problem is that the optimal \(d, w, r\) depend on each other and the values are changing under different resource constraints. Due to this difficulty, conventional methods mostly scale ConvNets in one of these dimensions:

Depth (\(d\)): One of the most significant networks previously described is the ResNet. As it was described, the problem of ResNets is that accuracy gain of a very deep network diminishes. For example, ResNet-1000 has similar accuracy to ResNet-101 even though it contains many more layers.

Width (\(w\)): Scaling network width is commonly used for small-sized models. However, wide but shallow networks tend to have difficulty grasping higher-level features.

Resolution (\(r\)): Starting from \(224\times 224\) in early ConvNets, modern ConvNets tend to use \(299\times 299\) or \(331\times 331\) for better accuracy. GPipe (Huang et al. 2018) recently achieved state-of-the-art ImageNet accuracy with \(480\times 480\) resolution. Higher resolutions, such as \(600\times 600\), are also widely used in ConvNets for object detection.

The above analyses lead to the first observation:

Observation 1: Scaling up any network width, depth, or resolution dimension improves accuracy. Without the upscaling, the gain diminishes for bigger models.

2.2.5.1.3 Compound Scaling

Firstly, it was observed that different scaling dimensions are not independent because higher resolution images also require to increase the network depth. The larger receptive fields can help capture similar features that include more pixels in bigger images. Similarly, network width should be increased when the resolution is higher to capture more fine-grained patterns. The intuition suggests that different scaling dimensions should be coordinated and balanced rather than conventional scaling in single dimensions. To confirm this thought, results of networks with width \(w\) without changing depth (\(d\)=1.0) and resolution (\(r\)=1.0) were compared with deeper (\(d\)=2.0) and higher resolution (\(r\)=2.0) networks. This showed that width scaling achieves much better accuracy under the same FLOPS. These results lead to the second observation:

Observation 2: To achieve better accuracy and efficiency, balancing the network width, depth, and resolution dimensions during ConvNet scaling is critical. Earlier researches have tried to arbitrarily balance network width and depth, but they all require tedious manual tuning.

A new compound scaling method, which uses a compound coefficient \(\varphi\) to uniformly scale network width, depth, and resolution in a principled way was proposed:

\[\begin{align} \begin{split} \text{depth:} &\mathcal{d}=\alpha^{\varphi} \\ \text{width:} &\mathcal{w}=\beta^{\varphi}\\ \text{resolution:} &\mathcal{r}=\gamma^{\varphi}\\ &s.t. \alpha\cdot \beta^{2}\cdot \gamma^{2}\approx 2\\ &\alpha \ge 1, \beta \ge 1, \gamma \ge 1 \end{split} \tag{2.3} \end{align}\]

where \(\alpha, \beta, \gamma\) are constants that can be determined by a small grid search, \(\varphi\) is a user-specified coefficient that controls how many more resources are available for model scaling, while \(\alpha, \beta, \gamma\) specify how to assign these extra resources to the network width, depth, and resolution, respectively. Notably, the FLOPS of a regular convolution operation is proportional to \(d, w^{2}, r^{2}\), i.e., doubling network depth will double the FLOPS, but doubling network width or resolution will increase the FLOPS by four times. Scaling a ConvNet following Equation (2.3) will approximately increase the total number of FLOPS by \(\left( \alpha\cdot \beta^{2}\cdot \gamma^{2} \right)^{\varphi}\). In this chapter, \(\alpha\cdot \beta^{2}\cdot \gamma^{2}\approx 2\) is constrained such that for any new \(\varphi\) the total number of FLOPS will approximately increase by \(2\varphi\).

2.2.5.2 EfficientNet Architecture

A good baseline network is essential because model scaling does not affect its layer operators \(F*[i]\). Therefore this method is also estimated on ConvNets. A new mobile-sized baseline called EfficientNet was developed to show the effectiveness of the new scaling method. Metrics that were used to estimate the efficacy are accuracy and FLOPS. The baseline efficient network that was created is named EfficientNet-B0. Afterwards, this compound scaling method is applied in two steps:

STEP 1: By fixing \(\varphi = 1\) and, assuming twice more resources available, a small grid search of $, , $ based on Equation (2.3) showed that the best values for EfficientNet-B0 are \(\alpha = 1.2, \beta = 1.1, \gamma=1.15\) under the constraint of \(\alpha·\beta^2·\gamma^2 ≈2\).

STEP 2: Afterwards, fix \(\alpha,\beta,\gamma\) as constants and scale up the baseline network with different \(\varphi\) using Equation (2.3) to construct EfficientNet-B1 to B7.