Chapter 04: First order methods

The first class of optimization methods we look at are first-order methods, which use only gradient (first-derivative) information about the objective function.

-

Chapter 4.01: Gradient descent

We start this chapter with the gradient descent (GD) algorithm, an iterative first-order optimization algorithm for finding a local minimum of a differentiable function. In each iteration it moves the current point a step in the direction of the negative gradient.
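
The basic iteration x_{k+1} = x_k - alpha * grad f(x_k) can be sketched in a few lines of plain Python. The function name gradient_descent, the fixed step size and the gradient-norm stopping rule are illustrative assumptions, not the chapter's prescribed implementation.

```python
def gradient_descent(grad, x0, step_size=0.1, max_iter=1000, tol=1e-8):
    """Minimal GD sketch: x_{k+1} = x_k - step_size * grad(x_k).

    grad returns the gradient as a list of floats (an assumed interface).
    Stops when the gradient norm falls below tol or after max_iter steps.
    """
    x = list(x0)
    for _ in range(max_iter):
        g = grad(x)
        if sum(gi * gi for gi in g) ** 0.5 < tol:
            break
        x = [xi - step_size * gi for xi, gi in zip(x, g)]
    return x


# Example: f(x, y) = (x - 3)^2 + (y + 1)^2 has its minimum at (3, -1).
def grad_f(p):
    x, y = p
    return [2 * (x - 3), 2 * (y + 1)]


x_min = gradient_descent(grad_f, [0.0, 0.0])
print(x_min)
```

With step size 0.1 the distance to the minimum shrinks by a factor of 0.8 per iteration on this function, so the loop stops after roughly 90 iterations.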

-

Chapter 4.02: Step size and optimality

In this subchapter we examine how the choice of step size affects the optimization. Furthermore, we look at methods for determining a suitable step size, such as the Armijo rule.
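
A common way to apply the Armijo rule is backtracking: start with a trial step alpha0 and shrink it by a factor beta until the sufficient decrease condition f(x - alpha*g) <= f(x) - c * alpha * ||g||^2 holds. The sketch below assumes this backtracking variant along the negative gradient; the helper names and the defaults alpha0 = 1, beta = 0.5 and c = 1e-4 are illustrative choices, not values taken from the chapter.

```python
def armijo_step(f, grad, x, alpha0=1.0, beta=0.5, c=1e-4, min_alpha=1e-12):
    """One GD step whose step size is chosen by Armijo backtracking.

    alpha is multiplied by beta until
        f(x - alpha * g) <= f(x) - c * alpha * ||g||^2
    holds. Returns the accepted step size and the new point.
    """
    g = grad(x)
    g_norm_sq = sum(gi * gi for gi in g)
    fx = f(x)
    alpha = alpha0
    while alpha > min_alpha:
        x_new = [xi - alpha * gi for xi, gi in zip(x, g)]
        if f(x_new) <= fx - c * alpha * g_norm_sq:
            return alpha, x_new
        alpha *= beta
    return 0.0, list(x)  # no step above min_alpha satisfied the condition; stay at x


def gd_armijo(f, grad, x0, iters=100):
    """GD where every step size is chosen by armijo_step."""
    x = list(x0)
    for _ in range(iters):
        _, x = armijo_step(f, grad, x)
    return x


# Example: the ill-scaled quadratic f(x, y) = x^2 + 10 y^2, minimum at (0, 0).
def f_example(p):
    return p[0] ** 2 + 10 * p[1] ** 2


def grad_example(p):
    return [2 * p[0], 20 * p[1]]


x_final = gd_armijo(f_example, grad_example, [1.0, 1.0])
print(x_final, f_example(x_final))
```

From the starting point (1, 1) the trial steps 1, 0.5, 0.25 and 0.125 are rejected and 0.0625 is accepted, so the step size adapts to the steep y-direction without being tuned by hand.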

-

Chapter 4.03: Deep dive: Gradient descent

In this subchapter we examine the gradient descent algorithm in more detail and take a closer look at its convergence behaviour.
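
A standard convergence result for GD (stated here as background; the chapter may derive it in a different form) is: if f is convex with an L-Lipschitz gradient and the step size is 1/L, then f(x_k) - f(x*) <= L * ||x_0 - x*||^2 / (2k). The sketch below checks this bound numerically on the illustrative convex function f(x) = sqrt(1 + x^2), whose second derivative is at most 1, so L = 1 can be assumed.

```python
import math


def f(x):
    return math.sqrt(1.0 + x * x)  # convex, minimum f* = 1 at x* = 0


def grad_f(x):
    return x / math.sqrt(1.0 + x * x)


L = 1.0  # f''(x) = (1 + x^2)^(-3/2) <= 1, so the gradient is 1-Lipschitz
x0 = 10.0
x_star, f_star = 0.0, 1.0

x = x0
gaps, bounds = [], []
for k in range(1, 31):
    x = x - (1.0 / L) * grad_f(x)  # GD step with step size 1/L
    gaps.append(f(x) - f_star)  # suboptimality f(x_k) - f*
    bounds.append(L * (x0 - x_star) ** 2 / (2 * k))  # theoretical O(1/k) bound

for k in (1, 5, 10, 30):
    print(k, gaps[k - 1], bounds[k - 1])
```

Far from the minimum the gradient has magnitude close to 1, so GD moves by roughly one unit per step; close to the minimum it converges much faster than the worst-case 1/k bound suggests.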

-

Chapter 4.04: Weaknesses of GD – Curvature

In this subchapter we look at the curvature of a function and how it affects the gradient descent algorithm.
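
One way to see the effect of curvature is to run GD on f(x, y) = 0.5 * (x^2 + kappa * y^2), whose curvature is 1 along x and kappa along y. The step size must stay below 2/kappa to keep the steep direction stable, which makes progress along the flat direction slow. The function iterations_to_converge and the choice step = 1/kappa are illustrative assumptions.

```python
def iterations_to_converge(kappa, tol=1e-6, max_iter=100_000):
    """GD on f(x, y) = 0.5 * (x^2 + kappa * y^2) with step size 1/kappa (= 1/L).

    kappa is the ratio of largest to smallest curvature (the condition number).
    Returns the number of iterations until |x| and |y| are both below tol.
    """
    x, y = 1.0, 1.0
    step = 1.0 / kappa
    for k in range(max_iter):
        if abs(x) < tol and abs(y) < tol:
            return k
        x -= step * x  # df/dx = x (curvature 1)
        y -= step * kappa * y  # df/dy = kappa * y (curvature kappa)
    return max_iter


for kappa in (1.0, 10.0, 100.0):
    print(kappa, iterations_to_converge(kappa))
```

For kappa = 1 a single step reaches the minimum, while for kappa = 100 the x-coordinate only shrinks by a factor of 0.99 per step and well over a thousand iterations are needed.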

-

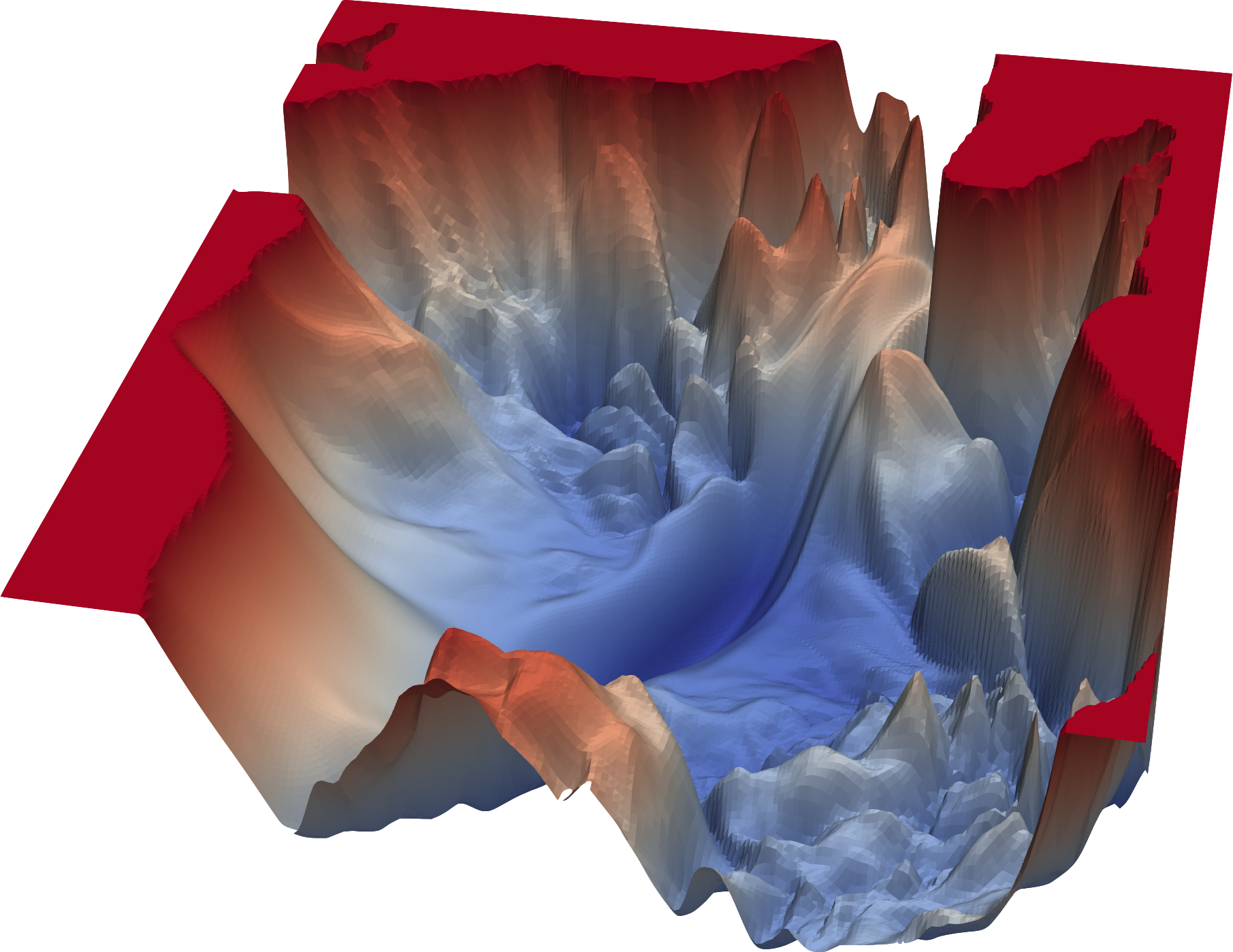

Chapter 4.05: GD – Multimodality and Saddle points

In this subchapter we look at how multimodality (functions with several local minima) and saddle points affect GD.
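
Both effects can be reproduced on small toy functions, chosen here purely for illustration. The double well f(x) = x^4 - 2x^2 has minima at -1 and +1, and GD ends in whichever one the starting point leads to. The function f(x, y) = x^2 + y^4/4 - y^2/2 has a saddle point at (0, 0) and minima at (0, -1) and (0, 1); GD started exactly on the line y = 0 gets stuck at the saddle, while a tiny perturbation lets it escape.

```python
def gd(grad, p, step=0.1, iters=500):
    """Plain GD with a fixed step size on a point given as a tuple."""
    for _ in range(iters):
        g = grad(p)
        p = tuple(pi - step * gi for pi, gi in zip(p, g))
    return p


def grad_double_well(p):
    # f(x) = x^4 - 2x^2, minima at x = -1 and x = +1
    return (4 * p[0] ** 3 - 4 * p[0],)


def grad_saddle(p):
    # f(x, y) = x^2 + y^4/4 - y^2/2, saddle at (0, 0), minima at (0, -1) and (0, 1)
    x, y = p
    return (2 * x, y ** 3 - y)


print(gd(grad_double_well, (0.5,)))  # ends near +1
print(gd(grad_double_well, (-0.5,)))  # ends near -1
print(gd(grad_saddle, (1.0, 0.0)))  # gradient in y is always 0: stuck at the saddle
print(gd(grad_saddle, (1.0, 1e-6)))  # small perturbation grows and GD escapes to (0, 1)
```

Near the saddle the perturbation in y grows only by a factor of about 1.1 per step, so escaping takes well over a hundred iterations: saddle points slow GD down even when they do not trap it.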

-

Chapter 4.06: GD with Momentum

In this subchapter we look at GD with momentum, which adds an accumulation of past gradients to the update.
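
A minimal sketch, assuming the heavy-ball form v_{k+1} = beta * v_k - alpha * grad f(x_k), x_{k+1} = x_k + v_{k+1} (the chapter's exact formulation may differ). It compares plain GD (beta = 0) with momentum (beta = 0.9) on the ill-conditioned quadratic f(x, y) = 0.5 * (x^2 + 100 y^2); the function name count_iterations and all parameter values are illustrative.

```python
def count_iterations(beta, step=0.01, kappa=100.0, tol=1e-6, max_iter=100_000):
    """Heavy-ball GD on f(x, y) = 0.5 * (x^2 + kappa * y^2).

    beta = 0 recovers plain GD. Returns the number of iterations until
    max(|x|, |y|) < tol.
    """
    x, y = 1.0, 1.0
    vx, vy = 0.0, 0.0  # velocity: decaying sum of past gradient steps
    for k in range(max_iter):
        if max(abs(x), abs(y)) < tol:
            return k
        gx, gy = x, kappa * y  # gradient of f
        vx = beta * vx - step * gx
        vy = beta * vy - step * gy
        x += vx
        y += vy
    return max_iter


print("plain GD:", count_iterations(beta=0.0))
print("momentum:", count_iterations(beta=0.9))
```

With the same step size, momentum keeps building up speed along the flat x-direction, so it needs several times fewer iterations than plain GD here; the price is an oscillating, non-monotone path towards the minimum.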

-

Chapter 4.07: GD in quadratic forms

In this subchapter we study GD applied to quadratic forms, for which the behaviour of GD can be analysed exactly.
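
For f(x) = 0.5 * x^T A x - b^T x with symmetric positive definite A, the gradient is A x - b and the error e_k = x_k - x* satisfies e_{k+1} = (I - alpha A) e_k. Along each eigenvector of A with eigenvalue lambda the error is therefore multiplied by (1 - alpha * lambda) per step. The sketch below checks this numerically for an illustrative 2x2 matrix with eigenvalues 2 and 4; the matrix, the starting point and the helper names are assumptions for the example.

```python
# f(x) = 0.5 * x^T A x - b^T x; the minimiser x* solves A x* = b.
A = [[3.0, 1.0], [1.0, 3.0]]  # eigenvalues 4 (eigenvector (1, 1)) and 2 (eigenvector (1, -1))
b = [1.0, 1.0]
x_star = [0.25, 0.25]  # A @ x_star = [1, 1]


def matvec(M, v):
    return [sum(m * vi for m, vi in zip(row, v)) for row in M]


def gd_quadratic(x0, step, iters):
    x = list(x0)
    for _ in range(iters):
        grad = [ai - bi for ai, bi in zip(matvec(A, x), b)]  # grad f = A x - b
        x = [xi - step * gi for xi, gi in zip(x, grad)]
    return x


step = 2.0 / (2.0 + 4.0)  # 2 / (lambda_min + lambda_max)
k = 10
x0 = [1.0, 0.0]
xk = gd_quadratic(x0, step, k)

# Closed-form prediction: split e0 along the eigenvectors, scale by (1 - step * lambda)^k.
e0 = [x0[0] - x_star[0], x0[1] - x_star[1]]
c_plus = (e0[0] + e0[1]) / 2  # component along (1, 1), lambda = 4
c_minus = (e0[0] - e0[1]) / 2  # component along (1, -1), lambda = 2
r_plus = (1 - step * 4.0) ** k
r_minus = (1 - step * 2.0) ** k
predicted = [x_star[0] + c_plus * r_plus + c_minus * r_minus,
             x_star[1] + c_plus * r_plus - c_minus * r_minus]
print(xk, predicted)
```

The slowest factor max |1 - alpha * lambda_i| governs convergence; with the step size 2 / (lambda_min + lambda_max) used here it equals (kappa - 1) / (kappa + 1), which is 1/3 for kappa = 2.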

-

Chapter 4.09: SGD

In this subchapter we look at stochastic gradient descent (SGD) and discuss its stochasticity and convergence behaviour. Furthermore, we look into the effect of the batch size.
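
A minimal minibatch SGD sketch for a one-dimensional linear regression y ≈ w * x + c under squared error. Each update uses the gradient averaged over a random batch instead of the full data set. The synthetic data (y = 2x + 1 plus Gaussian noise), the function name and all hyperparameters are assumptions made for illustration.

```python
import random


def sgd_linear_regression(xs, ys, batch_size, step=0.05, epochs=50, seed=0):
    """Minibatch SGD for y ≈ w * x + c with loss 0.5 * (w*x + c - y)^2."""
    rng = random.Random(seed)
    w, c = 0.0, 0.0
    idx = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(idx)  # new random batches every epoch
        for start in range(0, len(idx), batch_size):
            batch = idx[start:start + batch_size]
            # Gradient of the batch-averaged loss with respect to w and c
            gw = sum((w * xs[i] + c - ys[i]) * xs[i] for i in batch) / len(batch)
            gc = sum((w * xs[i] + c - ys[i]) for i in batch) / len(batch)
            w -= step * gw
            c -= step * gc
    return w, c


# Synthetic data (illustrative): y = 2x + 1 plus small Gaussian noise.
data_rng = random.Random(42)
xs = [data_rng.uniform(-1, 1) for _ in range(200)]
ys = [2 * x + 1 + data_rng.gauss(0, 0.1) for x in xs]

for bs in (1, 10, 200):
    print(bs, sgd_linear_regression(xs, ys, bs))
```

For a fixed number of epochs, a larger batch gives less noisy gradient estimates but fewer updates: with batch size 200 (full-batch GD on this data) the sketch performs only 50 updates and is still visibly far from (2, 1), whereas batch sizes 1 and 10 get close to it.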

-

Chapter 4.10: SGD Further Details

In this subchapter we look into further details of SGD, such as the effect of decreasing the step size, stopping rules and SGD with momentum.
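
The effect of decreasing the step size can be seen on the toy problem of minimising E[(x - z)^2 / 2] with z ~ N(3, 1), whose single-sample gradient is x - z. With a constant step size the iterates keep fluctuating around the minimiser 3; with the schedule alpha_k = 1/(k+1) the iterate is exactly the running mean of the samples and settles down. The problem, the schedules and the function name are illustrative assumptions.

```python
import random
import statistics


def sgd_mean_estimation(step_schedule, n_steps=10_000, seed=0):
    """SGD on f(x) = E[(x - z)^2 / 2] with z ~ N(3, 1); the minimiser is x* = 3.

    step_schedule(k) returns the step size for iteration k.
    Each step uses the single-sample gradient (x - z). Returns all iterates.
    """
    rng = random.Random(seed)
    x = 0.0
    iterates = []
    for k in range(n_steps):
        z = rng.gauss(3.0, 1.0)
        x -= step_schedule(k) * (x - z)
        iterates.append(x)
    return iterates


constant = sgd_mean_estimation(lambda k: 0.1)
decaying = sgd_mean_estimation(lambda k: 1.0 / (k + 1))  # x_k = running sample mean

# Spread of the last 1000 iterates: large for the constant step, tiny for the decaying one.
print(constant[-1], statistics.pstdev(constant[-1000:]))
print(decaying[-1], statistics.pstdev(decaying[-1000:]))
```

A constant step size only brings SGD into a noise region around the minimiser whose width scales with the step size, which is one reason why decreasing schedules, and stopping rules that account for this noise, are used in practice.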

-

Chapter 4.11: ADAM and friends

In this subchapter we introduce several advanced algorithms that build on SGD, such as ADAM and AdaGrad.
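
Both methods scale the step per coordinate using past squared gradients. AdaGrad divides by the square root of the accumulated sum of squared gradients; ADAM keeps exponential moving averages of the gradient and of the squared gradient and applies a bias correction. The sketch below uses deterministic gradients of the illustrative quadratic f(x, y) = 0.5 * (x^2 + 10 y^2) instead of stochastic minibatch gradients to stay short; the step sizes are assumptions, while beta1 = 0.9, beta2 = 0.999 and eps = 1e-8 are the commonly used ADAM defaults.

```python
import math


def grad(p):
    return [p[0], 10.0 * p[1]]  # gradient of f(x, y) = 0.5 * (x^2 + 10 * y^2)


def f(p):
    return 0.5 * (p[0] ** 2 + 10.0 * p[1] ** 2)


def adagrad(p0, step=0.5, iters=500, eps=1e-8):
    p = list(p0)
    g_sq_sum = [0.0] * len(p)  # per-coordinate sum of squared gradients
    for _ in range(iters):
        g = grad(p)
        for i in range(len(p)):
            g_sq_sum[i] += g[i] ** 2
            p[i] -= step * g[i] / (math.sqrt(g_sq_sum[i]) + eps)
    return p


def adam(p0, step=0.05, beta1=0.9, beta2=0.999, iters=500, eps=1e-8):
    p = list(p0)
    m = [0.0] * len(p)  # moving average of gradients (first moment)
    v = [0.0] * len(p)  # moving average of squared gradients (second moment)
    for t in range(1, iters + 1):
        g = grad(p)
        for i in range(len(p)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            m_hat = m[i] / (1 - beta1 ** t)  # bias correction for zero initialisation
            v_hat = v[i] / (1 - beta2 ** t)
            p[i] -= step * m_hat / (math.sqrt(v_hat) + eps)
    return p


print("AdaGrad:", adagrad([1.0, 1.0]), "ADAM:", adam([1.0, 1.0]))
```

Because of the per-coordinate normalisation, the first step of either method moves each coordinate by almost exactly the step size, independent of how steep the function is in that direction.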