Optimization in Machine Learning

This website offers an open and free introductory course on optimization for machine learning. The course is designed holistically and is as self-contained as possible. It covers most of the optimization principles and methods that are relevant to machine learning.

This course is recommended as an introductory graduate-level course for Master's students.

If you want to learn more about this course, please (1) read the outline below and (2) read the section on prerequisites.

As you work through the course, please note: (1) The course uses a unified mathematical notation, and we provide cheat sheets that summarize the most important symbols and concepts. (2) Most sections already contain exercises with worked-out solutions to support self-study as much as possible.

- Chapter 01: Mathematical Concepts

- Chapter 02: Optimization Problems

- Chapter 03: Univariate Optimization

- Chapter 04: First-Order Methods

    - Chapter 4.01: Gradient Descent

    - Chapter 4.02: Step Size and Optimality

    - Chapter 4.03: Deep Dive: Gradient Descent

    - Chapter 4.04: Weaknesses of GD – Curvature

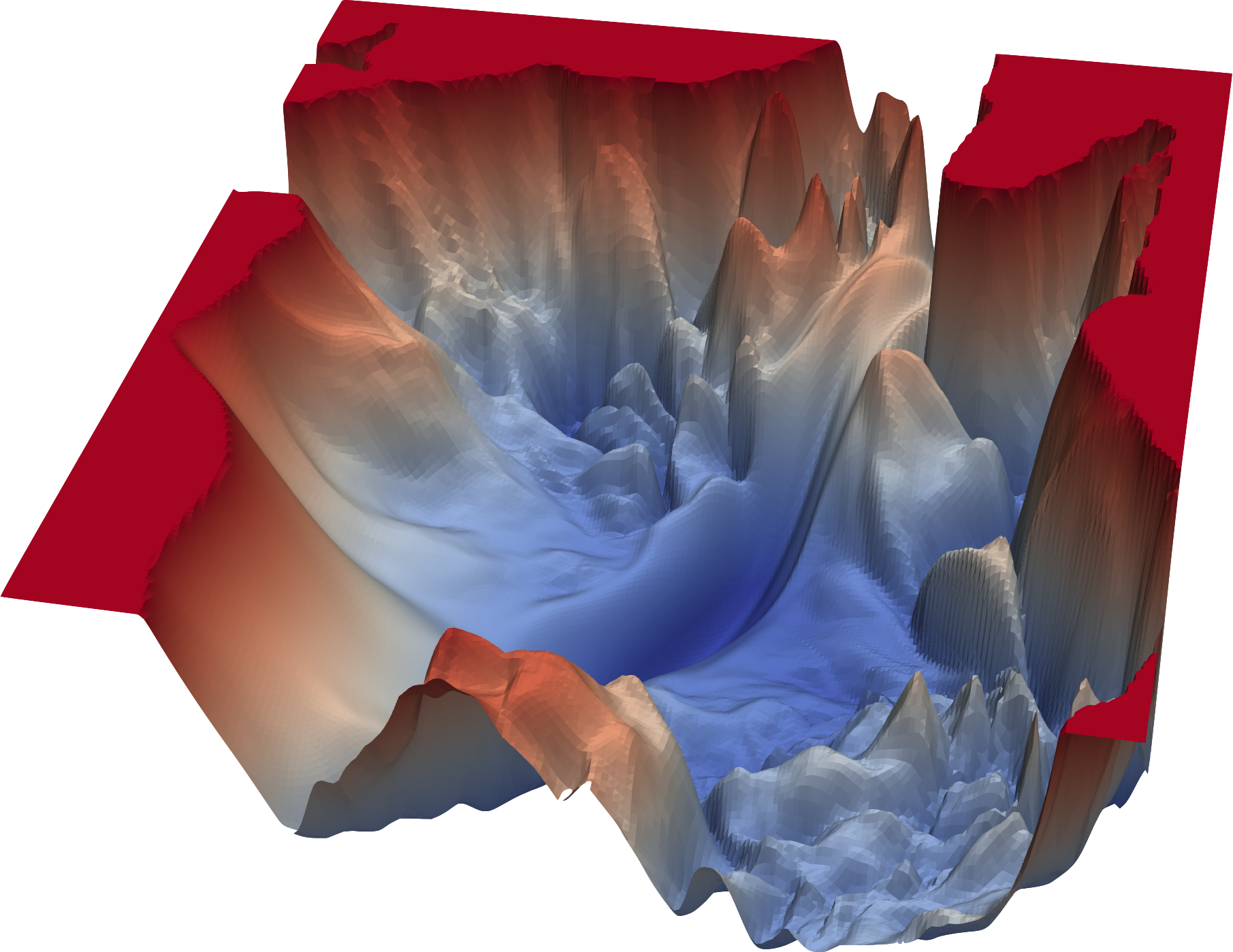

    - Chapter 4.05: GD – Multimodality and Saddle Points

    - Chapter 4.06: GD with Momentum

    - Chapter 4.07: GD in Quadratic Forms

    - Chapter 4.09: SGD

    - Chapter 4.10: SGD: Further Details

    - Chapter 4.11: ADAM and Friends

- Chapter 05: Second-Order Methods

- Chapter 06: Constrained Optimization

- Chapter 07: Derivative-Free Optimization

- Chapter 08: Evolutionary Algorithms

- Chapter 10: Bayesian Optimization